
Memberships

Learn Microsoft Fabric

15.5k members • Free

Fabric Dojo 织物

348 members • $67/month

68 contributions to Learn Microsoft Fabric

Fabric May 2025 Product Updates 👀

Hey everyone! Hope you're doing well! This week is the Build conference, so lots of new features are being rolled out! You can read the full feature summary page here: Fabric May 2025 Feature Summary
Video: https://youtu.be/5qbIn80JrqY?si=a26fNRQnHpykwEPP
I've skimmed through it, and these new features were the most interesting to me:
- Shortcut transformations (Preview)
- Warehouse Snapshots (Preview)
- Continuous Ingestion from Azure Storage to Eventhouse (Preview)
- Introducing Cosmos DB in Microsoft Fabric (Preview)
- Native change data capture (CDC) support in Copy Job (Preview)
- Mirroring for SQL Server On-Premises (Preview)
- Mirroring for SQL Server 2025 (Preview)
- Dataflow Gen2 Public APIs (Preview)
- Dataflow Gen2 parameterization (Preview)
- SharePoint files as a destination in Dataflow Gen2 (Preview)

Upcoming free online conference New Stars of Data, Friday, May 16th, 2025

Hi! You can now sign up for the online conference New Stars of Data: https://www.meetup.com/datagrillen/events/305456700/
If you want to learn how to automate common, repetitive tasks that you're likely to perform in every semantic model, join my session at 10:15 AM CET: "Done in 60 seconds - Speed up your development with Tabular Editor".
Full schedule: https://www.newstarsofdata.com/speakers/
If you have something interesting to share, I recommend sending an abstract for the next round of New Stars of Data (organized twice a year). You will get an experienced mentor and learn a lot along the way.


🧵 FABCON 2025 ANNOUNCEMENTS

Hey everyone, today there will be lots of announcements from FabCon in Las Vegas. We also had a live call where I gave some hot takes on the updates/releases as they came in. See the pinned comment for the recording 👇

3 likes • Apr '25

@Will Needham thank you for a good summary. Coming from the Power BI side, it is hard to keep up with the updates and understand their impact from reading the blogs. There are many exciting Power BI updates (I really hope PBIP and PBIR go GA soon). I came across this one, which looked interesting: https://github.com/microsoft/fabric-toolbox

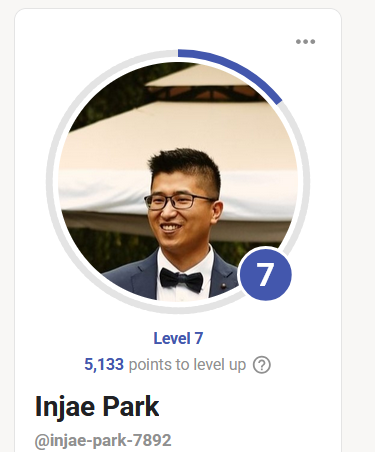

Supporting Injae at the Power BI World Championships!

Best of luck to my friend, fellow YouTuber, and fellow Skool Community owner, Injae Park, who is competing in the Power BI World Championship Finals today at FabCon Las Vegas! He is one of the best in the world at pushing the boundaries of Power BI, so I'm sure he'll do well! If you want to develop market-leading Power BI skills, you should check out his Skool: 👉 🔗 Join Power BI Park Skool Community here (affiliate link)
COME ON INJAE!

Measure table in Lakehouse

Hi all :) I have a question about creating a measure table in a lakehouse. I've read some material online, but I'm stuck. Here are the steps I took:

1. I created a measure table in a notebook:

    # Fabric notebooks already provide `spark`; getOrCreate() returns that session
    from pyspark.sql import SparkSession
    spark = SparkSession.builder.getOrCreate()

    # One-row placeholder table that will host the measures
    data = [(1, 'MeasureTable')]
    columns = ['ID', 'Col1']
    measure_df = spark.createDataFrame(data, columns)
    measure_df.show()

    # Recreate the Delta table in the attached lakehouse
    spark.sql("DROP TABLE IF EXISTS MeasureTable")
    measure_df.write.format("delta").saveAsTable('MeasureTable')

2. In the SQL endpoint, I added measures to the table. When I click the New Report button, I can see MeasureTable, properly formatted. But there is no option to download this report with a connection to the SQL endpoint (the button is inactive).

3. When I go back to the lakehouse and create a new semantic model, my measure table doesn't show the measures I previously added to the default semantic model in the SQL endpoint. It only shows the table created in the notebook:

    +---+------------+
    | ID|        Col1|
    +---+------------+
    |  1|MeasureTable|
    +---+------------+

How do you handle the case where you want default measures added to the model? I would really appreciate your help and advice ;)
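As step 3 shows, measures belong to a specific semantic model: the ones added to the default semantic model are not copied into a new semantic model built on the same lakehouse tables. One way to keep a standard set of measures reusable is to store their definitions in code and render them as TMDL text, which you can then paste under the `table MeasureTable` declaration in a TMDL-aware tool (for example Tabular Editor). This is a minimal sketch, assuming single-line DAX expressions. The `Measure` class, the `render_measures` and `tmdl_name` helpers, and the `Sales` table are hypothetical, and the quoting rule in `tmdl_name` is an assumption based on TMDL's convention of single-quoting names that contain spaces or special characters.

```python
from dataclasses import dataclass
from typing import List, Optional

# Characters assumed to force single-quoting of a TMDL object name
# (whitespace, dot, equals, colon, single quote).
_NEEDS_QUOTES = set(" \t.=:'")


@dataclass
class Measure:
    """Hypothetical container for one reusable measure definition."""
    name: str
    expression: str  # single-line DAX expression
    format_string: Optional[str] = None
    display_folder: Optional[str] = None


def tmdl_name(name: str) -> str:
    """Wrap a name in single quotes (doubling embedded quotes) when needed."""
    if any(ch in _NEEDS_QUOTES for ch in name):
        return "'" + name.replace("'", "''") + "'"
    return name


def render_measures(measures: List[Measure]) -> str:
    """Render measures as TMDL lines, indented one tab so they can sit
    under an existing `table MeasureTable` declaration."""
    blocks = []
    for m in measures:
        lines = [f"\tmeasure {tmdl_name(m.name)} = {m.expression}"]
        if m.format_string is not None:
            lines.append(f"\t\tformatString: {m.format_string}")
        if m.display_folder is not None:
            lines.append(f"\t\tdisplayFolder: {m.display_folder}")
        blocks.append("\n".join(lines))
    # Separate measures with a blank line for readability
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical measures over a hypothetical Sales table
    standard_measures = [
        Measure("Total Sales", "SUM(Sales[Amount])", format_string="#,0"),
        Measure("Order Count", "COUNTROWS(Sales)", display_folder="Orders"),
    ]
    print("table MeasureTable")
    print()
    print(render_measures(standard_measures))
```

Keeping the definitions in one list means the same standard measures can be reviewed and applied to each new semantic model instead of being re-created by hand in every model.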


@eivind-haugen-5500

BI Consultant. Insights, visualization, Tabular Editor and Fabric enthusiast


Joined Mar 12, 2024

Oslo, Norway
