
Owned by Will

Build robust data engineering solutions with Microsoft Fabric & get hired as a Fabric Data Engineer.

Ultimate environment to learn, share & engage with the Microsoft Fabric AI Workflows that are changing the enterprise analytics game - forever.

Memberships

Power BI Park

261 members • $69/m

Azure Innovation Station

809 members • Free

1496 contributions to Learn Microsoft Fabric

Power BI to MS Fabric Migration

Hello, I am new to Power BI and have to migrate Power BI to MS Fabric. What user permissions are needed for the migration: is workspace admin enough, or do we need tenant admin as well? Once the MS Fabric tenant is created, will just reassigning the workspace from Power BI to MS Fabric do? Or are there any step-by-step documents that can help me achieve this? Also, I have some workspaces where I am the owner and some where I am not the owner. How do I identify who the owner is when I see the contact details for that workspace? What pre-checks do I need to take care of?

0 likes • 1h

Hi Shobha, this depends on what you mean by migrating Power BI to MS Fabric. Power BI is part of Fabric - it can be as simple as changing the assigned capacity of the workspace from a Power BI license to a Fabric Capacity. For this you will need to be Workspace Admin, and Capacity Contributor (in Azure), as a minimum.
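For readers who would rather script the capacity reassignment than use the workspace settings page, here is a minimal sketch. It assumes the Fabric REST API's "assign to capacity" workspace endpoint, a bearer token obtained elsewhere, and placeholder IDs; the function name is hypothetical, and the endpoint and required permissions should be checked against the current documentation before use. The sketch only builds the request and does not send it.

```python
import json
from urllib.request import Request

# Assumption: base URL of the Fabric REST API.
FABRIC_API = "https://api.fabric.microsoft.com/v1"


def build_assign_to_capacity_request(
    workspace_id: str, capacity_id: str, access_token: str
) -> Request:
    """Build (but do not send) a request that moves a workspace to a capacity.

    All three arguments are placeholders supplied by the caller.
    """
    body = json.dumps({"capacityId": capacity_id}).encode("utf-8")
    return Request(
        url=f"{FABRIC_API}/workspaces/{workspace_id}/assignToCapacity",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; the caller still needs the workspace and capacity roles mentioned in the reply above.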

Direct Lake model stuck with SQL endpoint connection

Hi, I have a model that should be Direct Lake, but when looking at the Gateway and Cloud connections in settings, it is using a SQL endpoint. Normally this would show a string containing AzureDataLakeStorage, as my other Direct Lake models do. I'm using git integration, so I could look at changing the .tmdl configurations. Any suggestions appreciated.

Table Column Encryption

Is there any way to encrypt a warehouse table column in Fabric?

Dataverse n Fabric integration

Hi all, has anyone dabbled with Dataverse to Fabric integration? Any gotchas that I would need to be wary of?

1 like • 2d

This post / article might be relevant for you, Mark: "Gotcha’s of bringing Dataverse data into Microsoft Fabric". There are also lots of previous conversations via the search: https://www.skool.com/microsoft-fabric/-/search?q=dataverse It's not something I've needed to do personally yet, so I can't help first-hand on that one!

API Pagination in Copy Data pipeline activity

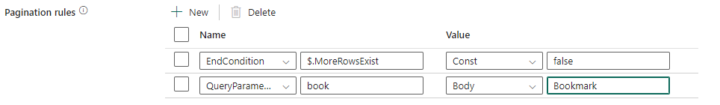

I am working with an API that does pagination via bookmarks. In the response JSON there are two values used for pagination: one indicating whether more records exist, and one holding the parameter to pass in the next request URL. The JSON is structured like this:

{ "Items": [ { ... }, { ... } ], "Bookmark": "<B><P><p>job</p><p>suffix</p><p>site_ref</p></P><D><f>false</f><f>false</f><f>false</f></D><F><v> 1</v><v>0</v><v>BLGS</v></F><L><v> 783</v><v>0</v><v>BLGS</v></L></B>", "MoreRowsExist": true, "Success": true, "Message": null }

When setting up the Pagination rules in the Copy Data activity, how can I pass the value in the Bookmark back to the Relative URL? My current Relative URL looks like this:

@concat('IDORequestService/ido/load/',item().source_table,'?filter=',item().FILTER,'&loadtype=NEXT&Properties=',item().cols,'&recordcap=',pipeline().parameters.recordcount,'&bookmark=')

I need to add the bookmark parameter at the very end. In Azure Data Factory this is done via a parameter set in the Linked Service, but since that doesn't exist in Fabric, I'm stuck getting this to work. I can handle the pagination in a notebook easily, but I wanted to explore using a Data Pipeline to try a more metadata-driven approach.
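For reference, the notebook fallback mentioned in the post could look roughly like the sketch below. It is not the Copy Data pagination rule being asked about. The function names, the `fetch_page` callable (anything that takes a relative URL and returns the parsed JSON), and the decision to URL-encode the filter and bookmark are assumptions made for illustration; the URL layout and the `Items`, `Bookmark`, `MoreRowsExist` and `Success` fields come from the post.

```python
from typing import Any, Callable, Dict, Iterator
from urllib.parse import quote


def build_relative_url(source_table: str, row_filter: str, cols: str,
                       record_cap: int, bookmark: str = "") -> str:
    """Mirror the @concat(...) expression, appending the bookmark last."""
    # Assumption: the bookmark is XML with spaces, so it is percent-encoded.
    return (
        f"IDORequestService/ido/load/{source_table}"
        f"?filter={quote(row_filter, safe='')}"
        f"&loadtype=NEXT&Properties={cols}"
        f"&recordcap={record_cap}"
        f"&bookmark={quote(bookmark, safe='')}"
    )


def iter_items(fetch_page: Callable[[str], Dict[str, Any]],
               source_table: str, row_filter: str, cols: str,
               record_cap: int, max_pages: int = 10_000) -> Iterator[Any]:
    """Yield every item, following Bookmark while MoreRowsExist is true."""
    bookmark = ""  # the first request carries an empty bookmark
    for _ in range(max_pages):
        page = fetch_page(
            build_relative_url(source_table, row_filter, cols,
                               record_cap, bookmark)
        )
        if not page.get("Success", False):
            raise RuntimeError(f"API call failed: {page.get('Message')}")
        yield from page.get("Items", [])
        if not page.get("MoreRowsExist"):
            return
        # Feed the bookmark from this response into the next request.
        bookmark = page.get("Bookmark") or ""
    raise RuntimeError("max_pages reached before MoreRowsExist became false")
```

In a notebook, `fetch_page` would wrap whatever authenticated HTTP client is already in use. The `max_pages` guard is only there to stop an endless loop if the API keeps returning `MoreRowsExist: true`.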


@will-needham-6328

I simplify Microsoft Fabric for data professionals. Passionate about teaching Data & AI.

Active 14m ago

Joined Jan 15, 2024
