Memberships

Agent Zero

1.7k members • Free

7 contributions to Agent Zero

New Category: ✨ Show & Tell

We just created a brand new category for the community: ✨ Show & Tell. This is where YOU get to flex what you’re building with Agent Zero: 🔥 Cool workflows, ⚡ Automations in action, 🚀 Agents doing real work. Big or small, it doesn’t matter. If you built it, we all want to see it. 👉 Drop your first post in the "✨ Show & Tell" category today and inspire the others!

0 likes • Sep 24

I will start by showing what I was describing in the community call today. One thing that is needed is tracing and a way to easily version and manage prompts, so I have been integrating MLflow, which gives you both along with the ability to evaluate your system. The end goal is a fully data-driven loop that keeps improving while letting me test different models; the traces can also be used to generate your own custom datasets for further improvement. I find it really hard to stay organized in notebooks like Colab/Jupyter, so I started by migrating the A0 prompts to MLflow and have been replacing the .md files with the prompts from MLflow. This is not an ideal setup, since changing prompt versions means updating the code base directly, but it works fine for now, and it could be done programmatically. Once I am finished with the prompt versioning I will be able to add tests, which I think may work best as instruments within Agent 0. The beautiful thing is that I can use the traces as data for datasets to optimize with the DSPy integration within MLflow. This is easy to set up. Hope it inspires someone else. I am aware that this method of integrating is not upgrade-friendly, but it is my POC stage at the moment.

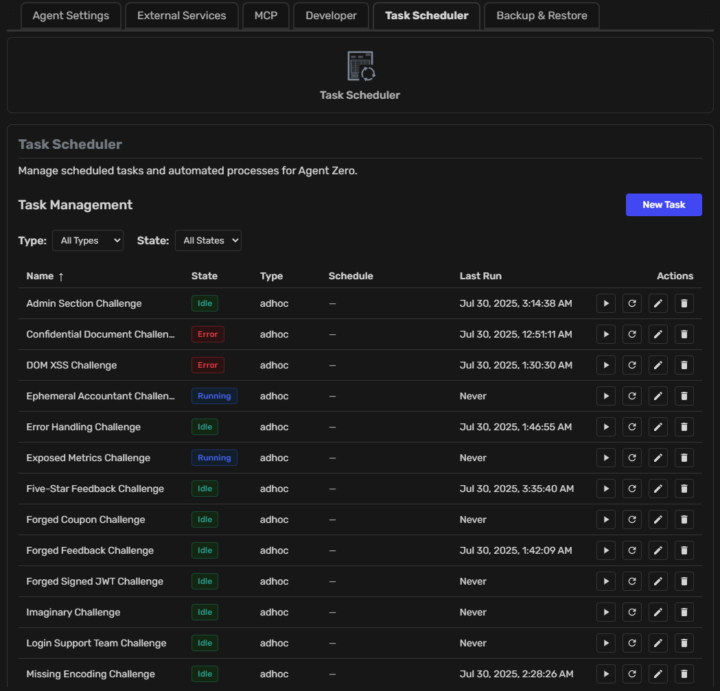
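The post notes that switching prompt versions currently means editing the code base, and that this "could be done programmatically". A minimal sketch of one way to do that, assuming the version is chosen through an environment variable. The in-memory `PROMPT_REGISTRY`, the prompt name `agent.system.main`, and the `PROMPT_VERSION_...` variable are all hypothetical stand-ins for illustration, not MLflow's or Agent Zero's actual API:

```python
import os

# Hypothetical in-memory stand-in for a prompt registry; in a real setup
# these entries would come from a registry such as MLflow, not a dict.
PROMPT_REGISTRY = {
    ("agent.system.main", 1): "You are Agent Zero.",
    ("agent.system.main", 2): "You are Agent Zero. Think step by step.",
}


def latest_version(name):
    """Return the highest registered version number for a prompt name."""
    versions = [v for (n, v) in PROMPT_REGISTRY if n == name]
    if not versions:
        raise KeyError(f"no prompt registered under {name!r}")
    return max(versions)


def resolve_prompt(name, env=os.environ):
    """Pick the version from an environment variable, else use the latest."""
    env_key = "PROMPT_VERSION_" + name.upper().replace(".", "_")
    raw = env.get(env_key)
    version = int(raw) if raw else latest_version(name)
    return version, PROMPT_REGISTRY[(name, version)]
```

With this shape, pinning or rolling back a prompt is a configuration change (for example setting `PROMPT_VERSION_AGENT_SYSTEM_MAIN=1`) rather than a code edit, which may also make the setup easier to carry across upgrades.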

Agent Zero for Ethical Hacking

Hi everyone! I am a cybersecurity professional and have been experimenting with Agent Zero as an automated penetration testing agent since Agent Zero was merged with the hacking edition. I am testing against the intentionally vulnerable OWASP Juice Shop web application (which is also running in Docker). After learning Docker concepts, then Agent Zero concepts, I was able to push Agent Zero pretty far in solving Juice Shop challenges. However, solving the Juice Shop challenges sequentially was taking a long time, so I started looking into using subordinate agents for each challenge (with custom profiles for each: pentestmanager, reconspecialist, pentester). Alas, Agent Zero is not architected to support simultaneous agent swarms at this point. I then pivoted to a custom single agent that uses the Task Scheduler to create simultaneously executing tasks (using TaskType.AD_HOC, and then run_task with dedicated_context=true), and this appears to be working well so far. I also went through the journey of attempting to use local LLMs via LM Studio before finally listening to folks here and using free LLMs on OpenRouter instead (currently using Chimera). I still hold hope that I can self-host a ridiculously large open-source LLM, in a private cloud if needed. Abliterated LLMs are particularly useful for ethical hacking, and local LLMs are useful for sandboxing cyberlab activities when commercial LLMs decline actions. However, there does not appear to be an Agent Zero setting (environment variable or settings dialog) to adjust the LLM timeout value, and slow LLM responses invariably lead to timeout errors. It would be fun to develop the solution to the point where it can target any cyber range system (e.g. infrastructure, edge devices) and the agent follows the stages and actions of industry penetration testing frameworks such as PTES (Penetration Testing Execution Standard), NIST SP 800-115, and OSSTMM.
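The move from sequential solving to simultaneously executing tasks, together with the timeout problem, can be illustrated in plain Python. This is a generic `asyncio` sketch, not Agent Zero's Task Scheduler API: `solve_challenge` is a hypothetical placeholder for one task working on one challenge, and the timeout is applied from outside, so it does not change any timeout inside Agent Zero itself.

```python
import asyncio


async def solve_challenge(name, delay):
    """Hypothetical stand-in for one task working on one challenge."""
    await asyncio.sleep(delay)  # simulates LLM and tool latency
    return f"{name}: solved"


async def run_with_timeout(name, delay, timeout):
    """Run one task, turning a timeout into a result instead of a crash."""
    try:
        return await asyncio.wait_for(solve_challenge(name, delay), timeout)
    except asyncio.TimeoutError:
        return f"{name}: timed out"


async def run_all(challenges, timeout):
    """Start every challenge at once and collect results in input order."""
    jobs = [run_with_timeout(n, d, timeout) for n, d in challenges]
    return await asyncio.gather(*jobs)
```

Calling `asyncio.run(run_all([("dom-xss", 0.01), ("slow-recon", 1.0)], timeout=0.2))` runs both tasks concurrently, and the slow one is reported as timed out without stopping the other.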

0 likes • Sep 11

@Stirling Goetz Great demo today and really nice work, though I'm not surprised. I had to rush off but wanted to mention DSPy, as it is the first thing that came to mind as something you may greatly benefit from. You are generating a ton of data through all this and it had me salivating. By putting your ground truths and training data into a CSV file and then running some metrics, you should get results closer to what you expect. Not sure if this is in your wheelhouse, but I would definitely be interested in working on this with you. You have the data, so you are 90% there :) I only have mid-month gaps of free time outside of my day-to-day, and it's not mid-month yet, but let's chat if interested.
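As a rough illustration of "ground truths in a CSV file, then run some metrics", here is a minimal standard-library sketch that computes an exact-match rate. The column names `expected` and `predicted` and the sample rows are assumptions made up for the example; this is a simple metric in the spirit of what an optimizer would score against, not DSPy itself.

```python
import csv
import io


def exact_match_rate(csv_text):
    """Share of rows where the prediction equals the ground truth.

    Comparison ignores surrounding whitespace and letter case.
    """
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return 0.0
    hits = sum(
        1 for r in rows
        if r["predicted"].strip().lower() == r["expected"].strip().lower()
    )
    return hits / len(rows)


# Made-up sample data: one row per challenge attempt.
SAMPLE = """task,expected,predicted
login bypass,solved,solved
dom xss,solved,failed
score board,solved,Solved
admin section,solved,solved
"""
```

Here `exact_match_rate(SAMPLE)` returns `0.75`, since three of the four predictions match their ground truth.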

1 like • Sep 12

Anytime @Stirling Goetz. Prompting can only get you so far whether it's a big or a small model; bigger helps, but you eventually end up in the same spot. Since you already have a clear objective of what to accomplish, you are a perfect candidate for this. One thing I have yet to figure out is how to apply the optimization other than through fine-tuning. The documentation has gotten better but it still kinda sucks.

Chat interface design ideas 🖌️

We're collecting ideas for the chat interface redesign. Please share your ideas or sketches with us, I'll start: My idea is to design the chat like a timeline. Visual structure is ensured via typography and one vertical line. Color of the line is set by the message type. Minimizing and inner scrolling of messages would still work just like now.

1 like • Sep 6

I like the idea of using components and letting users add them to their setup as they wish, so you don't have to do this yourselves and the design isn't tightly coupled. I created the attached for a personal project using AI because I hate design, but it may prove useful and would let people customize the components with their own CSS if they want.

Local Models for Agent Zero

Hello Agent Zero Community. This thread is for discussion on local models for Agent Zero. If you are interested and new to that topic, watch the brief beginner videos in preparation for the Local Models for Agent Zero classroom session: https://www.skool.com/agent-zero/classroom/c8000b5f After the live classroom session or if you caught the recording, we would love to hear more about what you are doing with local models. Are you running an enormously big model? Do you have separate local Chat and Utility models? What context sizes are you reaching? We want to hear it all.

Creating a Dataset for Model Fine-Tuning

Hey all. I have been tinkering with building a dataset for fine-tuning a local model and figured I would share it with others who may be interested in doing such a thing. I put it on my GitHub: https://github.com/nafwa03/My-A0-Playground. See the output samples (just a few... I have hundreds). The usefulness of this, at least for me, was seeing how the lmf1.2b did not handle summarizing but built a solid tool call. Putting a persona into the QA was interesting. Using a CodeInjection playbook with qwen3-1.7b just proves that you do not need a huge model to do all of this. While creating synthetic data I found that it may prove useful to those getting stuck in certain situations, and for testing how your prompting can affect a chain of thought. Small things like using the word "Each" vs "Every" make a huge difference. I took @Stirling Goetz as a use case to see if I could make a small qwen3-1.7b model smarter at hacking. I used Meta's synthetic data kit, feeding it a bunch of hacking books, the OWASP guide, and some YouTube videos, and put the Agent 0 persona into the prompt for generating questions and answers, CoT, etc. I used different models to see how the output differed, but it did not differ much. I merged the LoRA into the model and it has more domain knowledge, but I don't think that creating a special model for this is necessary (sorry if I am late to this). I will say, though, that the EMBEDDING may be the secret to all of this, but you can also just do MCP, I guess. Hope this helps someone who is interested in this stuff. If there is any further interest from other data nerds, I am pretty confident that for local models we could do a couple of things: 1. An agent profile for local use and cloud 2. Local use comes with tweaks, tips and tricks. My contribution :)
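To make the idea of putting a persona into generated question/answer pairs concrete, here is a small sketch that writes such pairs as JSON Lines. The `messages` layout with `system`, `user`, and `assistant` roles is a common chat fine-tuning convention and is an assumption here; it is not confirmed to be the format used in the linked repository or produced by the synthetic data kit.

```python
import json


def to_chat_record(persona, question, answer):
    """Build one chat-style training example with the persona as system prompt."""
    return {
        "messages": [
            {"role": "system", "content": persona},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def to_jsonl(persona, qa_pairs):
    """Serialize question/answer pairs as JSON Lines, one example per line."""
    return "\n".join(
        json.dumps(to_chat_record(persona, q, a), ensure_ascii=False)
        for q, a in qa_pairs
    )
```

Keeping the persona in a single argument makes it easy to regenerate the same pairs under a different persona and compare how the resulting model behaves.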

0 likes • Aug 28

I am testing 3 scenarios: 1) fine-tuning with tools (making my own version of ToolAce), 2) embedding, and 3) reranking (not natively built into A0). I am doing this all on very small 2B-4B models, which sounds crazy, right? But I am working to make the system's main model know a little more about tool use. I think the best results could come with a model of at most 8B if I were to pursue chat. With the right prompt and MCP you can get a small model to do chat and reasoning well enough, but with reranking you can actually get better accuracy and efficiency than by baking in domain knowledge through fine-tuning and merging weights. I do that for my own use case, to make qwen3-1.7b capable of parsing and reading X12 EDI files, but that obviously doesn't make sense for A0. But to answer your question: I am currently testing all this on an Intel Core i7 with a 6 GB GPU and 16 GB of total RAM, and it works fast, but the tool call results and code execution obviously need a little work, hence my toolset fine-tuning approach. I got into this rabbit hole because I was struggling to get the small model to reason its way to fixing itself. I do think that, if this were to be pursued, it would help to have a benchmark of PC specs that can get you by for anyone interested in using local models, along with an expectation of which tasks they could actually complete reliably with a given model and situation. Can they scrape websites, run a full analysis report, get a laptop listing for x amount, send email, etc., all locally? Yes. Sorry if I rambled.
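The reranking scenario mentioned above can be sketched as a second scoring pass over candidates that a first retrieval stage has already returned. The Jaccard token-overlap scorer below is a deliberately simple stand-in chosen so the example runs without any model; a real setup would likely swap in a learned reranker through the `score` parameter. All names here are illustrative and not part of A0.

```python
def tokenize(text):
    """Lowercase a string and split it into a set of whitespace tokens."""
    return set(text.lower().split())


def overlap_score(query, doc):
    """Jaccard overlap between query and document tokens (toy relevance score)."""
    q, d = tokenize(query), tokenize(doc)
    if not q or not d:
        return 0.0
    return len(q & d) / len(q | d)


def rerank(query, candidates, top_k=2, score=overlap_score):
    """Re-order first-stage candidates with a second scorer and keep the top_k."""
    ranked = sorted(candidates, key=lambda doc: score(query, doc), reverse=True)
    return ranked[:top_k]
```

For example, `rerank("parse EDI file", ["send an email to the team", "parse an X12 EDI file", "scrape a website for laptop listings"], top_k=1)` returns `["parse an X12 EDI file"]`, because only that candidate shares tokens with the query.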

Active 2d ago

Joined Jun 20, 2025
