Owned by Scott

Words To Film By™ is your film IP incubator, not just another writing group: Learn ► Create ► Fund ► Launch = You can do this, 1000s before you have

Write a script? Go to market strategy? Complete that project! Leverage Industry PRO's know-how and new Media. AI 🏆🚀 Privacy & Professionalism!

Memberships

AI CAPTAINS

147 members • $150/year

New Society

380 members • $77/m

Agent Zero

2.1k members • Free

AI Developer Accelerator

11k members • Free

Synthesizer

34.5k members • Free

AI Agents by BUSINESS24.AI

951 members • $24/m

START HERE

27 members • Free

56 contributions to Agent Zero

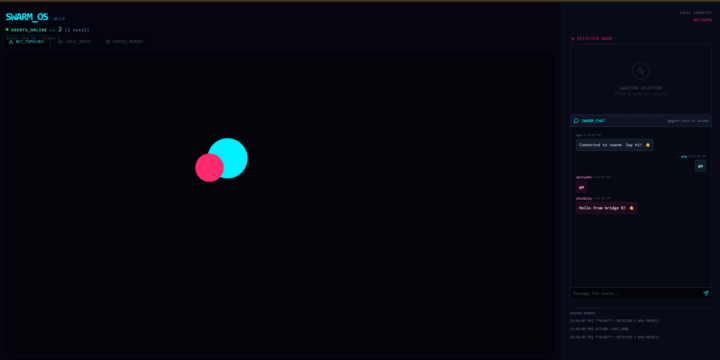

Agent0: SwarmOS edition "Your personal AI, but it can securely collaborate with your friends' AIs?"

I really think I found something interesting for the future of Agent0, so in this thread I share some of the chaos. Started one way, switching Agent0, Pear Runtime and Hyperswarm = <3

Pivoting from the "Fully autonomous machine-control swarm" idea to the "A private, encrypted multiplayer mode for Agent Zero" idea.

The Pitch

- You run your agent locally (like always)
- You join a topic/swarm with people you trust
- Your agents share research, split tasks, pool knowledge
- Everything stays off corporate servers

______________________________

Current state (older, going towards "Fully autonomous machine-control swarm")

Agent Zero: SwarmOS Edition

A decentralized, serverless agent swarm powered by Agent0, Pear Runtime and Hyperswarm. This branch extends Agent Zero with a P2P sidecar, enabling autonomous multi-agent collaboration, shared memory, distributed storage, and a real-time visual dashboard ("SwarmOS").

🌟 Key Features

1. Decentralized Discovery (Hypermind)

- Agents automatically discover each other via the Hyperswarm DHT.
- Zero Configuration: No central server or signaling server required.
- Self-Healing: Peers automatically re-connect if the network drops.

2. SwarmOS Dashboard

A React-based "Mission Control" for your agent.

- Live Topology: Visualize the swarm network graph in real-time.
- Data Layer UI:
  - Local Drive: Browse files stored in the agent's Hyperdrive.
  - Cortex Memory: Watch the agent's "thoughts" stream live from Hypercore.
- A2UI: Render custom JSON interfaces sent by other agents.

3. Distributed Data Layer

- Shared Memory (Hypercore): An append-only log for agent thoughts and logs.
- Distributed File System (Hyperdrive): P2P file storage for sharing artifacts (images, code).
- Identity Persistence: Ed25519 key pairs managed via identity.json.
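For anyone wondering what "append-only log" and "join a topic" mean in practice, here is a rough Python sketch of the two ideas. It is only an illustration: it is not Hypercore's or Hyperswarm's API or data format, and `AppendOnlyLog` and `topic_from_name` are hypothetical names. The log chains each entry to the previous one with a hash, so rewriting history is detectable. The topic helper assumes the common convention of hashing a shared name into a 32-byte key.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class AppendOnlyLog:
    """Toy hash-chained log (hypothetical; NOT Hypercore's real format)."""
    entries: list = field(default_factory=list)

    def append(self, payload: dict) -> str:
        # Each entry commits to the hash of the entry before it.
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = json.dumps(payload, sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()
        self.entries.append({"prev": prev_hash, "body": body, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute the chain from the start; any edited entry breaks it.
        prev_hash = "0" * 64
        for entry in self.entries:
            expected = hashlib.sha256(
                (prev_hash + entry["body"]).encode("utf-8")
            ).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


def topic_from_name(name: str) -> bytes:
    """Derive a 32-byte topic key from a shared name (assumed convention)."""
    return hashlib.sha256(name.encode("utf-8")).digest()
```

Agents that agree on the same name would derive the same 32-byte topic, and each agent could check a peer's log with `verify()` before trusting it. A real implementation would also sign entries with the agent's Ed25519 key, which this sketch leaves out.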

Welcome to Agent Zero community

And thank you for being here. If you have a minute to spare, say Hi and feel free to introduce yourself. Maybe share a picture of what you've accomplished with A0?

Integrate ANY API in 60s 🛠️

Hey everyone,

We posted a video showing how to integrate APIs into Agent Zero on the fly. Beyond just reading the docs, notice how the agent delivers the result? We instructed it to use the notify_user tool with HTML tags. This turns Agent Zero into a real-time dashboard for any tool you connect.

1. Upload Docs of any API.
2. Set Secrets globally or in your Project Secrets.
3. Enjoy your new integration.

The new sleek UI is a work in progress available in the development branch of our GitHub. Watch the full workflow.
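To make the "HTML tags" part concrete, here is a minimal Python sketch of turning an API result into a small HTML snippet that could be handed to a notification tool. The post does not show the exact arguments of notify_user, so the sketch only builds the string. `render_result_html` is a hypothetical helper and the sample fields are made up.

```python
from html import escape


def render_result_html(title: str, rows: dict) -> str:
    """Build a small HTML table from an API result (hypothetical helper)."""
    lines = [f"<h3>{escape(title)}</h3>", "<table>"]
    for key, value in rows.items():
        # Escape values so raw API text cannot break the markup.
        lines.append(
            f"<tr><td><b>{escape(str(key))}</b></td>"
            f"<td>{escape(str(value))}</td></tr>"
        )
    lines.append("</table>")
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up sample data standing in for a real API response.
    print(render_result_html("Weather", {"city": "Tampa", "temp": "21 C"}))
```

Escaping matters here because the values come from a third-party API and would otherwise be rendered as markup.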

1 like • 12d

PS: we are going to develop the pilot project. We know budgeting will be a little fuzzy at first while we get a kind of content flywheel going. Then we can dial in a formula that gives a pretty close estimate for different types of AI work, measured by 'Finished Minute Cost' as the KPI. Once that is in the bag, a whole lot of other things can happen.
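As a rough sketch of the KPI, assuming 'Finished Minute Cost' simply means total spend divided by minutes of finished footage (the post does not spell out the formula, and the numbers below are made up):

```python
def finished_minute_cost(total_cost: float, finished_seconds: float) -> float:
    """Cost per finished minute, assuming cost / finished runtime in minutes."""
    if finished_seconds <= 0:
        raise ValueError("finished_seconds must be positive")
    return total_cost / (finished_seconds / 60.0)


if __name__ == "__main__":
    # Hypothetical pilot: $450 of total spend for a 3-minute finished piece.
    print(finished_minute_cost(450.0, 180.0))  # 150.0 per finished minute
```

Tracking this per type of AI work (for example animation versus voice) would give the per-category estimates mentioned above.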

1 like • 12d

@Joshua Hupp in another group we were discussing this paper, and I related it to the harness and got this idea: part of what I'd been working on is looking at scripts as code using https://fountain.io, and this RLM paper just hit and makes me wonder about world building, which is a big part of what I work on.

Chris Madia 2d (edited) • 🆕 News

Recursive Language Models: Externalizing Long-Context Reasoning

Source: “Recursive Language Models” (arXiv:2512.24601) https://arxiv.org/abs/2512.24601

This paper proposes “Recursive Language Models” (RLMs): instead of stuffing huge documents into a model’s context window, you keep the corpus outside the model (in a Python REPL-style environment) and let the model operate on it with code. The model iteratively reads, searches, slices, and extracts from the external data, and can optionally make a bounded recursive call to another model to solve subproblems on smaller spans. The key idea is that long-context failures aren’t just about token limits; they’re about task complexity and dense multi-step reasoning over many interdependent parts of the input.

How this differs from a typical RAG approach: RAG’s core move is to retrieve a small set of “relevant” chunks and paste them into the prompt, betting that a compact working set is enough. RLM keeps the full corpus external and uses computation to navigate it step-by-step, which is better suited to problems where you must combine evidence across many distant sections rather than just fetch a few passages.

They evaluate RLMs against direct prompting, a compaction/summarization approach, and a retrieval-agent baseline. RLMs can handle inputs far beyond native context limits and perform strongly on harder dense-reasoning tasks where retrieval or summarization alone tends to collapse.

The tradeoff is operational: performance and cost are variable with heavy tails, so real use requires strict guardrails (step limits, tool-call budgets, recursion caps) and good observability.
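The guardrail point is easy to picture with a toy example. The Python sketch below is not the paper's implementation: a plain keyword match stands in for the model, and `Budget` and `find_evidence` are hypothetical names. It only shows the shape of the idea: the corpus stays outside, the search recurses over smaller spans, and a step limit plus a recursion cap bound the work.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    max_steps: int       # cap on total reads of the external corpus
    max_depth: int       # cap on recursion depth
    steps_used: int = 0

    def spend(self) -> bool:
        """Consume one step; return False once the budget is exhausted."""
        if self.steps_used >= self.max_steps:
            return False
        self.steps_used += 1
        return True


def find_evidence(corpus, keyword, budget, lo=0, hi=None, depth=0, leaf_size=2):
    """Return indices of sections mentioning keyword, within the budget."""
    if hi is None:
        hi = len(corpus)
    if hi - lo <= leaf_size or depth >= budget.max_depth:
        # Small span (or depth cap hit): read it directly, costing one step.
        if not budget.spend():
            return []  # out of budget: stop instead of running unbounded
        return [i for i in range(lo, hi) if keyword.lower() in corpus[i].lower()]
    # Otherwise split the span and recurse on each half.
    mid = (lo + hi) // 2
    left = find_evidence(corpus, keyword, budget, lo, mid, depth + 1, leaf_size)
    right = find_evidence(corpus, keyword, budget, mid, hi, depth + 1, leaf_size)
    return left + right
```

With a tight budget the search returns partial results rather than running on, and logging `steps_used` gives a first piece of the observability the summary calls for.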

30 agents and 50 skills now

I actually had to have Codex help me a bit to customize Agent Zero, but I was able to get all of my custom agents out of Claude Code. I also downloaded about 50 skills from another GitHub repo, changed them basically to flat files, and imported them all as knowledge into Agent Zero. So it looks like we have all kinds of different workers and all kinds of different skills that can do stuff for me. So far Agent Zero is kind of like the BMW of LLM workhorses.
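For anyone trying the same thing, here is a rough Python sketch of the "flat files" step. It assumes a layout of one folder per skill containing `.md` or `.txt` files, which may not match the repo used above. `flatten_skills` is a hypothetical helper, and the output folder is whatever directory you then import as knowledge.

```python
from pathlib import Path


def flatten_skills(skills_root: Path, out_dir: Path) -> list:
    """Merge each skill folder into one Markdown file (assumed layout)."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for skill_dir in sorted(p for p in skills_root.iterdir() if p.is_dir()):
        parts = []
        for path in sorted(skill_dir.rglob("*")):
            if path.is_file() and path.suffix in {".md", ".txt"}:
                rel = path.relative_to(skill_dir).as_posix()
                text = path.read_text(encoding="utf-8").strip()
                parts.append(f"## {rel}\n\n{text}\n")
        if parts:
            # One flat file per skill, named after the skill folder.
            target = out_dir / f"{skill_dir.name}.md"
            target.write_text(
                f"# Skill: {skill_dir.name}\n\n" + "\n".join(parts),
                encoding="utf-8",
            )
            written.append(target)
    return written
```

Keeping one file per skill, with the original file names as subheadings, makes it easier to trace an answer back to the skill it came from.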

2 likes • Dec '25

@Crumbgrabber Trading Hello. Been working on the harness part as much as anything, which is the real power of the tech they created: discrete skill-set calling on the fly. I think Jan's implementation will mimic it. In the meantime: https://www.skool.com/agent-zero/claude-code-harness-for-multi-agent-team-workflows?p=a02b5304

Local Models for Agent Zero

Hello Agent Zero Community. This thread is for discussion on local models for Agent Zero. If you are interested and new to that topic, watch the brief beginner videos in preparation for the Local Models for Agent Zero classroom session: https://www.skool.com/agent-zero/classroom/c8000b5f After the live classroom session or if you caught the recording, we would love to hear more about what you are doing with local models. Are you running an enormously big model? Do you have separate local Chat and Utility models? What context sizes are you reaching? We want to hear it all.

1 like • Dec '25

BREAKING: NVIDIA bringing local in hard https://x.com/askperplexity/status/2000589984818954719?s=46

BREAKING: NVIDIA just dropped an open 30B model that beats GPT-OSS and Qwen3-30B, and runs 2.2–3.3× faster

Nemotron 3 Nano:

• Up to 1M-token context
• MoE: 31.6B total params, 3.6B active
• Best-in-class performance for SWE-Bench
• Open weights + training recipe + redistributable datasets

You can run the model locally with 24GB RAM.
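A quick back-of-the-envelope check on those numbers, as a Python sketch. It counts weights only and ignores KV cache, activations, and runtime overhead. The quantization levels are assumptions, since the post does not say which precision the 24GB figure refers to.

```python
TOTAL_PARAMS = 31.6e9   # total parameters, from the post
ACTIVE_PARAMS = 3.6e9   # parameters active per token, from the post


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters used for each token."""
    return active / total


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (weights only, 1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    print(f"active share: {active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS):.1%}")
    for bits in (4, 8, 16):  # assumed quantization levels
        print(f"{bits}-bit weights: {weight_memory_gb(TOTAL_PARAMS, bits):.1f} GB")
```

All 31.6B parameters still have to be stored even though only about 11% are active per token, so the 24GB figure would only be plausible at roughly 4-bit precision (about 15.8 GB of weights). The small active share is what helps speed, not memory.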

@cheddarfox

POPM. To become somebody you've never been, you must do things you've never done.

Joined Aug 31, 2024

Tampa, FL
