Owned by Michael

Build real-world AI fluency to confidently learn & apply Artificial Intelligence while navigating the common quirks and growing pains of people + AI.

Memberships

OpenClaw Lab

272 members • $29/month

AI for Life

13 members • Free

Jacked Entrepreneurs

323 members • Free

AI Automation Society Plus

3.3k members • $99/month

270 contributions to AI Bits and Pieces

🎯 Naming Your AI Agency Part 5 of 5: Taglines - The Hidden Multiplier

You don’t need to have the company name do all the work. That’s rarely necessary.

In many cases, the name carries identity, and the tagline carries clarity. Together, they do far more than either one alone.

Think of it this way. The name is the container. The tagline explains what’s inside.

A strong tagline answers the question people almost always ask when they hear a company name: “What exactly do you do?”

It clarifies your positioning. It reduces confusion. It strengthens your market signal.

For example:

AI & Data Strategies LLC
Adopt AI with confidence.

The name signals the lane. The tagline signals the outcome.

Or take AI Bits & Pieces. The name carries story and identity. The tagline clarifies the tone and focus.

AI Bits & Pieces
Quick quips, quirks, and insights on people + AI

Used together, they create signal.

🎯 What a Good Tagline Should Do

A strong tagline usually clarifies at least one of three things:

- What you do
- Who you help
- What outcome you create

For example:

- AI Education for Operators
- Agent Systems for Founders
- Adopt AI with confidence

Short. Clear. Memorable. It shouldn’t feel like a paragraph. It should feel like positioning.

🎯 The Simple Test

Look at your name and tagline together. If someone reads both and still asks, “So what exactly do you do?” It needs tightening.

The goal isn’t cleverness. The goal is signal.

🎯 The Strategic Advantage

A well-constructed name and tagline together give you:

- Clarity
- Story
- Positioning
- Flexibility
- Longevity

The name anchors identity. The tagline carries explanation. And explanation is where positioning lives.

🎯 Final Thought for the Series

Naming isn’t about sounding innovative. It’s about signaling the kind of company you’re building.

Some names carry story. Some names carry clarity. Some names optimize for search. Some names are built for longevity.

The key is choosing intentionally. And then supporting that name with positioning that makes the signal clear.

For example:

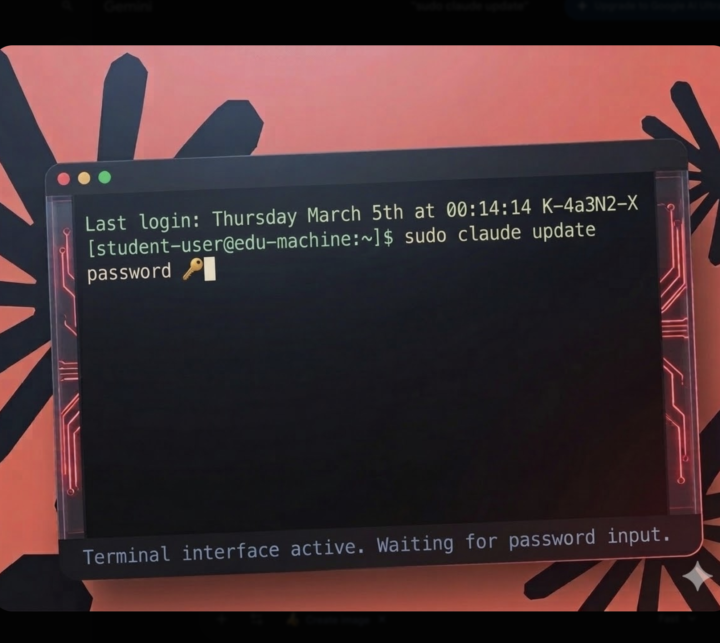

Two ways to update Claude on Mac. Here's exactly how to do both.

Claude Desktop (the app you're probably using right now):

Look at the very top of your screen. You'll see the Apple logo on the far left. Right next to it, you'll see the word "Claude." Click that. A small menu drops down. Click "Check for Updates." Done.

Claude Code (the terminal version):

Look at the toolbar at the bottom of your screen (that's called the Dock). If Terminal is pinned there, just click it. If it's not there: open your Applications folder, type "Terminal" in the search bar, and click it when it shows up. Once it's open, right-click the Terminal icon in your Dock and select "Keep in Dock" so it's always there going forward.

A black window opens. Don't panic. Type this exactly:

sudo claude update

Hit Enter. It'll ask for your Mac password. Type it in. You won't see it appear as you type; that's completely normal. Hit Enter again. It updates automatically.

Two tools. Two steps. Neither one is hard.
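If you'd like to confirm that the Claude Code update actually changed something, here is a minimal sketch that prints the version before and after updating. It is a variation on the steps above, not a replacement for them. The function name `update_claude_code` and the `CLAUDE_BIN` variable are made up for this illustration. It also assumes the update works without `sudo` on your Mac; if you see a permissions error, fall back to the `sudo claude update` command from the post.

```shell
#!/bin/sh
# CLAUDE_BIN is a hypothetical override used only in this sketch.
# It defaults to the normal `claude` command.
CLAUDE_BIN="${CLAUDE_BIN:-claude}"

update_claude_code() {
  # Stop early with a readable message if the command is not installed.
  if ! command -v "$CLAUDE_BIN" >/dev/null 2>&1; then
    echo "Claude Code not found: $CLAUDE_BIN"
    return 1
  fi

  # Show the version before and after, so the change is visible.
  echo "Before: $("$CLAUDE_BIN" --version)"
  "$CLAUDE_BIN" update   # assumption: no sudo needed on this machine
  echo "After: $("$CLAUDE_BIN" --version)"
}
```

Paste the block into Terminal, then type `update_claude_code` and hit Enter. If the two version lines match, you were most likely already up to date.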

👋 Welcome to AI Bits and Pieces!

We’re glad you’re here. This community is all about exploring the human side of AI through bite-sized insights, quips, quirks, and practical stories you can use right away.

🚀 Your First Step

Introduce yourself below! Share a bit about who you are, how you’re using AI, or where you’re curious to start.

🎓 Start Learning In the Classroom

New here? A great place to begin is our Classroom Training. It’s designed to help you build AI literacy and fluency in small, practical bites you can use in conversations, projects, and learning.

📝 We Encourage You to Post Often

We’ve got three main spaces. Here’s what each one is for, with examples 👇

❓ Ask the Community

Use this when you’re looking for help, advice, or feedback. Examples:

- “Has anyone tried ___?”
- “How would you approach ___?”
- “I’m stuck on ___. Any ideas?”

💬 General Discussion

Use this for thoughts, updates, conversations, or anything that doesn’t fit elsewhere. Examples:

- “Lately I’ve been thinking about ___”
- “Sharing something interesting I came across”
- “Curious what everyone thinks about ___”

🎉 Wins

Use this to celebrate progress, big or small. Examples:

- “Finally finished ___!”
- “Small win, but I’m proud of it”
- “Hit a milestone today 🎉”

✨ If you’re ever unsure, General Discussion is always a safe choice. Jump in anytime. Short posts are more than welcome!

📌 No Apology Spam Free Community

Please take a moment to review our Community Rules. This is directed at spammers. As you will see, we are very diligent about keeping our community free of spam so our members can enjoy their experience. We make several sweeps per day. If you see any spam, please report it and it will be taken care of immediately. Thank you.

We’re building this community one small piece at a time, and we’re glad you’re part of it.

AI in Real Life: When ChatGPT Rode Shotgun to Florida

We were deep in conversation, driving to Florida. Talking at some length about the 2026 strategy for AI Bits & Pieces. What stays. What evolves. What new ideas might want a little room to grow. After a lot of back and forth, there was a pause. The kind that means someone is thinking, not finished. Then Michael started thinking out loud — as he does. I was listening. Or so I thought. Michael was reciting a finished thought — pulling together all the pieces of the conversation we’d just had about AI Bits & Pieces and its next chapter. Naturally, I answered. Quickly. Confidently. Like a spouse who’s been married a long time and knows the rhythm of these conversations. And then ChatGPT started talking. And then it just… stopped. Like, oh — sorry, go ahead. I remember thinking, "Why did it start talking?" Completely forgetting that Michael had ChatGPT set to voice mode to capture our thoughts and notes. So, I kept going. Added a little more context. And then, suddenly, ChatGPT jumped back in and essentially said, “Yes, I agree with Michele.” 😳 I looked at Michael with that "what just happened" face. That’s when it clicked. He wasn’t asking me. He was asking "TARS" (yes, from *Interstellar*) — as Michael calls ChatGPT. And somehow, without meaning to, I had jumped into a three-way conversation… and the AI wasn’t waiting at all — more like a cat behind the couch, ready to spring. I didn’t know whether to laugh or shake my head in bewilderment. Probably both 😂 And I thought to myself — "damn… it’s already here." Woven quietly into our conversations, our thinking, our planning. It made me wonder — where else is AI showing up that I’m not even consciously aware of? We’re just going on about our day — the kind of conversation I’ve had a thousand times with my partner of 27 years — and it’s already inserting itself into our lives. And maybe that’s how the biggest changes arrive — already settled in, before we realize we’ve adjusted. And that's AI in Real Life...

AI in Real Life: A New Series by Michele Wacht

I’m excited to share something special with our AI Bits & Pieces community, especially its AI-curious members. 🥁

🚀 Starting this week, my wife Michele will be contributing a new series called AI in Real Life: a warm, honest look at what it actually feels like to learn and use AI from the perspective of an everyday, real-world user.

✨ AI in Real Life is for anyone who’s ever thought: “I’m curious… but where do I begin?”

This series will follow her personal journey with ChatGPT and other AI tools as she explores how they show up in everyday life: conversations with family, planning and organizing, trying new ideas, and even navigating the hesitation many of us felt in the beginning. Each week, Michele will share a short story, a small discovery, or a real-life moment that brought AI into her world in a simple, human way. My hope is that her voice helps make this community feel even more welcoming for those who are just getting started.

________

🕰️ By way of background, Michele (@Michele Wacht) spent twenty years as an executive selling services to the automotive OEM industry. She came from a corporate marketing and sales background, achieving top salesperson status at her company for many years before stepping away eight years ago to prioritize our family and be fully present for our daughter during her teen years.

Now that Emma is off to college and recently turned 21, Michele felt ready to re-engage. And to my delight, she decided to join AI Bits and Pieces in helping people understand the benefits of AI, not from the perspective of an engineer or a strategist, but from the vantage point of someone discovering her own curiosity and how AI fits in as a life skill.

If you’ve read Michele’s writing, as I and many of her friends have, you know she has a gift for turning simple moments into meaningful reflections. She approaches AI the same way: with curiosity, humor, and a down-to-earth honesty that reminds us that learning something new doesn’t always start with confidence. Sometimes it starts with dinner plans for friends, a college-age daughter on speed dial, and a willingness to try (a preview of her first post).


@michael-wacht-9754

Creator of AI Bits and Pieces | AIS+ Assistant Community Manager

Active 2h ago

Joined Aug 23, 2025

Mid-West United States
