Migrating prompts across models is a pain in the ass

Sometimes, our carefully crafted prompts work superbly with one model but fall flat with another. This can happen when we’re switching between model providers, as well as when we upgrade across versions of the same model. For example, Voiceflow found that migrating from gpt-3.5-turbo-0301 to gpt-3.5-turbo-1106 led to a 10% drop on their intent classification task. (Thankfully, they had evals!) GoDaddy, by contrast, observed a trend in the positive direction, where upgrading to version 1106 narrowed the performance gap between gpt-3.5-turbo and gpt-4. (Or, if you’re a glass-half-empty person, you might be disappointed that gpt-4’s lead was reduced with the new upgrade.) Thus, if we have to migrate prompts across models, we should expect it to take more time than simply swapping the API endpoint. Don’t assume that plugging in the same prompt will lead to similar or better results. Also, having reliable, automated evals helps with measuring task performance before and after migration, and reduces the effort needed for manual verification. Article link: https://applied-llms.org/#prompting
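To make the "evals before and after migration" point concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the tiny labeled set, the `old_model` and `new_model` stand-ins (keyword rules rather than real API calls), and the `max_drop` threshold. In practice each classifier would wrap a call to the model version under test with the same prompt.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical labeled eval set: (user utterance, expected intent).
EVAL_SET: List[Tuple[str, str]] = [
    ("I want a refund", "refund"),
    ("Where is my order?", "order_status"),
    ("Cancel my subscription", "cancel"),
    ("Refund please", "refund"),
]


def old_model(text: str) -> str:
    """Stand-in for the current model + prompt (assumption, not a real API)."""
    lower = text.lower()
    if "refund" in lower:
        return "refund"
    if "order" in lower:
        return "order_status"
    if "cancel" in lower:
        return "cancel"
    return "other"


def new_model(text: str) -> str:
    """Stand-in for the candidate model; it deliberately misses 'cancel'."""
    lower = text.lower()
    if "refund" in lower:
        return "refund"
    if "order" in lower:
        return "order_status"
    return "other"


def accuracy(classify: Callable[[str], str],
             examples: List[Tuple[str, str]]) -> float:
    """Fraction of examples where the predicted intent matches the label."""
    correct = sum(1 for text, expected in examples if classify(text) == expected)
    return correct / len(examples)


def compare_migration(old: Callable[[str], str],
                      new: Callable[[str], str],
                      examples: List[Tuple[str, str]],
                      max_drop: float = 0.02) -> Dict[str, object]:
    """Score both models on the same set and flag a drop larger than max_drop."""
    old_acc = accuracy(old, examples)
    new_acc = accuracy(new, examples)
    return {
        "old": old_acc,
        "new": new_acc,
        "delta": new_acc - old_acc,
        "regressed": (old_acc - new_acc) > max_drop,
    }


report = compare_migration(old_model, new_model, EVAL_SET)
```

Running the same comparison on every prompt or model change turns "did the migration hurt us?" into a number instead of a round of manual spot checks.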

Using Claude Code's browser tools for market research

Quick workflow I stumbled into that's been surprisingly useful. Instead of manually analyzing a platform or community, I have Claude Code do it:

• Navigate to the target site
• Screenshot key pages
• Analyze content patterns
• Report back structured findings

Did this for this Skool community yesterday. In about 10 minutes I had:

• Community size and activity levels
• All the content categories
• Which post formats get engagement
• Platform-specific formatting quirks (like code blocks not rendering)
• Tone patterns from high-performing posts

The browser automation caught things I would have missed. Like discovering the platform doesn't render markdown properly. I would have built the wrong thing without that insight.

The prompt pattern is simple: "Navigate to [URL], analyze the content structure and engagement patterns, tell me what resonates with this audience."

Works for competitor analysis, community research, content strategy: anywhere you need to understand what actually works vs what you assume works.

Anyone else using Claude's browser tools for research like this?
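If you run this against several sites, the prompt pattern from the post can be kept as a small template. This is only a sketch: the function name `build_research_prompt` and the URL check are my own additions, and the resulting string is meant to be pasted into Claude Code, not sent through any particular API.

```python
# Prompt wording taken from the post; {url} replaces the [URL] placeholder.
PROMPT_TEMPLATE = (
    "Navigate to {url}, analyze the content structure and engagement patterns, "
    "tell me what resonates with this audience."
)


def build_research_prompt(url: str) -> str:
    """Fill the research prompt for one target site (hypothetical helper)."""
    if not url.startswith(("http://", "https://")):
        raise ValueError(f"expected a full URL, got: {url!r}")
    return PROMPT_TEMPLATE.format(url=url)


# Example with a placeholder address.
prompt = build_research_prompt("https://example.com/community")
```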

Have you guys heard of Pencil on Claude Code?

https://x.com/tomkrcha/status/2014028990810300498?s=12

AI Engineering Treasure Trove

Hey guys, I wanted to recommend this article from Alex Razvant (I mentioned him in the call today). His article has really important resources, especially on deploying AI systems: https://read.theaimerge.com/p/the-smartest-ai-engineers-will-bet?utm_source=post-email-title&publication_id=2799726&post_id=184113282&utm_campaign=email-post-title&isFreemail=true&r=1i4qau&triedRedirect=true&utm_medium=email Check it out!

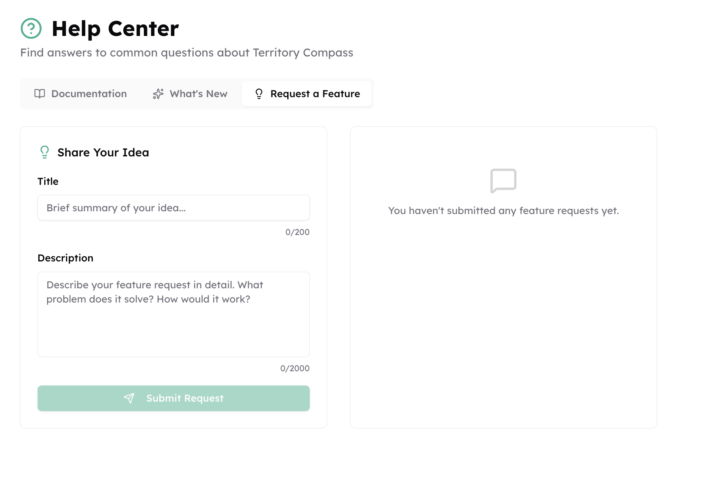

Get Claude Code to build Help from your code base & More

Per the call yesterday, here are some screenshots from my auto-generated Help, Product Updates (generated from GitHub updates and translated by AI into user speak), and product suggestions sections. Doing what users need doesn't have to be a chore; it's yet another thing that Claude Code can master for you.

skool.com/ai-developer-accelerator
