Pinned

New Year’s Sale: 69% Off (ends Friday)

For a New Year’s special, I'm dropping the price of AI Automation Society Plus by 69%. Your first month is just $29 (normally $94). If you decide to stick around, each following month is billed at the normal $94 price. But once you get inside, I don’t think you will want to leave.

3,000+ members are already finding clients, landing referrals, and building the connections that turn AI skills into income.

This deal runs today through Friday. Then it's gone.

Click here to see everything that’s included.

I’ll see you inside.

Cheers,
Nate

Pinned

🚀 New Video: Once You Know This, Building RAG Agents Becomes Easy in n8n

In this video, I break down the different ways you can handle retrieval and context in RAG systems when building AI agents in n8n. I start by explaining why chunk-based retrieval often causes hallucinations and inaccurate answers, especially when the agent is missing full context. Then I walk through three practical approaches I actually use in real systems: using filters to narrow context, using SQL queries to pass full and structured context to the agent, and using vector search when semantic matching makes sense. For each approach, I explain what it is, how it works, when it breaks down, and when it is the right tool for the job.
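
To make the three approaches concrete, here is a minimal Python sketch. It is not the n8n workflow from the video: the table name, the sample rows, and the helper functions are all hypothetical, and the bag-of-words `embed` function is only a stand-in for a real embedding model. It shows a metadata filter, a SQL query that hands the agent complete structured results, and a similarity search for fuzzy questions.

```python
import math
import sqlite3
from collections import Counter

# Hypothetical toy data: (id, customer, year, text)
ROWS = [
    (1, "acme", 2023, "Acme renewed the support contract for 12 months"),
    (2, "acme", 2024, "Acme reported a billing error on the March invoice"),
    (3, "globex", 2024, "Globex asked about refund policy for annual plans"),
]


def build_db(rows):
    """Load the toy rows into an in-memory SQLite table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE notes (id INTEGER, customer TEXT, year INTEGER, text TEXT)"
    )
    conn.executemany("INSERT INTO notes VALUES (?, ?, ?, ?)", rows)
    return conn


def filter_context(conn, customer):
    """Approach 1: a metadata filter narrows the context to one customer."""
    cur = conn.execute(
        "SELECT text FROM notes WHERE customer = ? ORDER BY id", (customer,)
    )
    return [row[0] for row in cur.fetchall()]


def sql_context(conn, year):
    """Approach 2: a SQL query returns the full structured answer, not chunks."""
    cur = conn.execute(
        "SELECT customer, COUNT(*) FROM notes WHERE year = ? "
        "GROUP BY customer ORDER BY customer",
        (year,),
    )
    return cur.fetchall()


def embed(text):
    """Stand-in embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def vector_search(rows, query, k=1):
    """Approach 3: rank rows by similarity to the query and keep the top k."""
    q = embed(query)
    ranked = sorted(rows, key=lambda row: cosine(q, embed(row[3])), reverse=True)
    return [row[3] for row in ranked[:k]]
```

A question like "how many notes per customer in 2024?" is a counting question, so `sql_context` answers it exactly, while a top-k similarity search could silently drop rows. `vector_search` fits better when the question is loosely worded and there is no clean field to filter on.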

Pinned

🏆 Weekly Wins Recap | Dec 27 – Jan 2

We wrapped up the year strong and kicked off the new one with momentum. From first sales to real-world impact builds, AIS+ members kept shipping.

Here are this week’s highlights inside AIS+ 👇

👉 @Jon Roth made his first sale with a workflow built for a 3D print shop - optimizing Shopify order processing end to end.
👉 @Maciej Wesolowski secured a $5,200 client to build a lead-gen AI chatbot for a training firm - consistency paying off again.
👉 @Ai Stromae built a transcription - NotebookLM workflow that saves a client hours of manual effort every week.
👉 @Kevonte Demery launched a live AI program for underserved students - turning automation skills into real social impact.
👉 Satyam Sharma built a mini SaaS in just 3 hours, complete with payments and a live demo - fast execution, real product.

🎥 Super Win Spotlight of the Week: @Christian Barraza | From Do-or-Die to Full-Time Builder

Christian joined AIS+ with almost nothing left in his bank account - and went all in. Within days, he restructured his offer, signed his first client, posted the win, and quickly landed a second client - all by applying what he learned and leaning into the community.

🎥 Watch his quick story 👇

Christian’s journey is proof that when you commit fully and take action, momentum can change everything - fast.

✨ Want to see more wins like these every week? Join the builders inside AI Automation Society Plus - where action, community, and consistency turn learning into real results 🚀

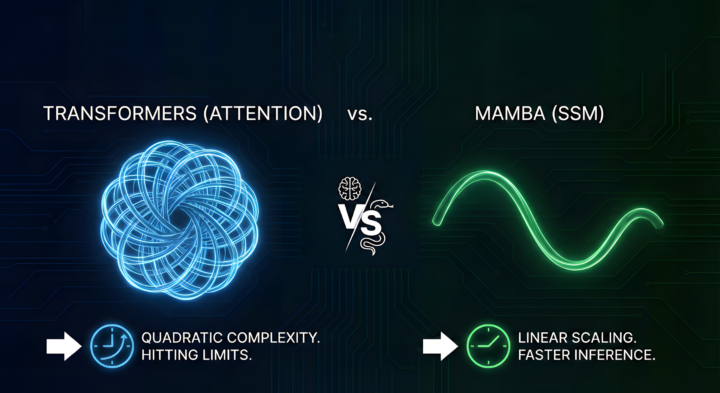

Attention is not all you need

Transformers have been the backbone of LLMs for a while… but it’s starting to feel like they’ve hit their natural limits, especially when it comes to long context and efficiency. Over the last few days, I’ve been diving deep into Mamba, a relatively new architecture based on state space models (SSMs), and honestly, it looks like a very serious contender for “what comes after Transformers.”

What is Mamba?

Mamba is a sequence modeling architecture built on structured state space models. Instead of comparing every token with every other token (like the attention mechanism in Transformers does), it keeps a compact hidden state that gets updated as the sequence progresses. As a result, compute scales linearly with sequence length rather than quadratically, the state stays a fixed size during generation instead of growing with the context, and inference on long sequences gets faster and cheaper.

This is already a significant leap in bringing AI to domains that weren't well suited to it, like edge computing and IoT. I'll be integrating Mamba into my workflow automation pipelines over the next few weeks, just to see how things work out.

And although Transformers aren't going anywhere overnight, I think we're starting to see the cracks. Mamba feels like the kind of shift that makes sense: keep the performance, lose the baggage.

Until next time.
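
To show what "a compact hidden state that gets updated as the sequence progresses" looks like, here is a minimal sketch of a plain linear state space recurrence in pure Python. It is not Mamba itself: Mamba makes the update parameters depend on the input (its "selective" mechanism) and uses an optimized scan, while this toy uses fixed, hypothetical coefficients `a`, `b`, and `c`. The point is only that the state `h` stays the same size no matter how long the sequence gets.

```python
def ssm_scan(xs, a, b, c):
    """Run a diagonal linear state space recurrence over a sequence.

    For each input x_t (a scalar), every state dimension i is updated as
        h_t[i] = a[i] * h_{t-1}[i] + b[i] * x_t
    and the output is
        y_t = sum_i c[i] * h_t[i]

    a, b, c are fixed illustrative coefficients (assumptions, not Mamba's
    learned, input-dependent parameters).
    """
    h = [0.0] * len(a)  # fixed-size state, independent of sequence length
    ys = []
    for x in xs:  # one pass over the sequence: linear in its length
        h = [ai * hi + bi * x for ai, hi, bi in zip(a, h, b)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys, h


# Usage: a one-dimensional state that decays by half at every step.
outputs, final_state = ssm_scan([1.0, 1.0, 1.0], a=[0.5], b=[1.0], c=[1.0])
```

Each step only touches the current input and the fixed-size state, whereas attention revisits every earlier token. That difference is where the linear scaling comes from.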

Welcome! Introduce yourself + share a career goal you have 🎉

Let's get to know each other! Comment below sharing where you are in the world, a career goal you have, and something you like to do for fun. 😊


skool.com/ai-automation-society

A community built to master no-code AI automations. Join to learn, discuss, and build the systems that will shape the future of work.
