Memberships

9 contributions to AI Automation Society

Production environments for automation

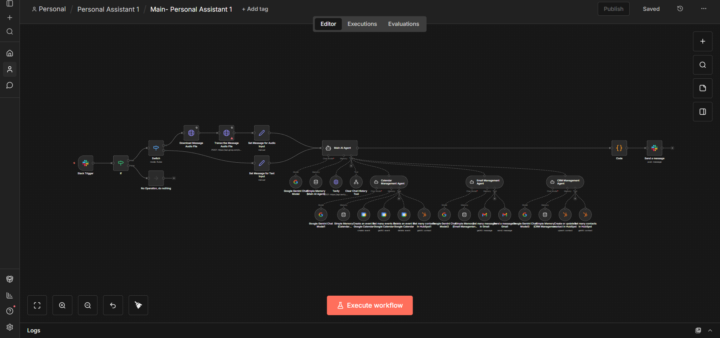

We often treat automation tools as simple scripts, but as workflows grow in complexity, the underlying infrastructure becomes just as critical as the logic itself. If the server is fragile, the automation is useless. This is the production architecture for my n8n instance on Oracle Cloud, and every design decision was made with one goal in mind: reliability. When you are running critical workflows, you can't afford a system that requires constant babysitting.

Reliability through Decoupling

The most significant upgrade was moving the state management to a dedicated PostgreSQL 16 container. By decoupling the database from the application logic using Docker Compose, I’ve eliminated the common "database locked" errors that plague SQLite setups during high-concurrency bursts. This separation ensures that even if the execution layer becomes a bottleneck, the data layer remains responsive and intact.

Security via Isolation

Exposing internal tools to the web is always a risk. Instead of opening ports on the firewall or managing complex reverse proxies, I implemented Zrok as an open-source tunneling solution. This provides a "Zero Trust" surface area: my server IP remains hidden, and I can expose only the specific webhook endpoints required for external triggers. It’s a security-first approach that removes the headache of manual SSL certificate rotation.

Maintainability and Performance

Running this on the Ampere (ARM) architecture with Ubuntu 22.04 gives me a modern, highly efficient foundation. The containerized Docker approach means updates are atomic and reversible. If a new version of n8n breaks a workflow, rolling back is a matter of changing one line in a config file, not rebuilding a server.

Future Optimizations

While this stack is solid, there is always room to optimize. My next steps include:

- Enhanced Observability: Implementing a Grafana/Prometheus stack to visualize workflow metrics and resource usage in real time.
- Automated Backups: Setting up a cron job to push encrypted PostgreSQL dumps to an external S3 bucket for disaster recovery.
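
The backup step mentioned above could look roughly like the sketch below. It is not the author's script. It assumes `pg_dump`, `gpg` (2.1 or later) and the AWS CLI are installed where the job runs, that database credentials come from `~/.pgpass` or `PGPASSWORD`, and that the database name, bucket, key prefix, and passphrase file are placeholders chosen purely for illustration.

```python
from __future__ import annotations

import subprocess
from datetime import datetime, timezone


def build_backup_commands(
    db_name: str,
    db_user: str,
    db_host: str,
    bucket: str,            # hypothetical bucket name
    passphrase_file: str,   # hypothetical path to a file holding the passphrase
    now: datetime | None = None,
) -> list[list[str]]:
    """Return the dump, encrypt, and upload commands as argv lists."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y%m%dT%H%M%SZ")
    dump_path = f"/tmp/{db_name}-{stamp}.dump"
    encrypted_path = f"{dump_path}.gpg"

    # 1. Dump the database in PostgreSQL's compressed custom format.
    dump = [
        "pg_dump", "--format=custom",
        "--host", db_host, "--username", db_user,
        "--file", dump_path, db_name,
    ]
    # 2. Encrypt the dump symmetrically so it is unreadable at rest.
    encrypt = [
        "gpg", "--batch", "--yes", "--pinentry-mode", "loopback",
        "--symmetric", "--cipher-algo", "AES256",
        "--passphrase-file", passphrase_file,
        "--output", encrypted_path, dump_path,
    ]
    # 3. Copy the encrypted file to the bucket (the "n8n/" prefix is an assumption).
    upload = [
        "aws", "s3", "cp", encrypted_path,
        f"s3://{bucket}/n8n/{db_name}-{stamp}.dump.gpg",
    ]
    return [dump, encrypt, upload]


def run_backup(commands: list[list[str]]) -> None:
    """Run each command in order and stop at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

A cron entry would then call a small wrapper that passes the result of `build_backup_commands(...)` to `run_backup`. Building the commands separately from running them keeps the naming and flags easy to inspect without touching the database.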

Handle Objections AFTER Outreach

How to Handle Objections AFTER Outreach (Most People Fumble This)

Getting replies is not the hard part. Handling replies without killing the deal is. Most people lose clients after the prospect responds, not before. Let’s break this down properly.

Objection #1: “Sounds interesting, but not right now.”

❌ What bad sellers do: “Okay no problem, let me know later 🙂” (aka: dead lead forever)

✅ What actually works: You don’t push. You clarify timing.

Reply you can copy-paste:

> Got it, makes sense.
> Just so I understand, is it a timing thing or a priority thing right now?

Why this works: You’re not selling. You’re diagnosing. If it’s timing → you set a follow-up. If it’s priority → you address value.

👏🏻 Questions for you:

1. What other objections have you faced?
2. How are you handling the "not now" objection?
3. Do you have a better method for this objection?

👋🏻 Curious to know how others handle objections.

n8n Transcript Processor That Eliminated 900 Hours Annual Data Entry 🔥

University admissions. 1,200 transcripts annually. Manual entry consuming 900 hours. Built n8n workflow. Zero manual transcription. Perfect accuracy.

THE ADMISSIONS PROBLEM:

Every incoming transcript required manual processing:

- Coordinator downloads PDF from email attachment.
- Opens admissions system.
- Types student details into database.
- Enters institution information manually.
- Transcribes all courses individually: course codes, course names, credit hours, letter grades, semester completed. 15-30 courses per transcript on average, each typed manually.
- Manual GPA verification against transcript calculation.
- Academic honors notation if present.
- Verification status determination based on transcript type.

45 minutes per transcript. 1,200 transcripts annually. 900 hours consumed.

Quality audit revealed a 14.8% error rate: course entry typos, GPA calculation mistakes, overlooked academic honors, and inconsistent verification flagging across coordinators.

THE n8n AUTOMATION:

7-node workflow with 3-branch verification routing:

- Node 1 - Gmail Trigger: Monitors transcript emails
- Node 2 - Get Email: Downloads attachment
- Node 3 - Prepare Binary: Formats document
- Node 4 - Extract Data: Pulls student info, courses, grades, GPA, honors
- Node 5 - Analyze Transcript: Calculates metrics, categorizes performance
- Node 6 - Log Database: Updates tracking
- Node 7-9 - Verification Routing: Routes to verification check OR unverified path

EXTRACTION:

- Student: name, ID, DOB, email
- Institution: name, address, registrar
- Degree: program, major, minor, dates
- Courses: code, name, credits, grade, semester, year (all courses)
- Academic: credits attempted/earned, GPA, honors
- Metadata: type (official/unofficial), date, signature

ANALYSIS:

- Total courses count
- Completion rate percentage
- GPA categorization (Exceptional/Excellent/Good/Fair)
- Academic standing (Dean's List/Good Standing/Probation)
- Grade distribution summary
- Verification evaluation (official + student ID)

VERIFICATION LOGIC:
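
As an illustration of the ANALYSIS step only, the metrics listed there could be computed along the lines of the sketch below. This is not the workflow's actual node code, and it does not cover the verification logic. The `Course` type, the function names, and above all the GPA cut-offs are assumptions, since the post names the categories but not the thresholds.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Course:
    code: str
    credits: float
    grade: str  # letter grade as extracted, e.g. "A" or "B+"


# Assumed cut-offs on a 4.0 scale; the post does not state its thresholds.
GPA_BANDS = [(3.8, "Exceptional"), (3.5, "Excellent"), (3.0, "Good")]


def categorize_gpa(gpa: float) -> str:
    """Map a GPA to one of the four category labels named in the post."""
    for floor, label in GPA_BANDS:
        if gpa >= floor:
            return label
    return "Fair"


def analyze_transcript(
    courses: list[Course],
    credits_attempted: float,
    credits_earned: float,
    gpa: float,
) -> dict:
    """Compute course count, completion rate, GPA category, grade distribution."""
    completion = (
        round(100 * credits_earned / credits_attempted, 1)
        if credits_attempted
        else 0.0
    )
    return {
        "total_courses": len(courses),
        "completion_rate_pct": completion,
        "gpa_category": categorize_gpa(gpa),
        "grade_distribution": dict(Counter(c.grade for c in courses)),
    }
```

Keeping the thresholds in one table means an admissions office could swap in its own bands without touching the rest of the logic.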

I won 1st 🥇 place in a Hackathon worth $5000 in prizes. Here's what I built.

I recently won a hackathon by building a social media content system, and I want to share a valuable insight about building systems like this. I've been teaching AI automations for a while, and I've seen beginners make the same mistake over and over: they start building before they know what they're trying to build. Besides my extensive experience with n8n automations and Notion, I believe what truly helped me win this hackathon was dedicating significant time to planning the solution. I spent 6 days building in total, but 1.5 days were just spent planning and making small tests. I used Excalidraw to draft the entire concept, including ideas and the entire data flow. Click here to see the full diagram. The final workflow was not exactly as planned but was very close. You can find me on LinkedIn. I'll be happy to connect. Happy holidays! P.S. Feel free to ask any questions in the comments.

Ready to Make 2026 Our Most Predictable Year Yet

2025 was the year of experimenting. 2026 is the year of executing. This past year I dialed in my niche, my offers, and my systems. I made mistakes, tried too many things at once, and learned what actually moves the needle. Now it’s about coming into 2026 with focus, not noise.

Here’s what I’m committed to this year:

- Building repeatable systems instead of chasing “tactics”
- Doubling down on one clear ICP and one painful problem
- Shipping more, thinking less, and letting data tell me what works

If you’re in the same boat, tired of restarting every month and ready to build something predictable, let’s make the best of 2026 together. Drop one thing you’re going all‑in on this year.
