Owned by Mark

Build and launch AI Agents & web apps in hours.

Memberships

Ai Pimp Blueprint

1.5k members • $97

OnlySam - OFM

2.5k members • Free

ALPHA OFM IA

10.2k members • Free

AI Automation Made Easy

11.9k members • Free

AI Automation Mastery

25.7k members • Free

4 contributions to Brendan's AI Community

12 Key Lessons From Building 100+ AI Agents

Just watched this talk on the "12 factors of AI agents," and it's packed with practical insights for anyone building AI systems.

1. Small, Focused Agents Work Best
Break things down into micro-agents with clear, short tasks (3-10 steps). Don't force LLMs to handle long, complex workflows in one shot. Own the structure: let the agent "propose" the next step, and let your code decide what happens next.

2. Optimize Your Prompts & Context
Every improvement in quality comes from better prompt engineering and careful control over what goes into the context window. If you want reliable output, you need to hand-craft (and constantly test) what you put in.

3. Manage State and Errors Carefully
Don't just dump everything into the agent's context. When there's an error, summarize and clarify it before sending it back in. Build stateful agents by managing state outside the LLM, just like real software.

4. Let Agents & Humans Collaborate
Great agents know when to hand off to a human: they trigger human input, notifications, approvals, or clarification at the right time.

5. Multi-Channel Is the Future
Let users interact with agents wherever they are: email, Slack, SMS, etc. Don't make them open a special chat window.

6. Frameworks Are Helpful, But You Need to Own the Core
Frameworks can save time, but for real reliability you need to own your loop, your prompts, and your control flow.

7. Find the Real Edge
The best teams don't just add "magic" AI; they engineer reliability, quality, and a clear user experience. Find the limits of what your agent can reliably do, then optimize for those.

Summary: Building reliable agents isn't about fancy tools or huge frameworks. It's about solid engineering, modular code, and owning your prompts, state, and loops. Start small, keep it focused, and iterate. If you want to dig deeper, check out the 12 Factors of AI Agents video.
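To make "the agent proposes, your code decides" (points 1, 3 and 4) concrete, here is a minimal Python sketch. It is not from the talk: the tool names (`lookup_order`, `send_email`), the approval policy and the 200-character error limit are hypothetical, and the LLM is assumed to return a proposal dict like `{"tool": ..., "args": {...}}`. State lives in a plain dataclass, errors are compacted before they go back into context, and risky tools are routed to a human.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical policy: the only tools this micro-agent may call,
# and the subset that needs a human sign-off before running.
ALLOWED_TOOLS = {"lookup_order", "send_email"}
NEEDS_APPROVAL = {"send_email"}
MAX_STEPS = 10  # keep micro-agents short (3-10 steps, per the talk)


@dataclass
class AgentState:
    """State lives in ordinary code, outside the LLM."""
    step: int = 0
    history: list = field(default_factory=list)
    done: bool = False


def compact_error(exc: Exception, limit: int = 200) -> str:
    """Summarize an error into one short line before it re-enters the context."""
    return f"{type(exc).__name__}: {exc}"[:limit]


def decide(state: AgentState, proposal: dict) -> str:
    """The LLM only proposes a step; deterministic code decides what happens."""
    if state.step >= MAX_STEPS:
        return "stop"
    tool = proposal.get("tool")
    if tool == "done":
        return "finish"
    if tool not in ALLOWED_TOOLS:
        return "reject"
    if tool in NEEDS_APPROVAL:
        return "ask_human"
    return "execute"


def run_step(state: AgentState, proposal: dict, tools: dict[str, Callable]) -> str:
    """Apply one proposed step and record a compact result in our own state."""
    decision = decide(state, proposal)
    tool = proposal.get("tool")
    if decision == "execute":
        try:
            result = tools[tool](**proposal.get("args", {}))
            state.history.append({"tool": tool, "result": result})
        except Exception as exc:
            state.history.append({"tool": tool, "error": compact_error(exc)})
    elif decision == "reject":
        state.history.append({"tool": tool, "error": "tool not allowed"})
    elif decision in ("finish", "stop"):
        state.done = True
    # "ask_human" records nothing here; a real system would pause and notify someone.
    state.step += 1
    return decision
```

The design choice is that the model never touches `AgentState` directly: whatever it proposes passes through `decide`, so step limits, allow-lists and approvals stay enforceable no matter what the prompt produces.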

Get Predictable AI Outputs with JSON Schema

If you're tired of getting random or messy results from OpenAI, this guide is for you. I walk through how to use JSON Schema directly with OpenAI's HTTP API to force the model to return exactly the fields and formats you need. No more guessing, and no more post-processing endless text.

What's inside:
- Why you should use JSON Schema for structured, stable AI responses
- How to build a schema that always returns the right fields (no missing values, no surprises)
- Setting up a workflow to process any input (like CVs from email attachments) and extract exactly the data you need
- Parsing and validating the output, so you can use it right away with zero cleanup

The end result: you get consistent, valid JSON for every request, whether you're extracting resume data, product info, or anything else. I use this setup for almost all my projects, because I want results I can trust, not unpredictable blobs of text. Check out the guide and start getting reliable data from OpenAI.
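As a rough illustration of the request and the validation step, here is a stdlib-only Python sketch against the Chat Completions endpoint using `response_format` with `type: "json_schema"` (OpenAI's Structured Outputs). It is not the guide's own code: the CV field names and the default model `gpt-4o-mini` are assumptions, and `parse_and_validate` is a deliberately shallow, hypothetical top-level check rather than a full JSON Schema validator.

```python
import json
import urllib.request

# Hypothetical CV-extraction schema; field names are illustrative only.
CV_SCHEMA = {
    "type": "object",
    "properties": {
        "full_name": {"type": "string"},
        "email": {"type": "string"},
        "years_experience": {"type": "number"},
        "skills": {"type": "array", "items": {"type": "string"}},
    },
    # Strict mode expects every property listed as required
    # and no additional properties allowed.
    "required": ["full_name", "email", "years_experience", "skills"],
    "additionalProperties": False,
}

PY_TYPES = {"string": str, "number": (int, float), "array": list}


def build_payload(cv_text: str, model: str = "gpt-4o-mini") -> dict:
    """Build a Chat Completions request body that asks for schema-conforming JSON."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "Extract the candidate's details from the CV."},
            {"role": "user", "content": cv_text},
        ],
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "cv_extraction", "strict": True, "schema": CV_SCHEMA},
        },
    }


def send(payload: dict, api_key: str) -> dict:
    """POST the payload to OpenAI's HTTP API and return the decoded JSON response."""
    req = urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def parse_and_validate(response: dict, schema: dict = CV_SCHEMA) -> dict:
    """Parse the model's JSON and run a shallow, top-level check against the schema."""
    message = response["choices"][0]["message"]
    if message.get("refusal"):
        raise ValueError(f"model refused: {message['refusal']}")
    data = json.loads(message["content"])
    missing = [key for key in schema["required"] if key not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    extra = sorted(set(data) - set(schema["properties"]))
    if extra:
        raise ValueError(f"unexpected fields: {extra}")
    for key, spec in schema["properties"].items():
        value = data[key]
        ok = isinstance(value, PY_TYPES[spec["type"]])
        if spec["type"] == "number" and isinstance(value, bool):
            ok = False  # bool is a subclass of int in Python
        if not ok:
            raise ValueError(f"{key}: expected {spec['type']}")
    return data
```

Usage would be `parse_and_validate(send(build_payload(cv_text), api_key))`. Even with strict mode, keeping a local check is cheap insurance in case you later relax the schema or switch models.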

Agent-building insights from Anthropic

Most people are building agents wrong. Here's the framework that actually works.

I just watched Barry from Anthropic break down their approach to building effective agents. Three key insights that changed how I think about agents:

📌 Don't Build Agents for Everything
The reality check:
-> Can you map out the decision tree easily? Build a workflow instead.
-> Is your budget under 10 cents per task? Stick to workflows.
-> Are errors high-stakes and hard to discover? The agent isn't ready.
When agents make sense:
-> Complex, ambiguous tasks
-> High-value outcomes that justify the cost

📌 Keep It Stupidly Simple
Agent = Model + Tools + Loop. That's it. Three components:
-> Environment (where the agent operates)
-> Tools (how the agent takes action)
-> System prompt (what the agent should do)
Everything else is optimization that comes later. Barry's team uses almost identical code for completely different agent use cases.

📌 Think Like Your Agent
The hard truth: agents only know what's in their 30-50k-token context window. The exercise that changes everything: put yourself in the agent's shoes and look at only what it sees. Agents aren't magic. They're sophisticated pattern matching on limited context.
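Here is a rough Python sketch of "Agent = Model + Tools + Loop" with the three components named above. It is not Anthropic's code: the environment, the `list_files` tool, the system prompt and the `scripted_model` stand-in for a real LLM call are all hypothetical, and tool results are appended as plain messages for simplicity (real APIs use dedicated tool-call message types). The `messages` list is exactly what the agent "sees", which is the view the "think like your agent" exercise asks you to inspect.

```python
from typing import Callable

# Environment (hypothetical): a tiny in-memory "file system" the agent acts on.
ENVIRONMENT = {"files": ["a.txt", "b.txt"]}

# System prompt (hypothetical): what the agent should do.
SYSTEM_PROMPT = "You help users inspect files. Call a tool when needed, then answer."


def list_files() -> str:
    """Tool: how the agent takes action in the environment."""
    return ", ".join(ENVIRONMENT["files"])


TOOLS: dict[str, Callable[..., str]] = {"list_files": list_files}


def run_agent(model: Callable[[list], dict], task: str, max_turns: int = 5) -> str:
    """Model + Tools + Loop: call the model, run the tool it picks, repeat."""
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": task},
    ]
    for _ in range(max_turns):
        reply = model(messages)  # expected: {"tool": name, "args": {...}} or {"final": text}
        if "final" in reply:
            return reply["final"]
        result = TOOLS[reply["tool"]](**reply.get("args", {}))
        # Everything the agent "knows" is whatever we append to this list.
        messages.append({"role": "assistant", "content": f"calling {reply['tool']}"})
        messages.append({"role": "user", "content": f"tool result: {result}"})
    return "stopped: turn limit reached"


def scripted_model(messages: list) -> dict:
    """Stand-in for a real LLM call: ask for a tool once, then answer from its result."""
    results = [m["content"] for m in messages if m["content"].startswith("tool result: ")]
    if not results:
        return {"tool": "list_files"}
    return {"final": "Files: " + results[-1].removeprefix("tool result: ")}
```

Swapping `scripted_model` for a real model client, or `TOOLS` for a different registry, leaves `run_agent` unchanged, which is the point about reusing nearly identical loop code across use cases.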

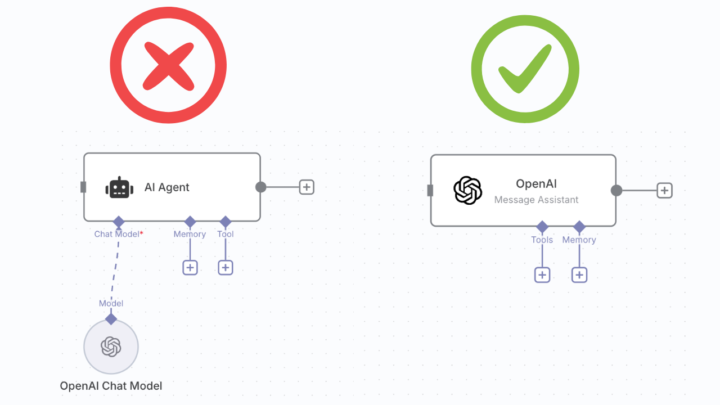

DON'T use the AI Agent node in n8n

Hi guys! I've spent a lot of time building AI agents for real clients, and I've found that agents built with the basic n8n AI Agent node are very bad at tool selection, remembering context, and following prompts.

I use only OpenAI LLMs because they offer the widest range of features, and I call them directly through OpenAI nodes or HTTP requests. After working on 3-5 cases, I noticed that simply replacing the AI Agent node with the OpenAI Assistant node (without changing prompts or tools) significantly improves agent performance. So I decided to research how the AI Agent node works internally, and I want to share what I found.

n8n is open source, so anyone can check how each node works internally. I looked at the code for the AI Agent node and explored how it operates. It uses the LangChain framework to keep the code LLM-agnostic and to add features like memory, tools, and structured output to any LLM.

Key Points:

⏺️ Universal Convenience, Performance Cost
The AI Agent node lets you swap between AI models easily, but it uses LangChain's "universal" format. This means your messages get converted back and forth, which can lose detail and miss OpenAI-specific optimizations.

⏺️ Main Bottlenecks
1. Message Format Translation: OpenAI is optimized for its own format, ChatML (roles like system, user, assistant). LangChain uses its own message objects (HumanMessage, AIMessage), which must be converted to ChatML before reaching OpenAI's API. This leads to subtle translation losses and missed improvements.
2. Context Management: OpenAI's API handles conversation history and context much more effectively than LangChain's generic method, especially for long chats. OpenAI uses smart summarization for older messages, while the AI Agent node only takes the last X messages from the memory node.

I'm not saying you should strictly avoid the AI Agent node, but you need to understand the pros and cons of each approach to use them in the right cases.
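To picture the two bottlenecks, here is a simplified Python sketch. It does not reproduce n8n's, LangChain's or OpenAI's internals: the `(type, text)` pairs are hypothetical stand-ins for LangChain's `HumanMessage`/`AIMessage` objects, and `summarize` is a hypothetical callback you would back with an LLM call. It contrasts a "last k messages" window, where older turns are simply dropped, with a variant that folds older turns into one summary message.

```python
from typing import Callable

# Simplified (type, text) pairs standing in for framework message objects;
# these are not the real LangChain classes.
TYPE_TO_ROLE = {"system": "system", "human": "user", "ai": "assistant"}


def to_chat_roles(messages: list[tuple[str, str]]) -> list[dict]:
    """Translate framework-style messages into OpenAI-style role/content dicts."""
    return [{"role": TYPE_TO_ROLE[kind], "content": text} for kind, text in messages]


def window_memory(history: list[dict], k: int) -> list[dict]:
    """Keep only the last k messages (k >= 0); everything older is simply dropped."""
    return history[max(0, len(history) - k):]


def summarized_memory(
    history: list[dict], k: int, summarize: Callable[[list[dict]], str]
) -> list[dict]:
    """Keep the last k messages (k >= 0) and fold the older ones into one summary."""
    if len(history) <= k:
        return list(history)
    cut = len(history) - k
    older, recent = history[:cut], history[cut:]
    summary = {"role": "system", "content": "Summary of earlier messages: " + summarize(older)}
    return [summary] + recent
```

With a window, a detail mentioned in turn 1 of a long chat disappears entirely once it falls outside the last k messages; with the summary variant, it survives only as well as your summarizer preserves it, which is the trade-off to weigh when choosing between the two approaches.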

@mark-shcherbakov-4001

I'm a no/low-code developer with 4 years of experience working with SMBs and well-known companies like Miro, JTI, and Rebel Fund.

Joined Dec 22, 2024