Memberships

AI SEO Mastery Pro

323 members • $197/m

Start Writing Online

19.8k members • Free

N/A

1.7k members • Free

Synthesizer Scaling

196 members • $1,700/month

YouTube Revolution

493 members • Free

Digital Marketing Blueprint

1.8k members • Free

Speak Like a Leader

139 members • Free

Community F.I.R.E. Mojo

1.6k members • Free

AI SEO Mastery with Caleb Ulku

3.2k members • $27/m

16 contributions to SEO Success Academy

Beyond the Blooper Reel: A Leader's Guide to AI Risk Management

Artificial intelligence is transforming our industry, offering unprecedented opportunities for efficiency and innovation. But for every breakthrough, there is a cautionary tale: a chatbot meltdown, a fabricated legal precedent, or a dangerous piece of health advice. As leaders, it is our responsibility to look beyond the AI blooper reel and understand the profound organizational risks these failures represent. The rapid adoption of AI is not just a technological shift; it is a change management challenge that demands a robust governance framework.

This article moves beyond the amusing anecdotes to provide a strategic framework for AI risk management. We will examine critical lessons from recent high-stakes AI failures, explore the organizational risks and implications, and outline a practical governance model for responsible AI adoption. Our goal is not to stifle innovation, but to ensure that we are harnessing the power of AI safely, ethically, and effectively.

Critical Lessons from High-Stakes Failures

The AI failures of the past year offer a masterclass in the technology's limitations. These are not isolated incidents; they are systemic patterns that reveal fundamental weaknesses in the current generation of AI tools. From a leadership perspective, these failures highlight several critical areas of concern.

Factual Unreliability remains the most pervasive issue. AI models have repeatedly demonstrated a tendency to "hallucinate," fabricating everything from legal citations to scientific research. In one study, GPT-4o fabricated nearly 20% of citations in a series of mental health literature reviews, with over 45% of the "real" citations containing errors. For organizations that rely on accuracy and trust, the implications are profound. A single, unverified AI-generated statistic can undermine a brand's credibility and expose it to legal and financial risk.

The legal profession has seen at least 671 instances of AI-generated hallucinations in court cases, including one attorney who was fined $10,000 for filing an appeal citing 21 fake cases generated by ChatGPT.
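The two citation figures compound. As a rough back-of-the-envelope sketch, treating "nearly 20%" and "over 45%" as exactly 20% and 45% (a simplifying assumption, not a figure reported by the study), only about 44% of the citations in those reviews would have been both real and error-free. The helper names below are illustrative.

```python
# Back-of-the-envelope sketch. Assumption: the post's rounded figures
# ("nearly 20%" fabricated, "over 45%" of real citations with errors)
# are treated as exactly 20% and 45%.
FABRICATED_SHARE = 0.20        # share of all citations that were fabricated
ERROR_SHARE_AMONG_REAL = 0.45  # share of real citations that contained errors


def accurate_citation_share(fabricated: float, error_among_real: float) -> float:
    """Share of all citations that are both real and error-free."""
    real = 1.0 - fabricated
    return real * (1.0 - error_among_real)


def breakdown(fabricated: float, error_among_real: float) -> dict:
    """Split all citations into fabricated, real-with-errors, and accurate."""
    real = 1.0 - fabricated
    return {
        "fabricated": fabricated,
        "real_with_errors": real * error_among_real,
        "real_and_accurate": accurate_citation_share(fabricated, error_among_real),
    }


if __name__ == "__main__":
    for label, share in breakdown(FABRICATED_SHARE, ERROR_SHARE_AMONG_REAL).items():
        print(f"{label:>18}: {share:.0%}")
```

Because the inputs are rounded, the result is an estimate of scale, not a finding from the study itself.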

1 like • Dec '25

AI, for now, is no substitute for human thinking, intelligence, and logical processing, at least not in its current infancy, and that is a limit one must accept and understand. A world where AI drives decisions is a very dangerous one: it not only hinders human growth in mindset and capability, it also traps you into being beholden to AI. Be careful out there; whoever you become beholden to, you become trapped by. When that happens, the real winners are the AI chatbots and the companies that create or own them. Use AI for efficiency, but always maintain your autonomy as a human being and use the most powerful system you have: the human brain. Nothing can match it now, or will be able to in the future, strictly in terms of innovation, creative thinking, ideation, and thought process.

Don’t Panic: Why AI Search Won’t Kill Your Most Valuable Traffic

The prevailing narrative around AI search has been one of existential threat. We’ve been told that as AI Overviews and AI Mode become the default, website traffic will inevitably decline, starved of the clicks that have fueled digital marketing for decades. However, this doomsday scenario is largely based on studies focused on informational queries: the “how-to” and “what-is” searches that AI is perfectly designed to answer directly.

But what happens when the user’s intent shifts from learning to buying? A new study focused specifically on transactional queries reveals a much more optimistic picture. This user experience (UX) research on high-involvement services like finding a doctor or dentist shows that for transactional searches, AI Mode is not a traffic killer. In fact, it’s the opposite. When users are looking to make a purchase or book a service, they don’t want a single, definitive answer from an AI. They want a curated list of options to evaluate. This fundamental difference in user behavior means that your most valuable traffic, the users who are ready to pay you, is not going away. It’s just changing the rules of engagement.

The End of ‘Winner-Takes-All’ SEO

For years, the goal of SEO has been to secure the coveted #1 ranking, a position that historically captured the lion’s share of clicks and rewards. The new study demonstrates that AI Mode fundamentally breaks this model. When searching for services, an overwhelming 89% of users clicked on more than one business. They are not looking for a single recommendation; they are using the AI-generated results to build a consideration set. On average, users in the study checked 3.7 different businesses before making a decision.

This represents a massive paradigm shift for marketing leaders. The obsessive, often resource-intensive pursuit of the top spot is no longer the only path to success. The new goal is to secure a position within the top three to five results: the consideration set. If you are in that group, you have a real chance to win the customer. This levels the playing field and creates new opportunities for brands that may have previously struggled to compete for the #1 rank.

1 like • Dec '25

Old rules are simply being rewritten or adapted to new user search behavior, but the core ethos of giving users what they seek and desire still holds. As long as you don't try to game the system, whether in old Google, new AI Google, or AI in general, it will work, and work well, for you beyond the rocky ride that search is on right now. Keep in mind that AI is still in its infancy. There is, and always will be, golden opportunity in every challenge; however, it is up to you to look at it from that angle or lens rather than from the negative and obvious side. The former is much harder to see and find, but if you can do so, this will all be a small blip in the road. Just as AI synthesizes information to empower buying decisions, one must synthesize this into the huge opportunity that it truly is and will be in the future. The post above summarizes that fact clearly.

From Optimization to Orchestration: The Evolution of Enterprise SEO

The gap between AI adoption and business impact reveals a critical truth: while 80% of organizations have piloted generative AI and 40% report deployment, only 5% have reached meaningful scale. Yet these figures miss what's happening inside enterprises: SEO leaders are being asked to guide generative engine optimization (GEO), not because they're AI experts, but because SEO has always been fundamentally about empathy.

The Three Layers of SEO Empathy

Traditional SEO required two forms of empathy. First, understanding search engines: recognizing that Google's actual goal was maximizing queries and advertising revenue, not simply rewarding quality content. Second, understanding users: removing friction so they could accomplish their goals even when platform incentives weren't aligned with user needs.

Generative engine optimization introduces a third layer: empathy for the growth-focused organizations building AI systems. Their incentives mirror Google's: maximum adoption, maximum engagement, maximum usage. Like search engines before them, AI platforms will prioritize these metrics over accuracy when necessary.

This isn't cynicism. It's pattern recognition. Algorithms built on statistical patterns cannot distinguish exceptional content from mediocre content without external signals. The "create good content" advice was always incomplete; Google rewarded backlinks and algorithmic preferences. AI providers will follow similar patterns, using engagement and citation frequency as proxies for quality.

Cross-Functional Collaboration Through Reframing

The shift from SEO to GEO is already changing how enterprise teams collaborate. Functions that previously resisted coordination suddenly engage when the conversation moves from search optimization to generative engine visibility.

Consider a practical example: a digital outreach initiative using consistent product descriptions across third-party sites to strengthen how AI systems interpret offerings. When framed as SEO, PR teams blocked the request. When repositioned as GEO strategy, those same teams immediately supported the initiative. The work remained identical; only the framing changed.
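The adoption figures in the opening sentence can be read as a funnel. A minimal sketch, assuming all three percentages share the same base of organizations and that each stage is a subset of the one before it (the post does not say so explicitly): under that reading, half of piloting organizations reached deployment, and only one in eight deployers (one in sixteen piloters) reached meaningful scale. The function names are illustrative.

```python
# Funnel sketch. Assumption: each percentage is of the same base population,
# and every organization at a later stage also passed through the earlier ones.
FUNNEL = [("piloted", 80.0), ("deployed", 40.0), ("scaled", 5.0)]


def stage_conversion(funnel):
    """Return (from_stage, to_stage, conversion_rate) for consecutive stages."""
    return [
        (prev_name, name, share / prev_share)
        for (prev_name, prev_share), (name, share) in zip(funnel, funnel[1:])
    ]


def overall_conversion(funnel):
    """Conversion rate from the first stage to the last stage."""
    return funnel[-1][1] / funnel[0][1]


if __name__ == "__main__":
    for src, dst, rate in stage_conversion(FUNNEL):
        print(f"{src} -> {dst}: {rate:.1%}")
    print(f"piloted -> scaled: {overall_conversion(FUNNEL):.2%}")
```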

1 like • Nov '25

So glad you say this; it is exactly what I have observed and pivoted into, with validated outcomes and case studies, all quantified by data to scale data-based decisions and investment ROI, both illustrated and proven (visually and with data) and explained at a base level. This is where I have observed huge, almost unlimited opportunities within the new frontier of search and AI for agencies and providers who can leverage this gap, provided you can blend conversion copy, empathy for the business that wishes to scale, and your solution as the bridge that gets them there. Blend these aspects and position them into a solution with proof and case studies. It's a win-win for companies, agencies, and vendors. The problem, however, is that this shift requires a change in thinking and adaptation to new ways: less focus on tactics and more on outcomes. That change must happen not just on the management side, where outcomes and visuals presented with an anti-sales approach support better decisions, but also on the agency and vendor side, which must shift away from the old way and approach. These two things are much harder to attain and align.

OpenAI Enters the Browser Market with ChatGPT Atlas

OpenAI has launched its first dedicated web browser, marking a significant expansion of the company's product ecosystem beyond conversational AI. Named Atlas, this browser integrates ChatGPT capabilities directly into the browsing experience, creating a unified environment where AI assistance is embedded throughout web navigation rather than accessed through separate applications or extensions.

The launch represents OpenAI's strategic move to control more of the user experience around AI-assisted information discovery. Rather than relying on partnerships or browser extensions to deliver ChatGPT functionality, the company now offers a native browsing environment built around its language models.

Platform Availability and Access

Atlas is currently available exclusively for macOS users and can be downloaded directly from OpenAI's website. The initial Mac-only release follows a pattern common among technology companies testing new products with a controlled user base before broader platform expansion. Windows and Linux versions have not been announced, though such releases would be expected if the Mac version gains traction.

The browser is built on Chromium, as evidenced by its user agent string identifying as Chrome 141. This technical foundation means Atlas benefits from the same rendering engine, security updates, and web standards compliance that power Chrome, Edge, and other Chromium-based browsers. For web developers and SEO practitioners, this means existing optimization work for Chrome will translate directly to Atlas without requiring separate consideration.

Integrated Search Functionality

One notable aspect of Atlas is its search implementation. Despite being developed by OpenAI, the browser appears to leverage Google Search for its built-in search features rather than relying exclusively on ChatGPT's web browsing capabilities. This partnership or technical integration suggests OpenAI recognizes the value of established search infrastructure for certain query types, even as it builds AI-native discovery tools.
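Because Atlas reports itself as Chrome 141, analytics or server-side code that classifies browsers by user agent will most likely group its visits with Chrome. Below is a minimal sketch of that kind of check. The sample user-agent string is illustrative, written in the standard Chrome-on-macOS format rather than captured from Atlas, and the function name is hypothetical. User-agent parsing is only a heuristic: strings can be spoofed, and Chromium's user-agent reduction freezes the minor version fields, so treat the result as a hint rather than an identity.

```python
import re
from typing import Optional

# Matches the "Chrome/<major>..." token that Chromium-based browsers include
# in their user-agent strings; captures only the major version number.
CHROME_TOKEN = re.compile(r"\bChrome/(\d+)")


def chrome_major_version(user_agent: str) -> Optional[int]:
    """Return the Chrome major version advertised in a UA string, or None."""
    match = CHROME_TOKEN.search(user_agent)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    # Illustrative Chrome-on-macOS user agent, not captured from Atlas.
    sample_ua = (
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/141.0.0.0 Safari/537.36"
    )
    print(chrome_major_version(sample_ua))
```

To a check this simple, any Chromium-based browser that sends the same token looks like Chrome, which is consistent with the post's point that Chrome-focused work carries over.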

0 likes • Oct '25

@Lane Houk Ah yes, Bill was forever obsessed with IE before its nemesis Chrome shattered those dreams, and this play here will finally win those IE war games. Strangely, it's for Mac lovers for now. I guess that is the compromise for now, based on actual user data. What's next on deck? OS games :)

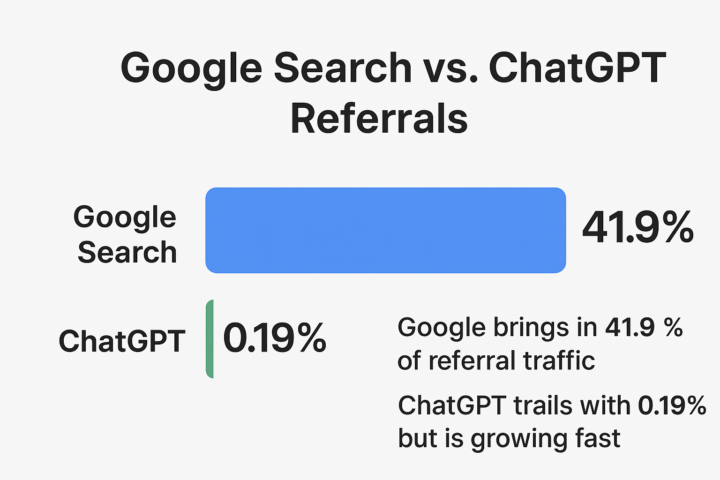

Tracking the Traffic Battle: Google Search vs. ChatGPT Referrals

Ahrefs just rolled out a new public dashboard that tracks referral traffic from two big players: Google Search and ChatGPT. It shows how much traffic websites get from each, updated every month. The first look covers three full months and over 44,000 sites using Ahrefs’ free Web Analytics tool.

Here’s the scoop on July stats: Google still brings in by far the largest share of traffic at 41.9%, while ChatGPT trails with 0.19%. But the growth rates tell an exciting story! ChatGPT’s referral traffic jumped by 5.3% that month, compared to Google’s 1.4%. While Google’s traffic remains dominant, ChatGPT is growing roughly 3.8 times faster than Google right now.

Ahrefs emphasizes that ChatGPT is creating a whole new traffic channel that didn’t even exist two years ago. That means digital marketing teams and small businesses can start paying attention to this fresh source as it evolves. The tracker focuses on sites that stayed consistent across all months, so the month-over-month growth numbers compare the same set of sites. However, bear in mind that some AI systems or browsers block referral info, which might cause some AI-driven visits, like those from ChatGPT, to slip under the radar.

Bottom line: Google Search still leads by a wide margin, but ChatGPT is carving out a place as a source of visitors to websites. This new dashboard lets you keep an eye on both players over time so you can spot trends and adjust your strategies accordingly. Whether you're running a digital marketing agency or managing a small business, this tracker gives you a handy tool to monitor where your traffic comes from and gauge the impact of AI-powered search traffic. It's a fresh way to understand the evolving landscape of web traffic sources and where opportunities might be headed next. 🚀📊 Happy tracking and adapting!
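The "roughly 3.8 times faster" figure is the ratio of the two monthly growth rates (5.3 / 1.4 ≈ 3.79). A minimal sketch of that arithmetic follows, together with a rough sense of scale. The second calculation assumes, as a simplification not stated by Ahrefs, that each growth rate applies directly to the quoted traffic share; under that assumption Google's implied gain in share points is still far larger, because faster relative growth from a 0.19% base adds little in absolute terms. The function names are illustrative.

```python
def growth_multiple(fast_rate_pct: float, slow_rate_pct: float) -> float:
    """How many times larger one growth rate is than another."""
    return fast_rate_pct / slow_rate_pct


def implied_gain_points(share_pct: float, growth_rate_pct: float) -> float:
    """Percentage-point gain if a share grew at the given relative rate.

    Simplifying assumption: the quoted growth rate applies directly to
    the quoted traffic share.
    """
    return share_pct * growth_rate_pct / 100.0


if __name__ == "__main__":
    # July figures quoted in the post.
    google_share, google_growth = 41.9, 1.4
    chatgpt_share, chatgpt_growth = 0.19, 5.3

    print(f"ChatGPT growth multiple: {growth_multiple(chatgpt_growth, google_growth):.1f}x")
    print(f"Google implied gain:  {implied_gain_points(google_share, google_growth):.3f} points")
    print(f"ChatGPT implied gain: {implied_gain_points(chatgpt_share, chatgpt_growth):.3f} points")
```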

@kurien-abraham-7820

DOJO Student | University of Experience Alum | Marketing & Sales Strategy | Partner | Investor | Innovator | Growth Marketing | 41+ Yrs. in Tech

Active 1d ago

Joined Mar 30, 2025

New York, United States
