
Memberships

VibeAcademy

288 members • Free

n8n KI Agenten

9.8k members • Free

ATEM Musik Marketing

5 members • Free

Chase AI Community

35.1k members • Free

AI Accelerator

16.9k members • Free

Hamza's Automation Incubator™

44.4k members • Free

AI Agent Developer Academy

2.4k members • Free

AI Automation Society

240.2k members • Free

AI Income Blueprint

4.8k members • Free

29 contributions to AI Automation Society

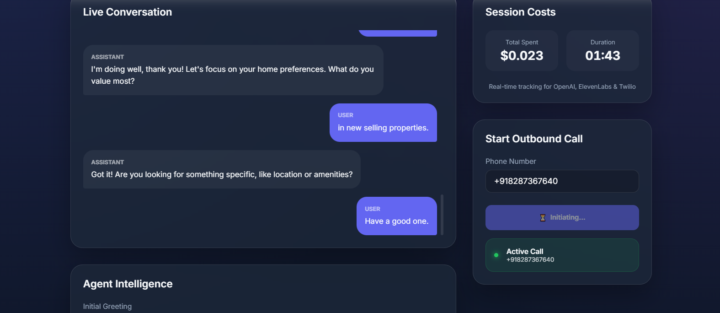

Today I built my own Vapi

Hey everyone, today I actually took a step forward and built my own Voice AI Agent platform that can make outbound calls directly. The idea came up randomly while I was on a Google Meet call with a friend, and instead of overthinking it, I decided to just try building it. In around 3 hours, I had a basic voice AI agent up and running, and it can even make outbound calls, which honestly surprised me a bit. What really stood out was the cost: a 2-minute call costs me around 2.5 cents, which feels crazy compared to tools like Retell, which were costing me around 7 cents per minute. It's still very early and definitely needs improvements and more features, but it already feels fun and promising to build. I haven't deployed it yet, but I'm planning to keep improving it.
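To put that cost comparison in per-minute terms, here is a quick back-of-the-envelope sketch in Python. It only uses the rough numbers from the post (about 2.5 cents for a 2-minute call versus about 7 cents per minute on Retell). The 1,000 minutes per month is a hypothetical volume, and any other costs are left out.

```python
# Back-of-the-envelope cost comparison using the rough figures from the post.
# All amounts are in US cents; only the quoted per-call costs are compared.

def cost_per_minute(total_cents: float, minutes: float) -> float:
    """Average cost per minute for a call that cost `total_cents` in total."""
    if minutes <= 0:
        raise ValueError("minutes must be positive")
    return total_cents / minutes


def monthly_cost_cents(cents_per_minute: float, minutes_per_month: float) -> float:
    """Projected monthly spend in cents at a given call volume."""
    return cents_per_minute * minutes_per_month


custom = cost_per_minute(2.5, 2)  # ~2.5 cents for a 2-minute call
retell = 7.0                      # ~7 cents per minute quoted for Retell
volume = 1_000                    # hypothetical monthly volume in minutes

print(f"custom: {custom:.2f} c/min, Retell: {retell:.2f} c/min")
print(f"Retell costs about {retell / custom:.1f}x as much per minute")
print(f"At {volume} min/month: ${monthly_cost_cents(custom, volume) / 100:.2f} "
      f"vs ${monthly_cost_cents(retell, volume) / 100:.2f}")
```

With these inputs the custom setup works out to 1.25 cents per minute, so Retell costs roughly 5.6 times as much per minute ($12.50 vs $70.00 at the hypothetical 1,000 minutes per month).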

Be honest: which one actually scales?

Quick gut check, no overthinking: when you're building automations for real users or clients, what actually scales better long-term?

A) One simple automation that works 95% of the time
B) A complex system that tries to handle every edge case

Curious where people really land. 👉 React with A or B, and comment why if you've been burned by one.

Lovable raises $330M, valued at $6.6B

Absurd... check out the details here. Which website-building app is your favorite?

Poll

527 members have voted

1 like • Dec '25

I've been using Cline inside VS Code for a while. It's pretty cool, and there are a lot of free models you can play around with. In general, everything I've tried beats Lovable and Bolt. Believe me, I've tried a lot of apps 😅 I still don't understand the hype around Cursor, though I know a lot of people say it's amazing. Right now I'm testing Google Antigravity and OpenAI Codex (both are semi-free). Codex is slower, but it can resolve bugs on its own like no other builder can (it runs tests, creates several versions, etc.).

I've built several apps using just VS Code extensions and mostly the free models available there. Some are better, some are worse, but I think it's the best setup, and you don't have to pay $20, $30, or $40 per month for something that doesn't always deliver.

On top of that, Lovable is only good for simple, lightweight web apps or websites. Sites you build on Lovable tend to be weak for SEO because they're React + Vite single-page apps: the page is rendered in the browser by JavaScript, so the initial HTML is basically an empty shell and Google has a harder time reading it. It's not like plain HTML or server-rendered Next.js, for example. It's gonna be very fast and look pretty good, but it's not gonna be the cream of the crop anyway. Here's one I started building with Lovable and then moved to VS Code: https://wembly.app/

You're also completely limited to building web apps. You can't build a mobile app or a desktop app with Lovable.
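A rough way to check the SEO point is to look at the raw HTML a site ships before any JavaScript runs. The Python sketch below is only a heuristic, not how Google actually indexes pages (Googlebot can execute JavaScript): it counts readable text outside `<script>`, `<style>`, and `<noscript>` tags and flags pages that have almost none. The `looks_client_rendered` name and the 200-character threshold are assumptions chosen for illustration.

```python
from html.parser import HTMLParser


class VisibleTextCounter(HTMLParser):
    """Counts trimmed text characters that sit outside script/style/noscript tags."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # > 0 while inside a tag whose text should be ignored
        self.chars = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.chars += len(data.strip())


def looks_client_rendered(html: str, min_chars: int = 200) -> bool:
    """Heuristic: True if the raw HTML carries almost no readable text."""
    counter = VisibleTextCounter()
    counter.feed(html)
    counter.close()
    return counter.chars < min_chars


# A typical Vite/React shell: the body is just an empty mount point.
spa_shell = (
    '<html><head><title>My App</title></head>'
    '<body><div id="root"></div>'
    '<script type="module" src="/src/main.tsx"></script></body></html>'
)
print(looks_client_rendered(spa_shell))  # True
```

You could paste in the HTML you get from View Source on a page; a server-rendered or static page with real text in the markup should come back `False`.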

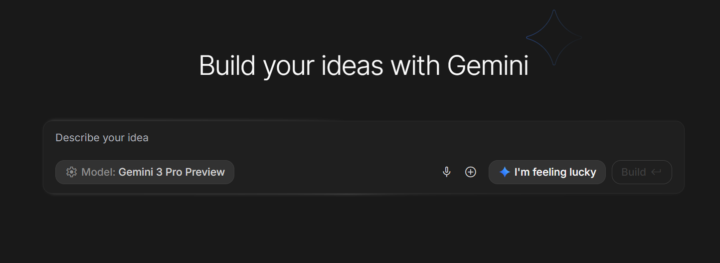

Google’s AI Studio Vibe Coding is the worst Vibe Coding tool I’ve ever seen

Google launched its Vibe Coding tool inside AI Studio a few weeks ago, and people have made thousands of videos about it. It's so popular among vibe coders because it's completely free. You don't even need to add a Gemini API key, because it's integrated automatically inside AI Studio. But here are two reasons to avoid it for now:

1. No support for environment variables. AI Studio won't let you create env vars while vibe coding. So if you're building a web app that calls APIs (e.g., OpenAI, Anthropic), you have to put the keys right inside your code, which exposes them.
2. No testing URL. AI Studio doesn't provide a testing URL, which is very important for setting up Google authentication, Supabase, etc.

But wait! If you still want to build web apps with Gemini, you can use Gemini CLI, which has a generous free tier. You can install Gemini CLI on your machine with Node.js. That means you can keep your API keys in a .env.local file while building apps, and run the app on a local dev server with a stable testing URL like localhost:5000.
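For the .env.local point, here's a minimal Python sketch of keeping a key out of your source code: a tiny parser for simple `KEY=VALUE` lines that copies them into the process environment, plus a helper that fails loudly if the key is missing. `load_env_file`, `require_key`, and the `GEMINI_API_KEY` name are illustrative assumptions (libraries like python-dotenv do this more thoroughly). Keep in mind this only keeps keys out of your code and out of git (add `.env.local` to `.gitignore`); any key sent to code running in the browser is still exposed, so paid API calls belong on a server.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env.local") -> dict[str, str]:
    """Parse simple KEY=VALUE lines into a dict and the process environment.

    Blank lines and lines starting with '#' are skipped, and surrounding
    quotes are stripped from values. Variables already set in the environment
    are NOT overwritten. Minimal sketch only: no multi-line values, escapes,
    or variable expansion.
    """
    values: dict[str, str] = {}
    env_file = Path(path)
    if not env_file.exists():
        return values
    for raw in env_file.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        values[key] = value
        os.environ.setdefault(key, value)  # keep any value that is already set
    return values


def require_key(name: str) -> str:
    """Return an environment variable or fail with a helpful message."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"{name} is not set; add it to .env.local (kept out of git)")
    return value


# Usage (hypothetical variable name):
#   load_env_file()
#   api_key = require_key("GEMINI_API_KEY")
```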

1 like • Nov '25

@Hussam Muhammad Kazim thanks for highlighting that! I've tested a bunch of vibe coding tools over the past year. I wouldn't say the AI Studio tool is that bad, but of course it shouldn't be used to build the final product. It's good for turning your idea into an app, though: you can then push the code to GitHub, open it on your machine, and turn it into a real app with a CLI/Codex, etc. My personal favorite is Grok Code Fast, which is free inside the Cline VS Code extension and can compete with major coding/reasoning models.

OpenAI Data Residency

Anyone from Europe here? Has anyone had practical experience with OpenAI's EU Data Residency? I'm currently building a tool based on Whisper/GPT-4o Mini Transcribe and want to make sure all data processing stays entirely within Europe (no US region). I'm curious whether anyone has successfully set up a project or organization with EU Data Residency enabled (e.g., through an Enterprise or Team account), and whether the audio models (Transcribe) actually work properly there. Any experiences or pitfalls you can share?


@kirill-zolygin-9313

Building Apps and Workflows

Check my work: https://dictata.app | https://wembly.app/

Speaking: English, German, Russian, French

Active 10h ago

Joined Apr 24, 2025

Berlin, Germany
