Memberships

Luke's AI Skool

2.1k members • Free

Learn Microsoft Fabric

14.5k members • Free

Fabric Dojo 织物

357 members • $30/month

65 contributions to Learn Microsoft Fabric

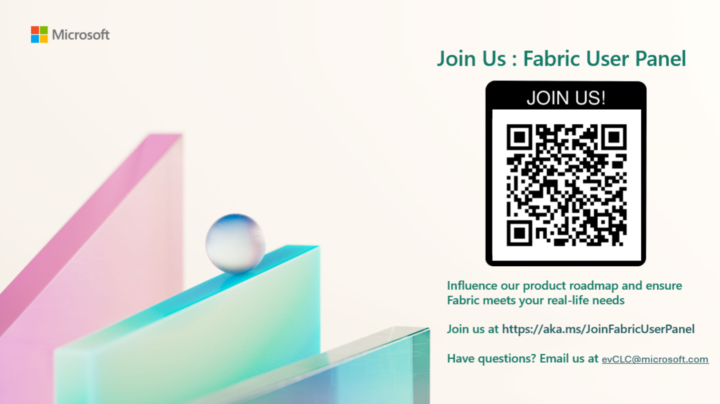

🤔 "Why did Microsoft design it like this?!" - sign up and help shape the future of Fabric 🚀

If you've ever thought, "Hmm, I wonder why they've designed this feature like this?!", this post is for you. This is a cool opportunity to help shape the future of Microsoft Fabric. You can sign up to join the Fabric User Panel via this link: aka.ms/JoinFabricUserPanel. In the Panel, you'll work directly with the Fabric Product Team and provide feedback on the product, including new features that are currently private (you'll need to sign an NDA to join the panel!). Your feedback will help shape the future strategic direction of the product. Nice. So if you want to give Microsoft feedback and help shape Fabric into a better product, sign up to the Fabric User Panel today 😀 PS: if you sign up, you can leave at any time. Let me know if you're thinking about joining, or if you have any questions about it!

'Migrating' from Power BI Premium to Fabric Capacity

Hi everyone, I know a lot of you need to move from Power BI Premium capacities to Fabric capacities because Premium capacity licensing is being sunset. This blog post by David Mitchell (Microsoft) walks you through a few different options, including a manual switch and the use of semantic-link-labs. Let me know your thoughts: is this something you're planning?

0 likes • Oct '24

I have a lot of questions about this topic. The main one is how to create the Fabric Capacity. In the image below, we have a P1 with 16 cores (I had 8 cores, but I think Microsoft increased it to 16 in the last negotiation; I need to ask IT about this). Do I need to click "Purchase" to get a Fabric capacity? Supposedly, the same P1 can be used with F64. @Will Needham, any comments on this? Thanks in advance.

November leaderboard winners! 🥇🥈🥉

A big congrats to the following top contributors in the community for the month of November: 🥇 @Anthony Kain 🥈 @Alisa Benešová 🥉 @Matt Roberts. Thank you all for your contributions to the community. You have all won a free pass to Fabric Dojo for the month of December 🥳 apart from Matt, who is already a member 🤣 I'll have to think of something for you, Matt! If you're new to the community: every month, we give away three one-month passes to Fabric Dojo to the top 3 contributors on the 30-day leaderboard! How do you climb the leaderboard? Read this. Well done to everyone 💪

MS IGNITE WEEK ANNOUNCEMENTS 🧵

There's a big Microsoft conference in Chicago this week, and there are lots of Fabric announcements, news, and updates. Let's use this thread to discuss and keep track of things! Share any news articles or announcements you come across below 🧵👇

OneLake Storage and Premium Capacity (P1)

Hello everyone! Since I couldn't find an answer to the following question, and since the online documentation is (in my opinion) unclear, I want to ask this community for help with the following point. Suppose a customer has a workspace on a Premium P1 capacity, where a developer is able to create Fabric items (e.g. Lakehouses, Warehouses, Notebooks, etc.). Let's say we need to create a Lakehouse: do we need to buy (extra) storage to manage data? The answer should be "no", because I tested it (I was able to create a Lakehouse and ingest some data), but I couldn't find anything in the online documentation regarding the storage size. With a Premium capacity, is there a default storage limit? Can it be extended? As I said, I couldn't find anything concrete online. Maybe some of you have the answer I'm looking for 😀 Thank you all!

@julio-ochoa-1058

Computer Science. Data and Analytics Manager. Certification on DP-600 Fabric

Active 8d ago

Joined Mar 23, 2024

Canada
