Memberships

AI Automation Society

202.6k members • Free

7 contributions to AI Automation Society

Automating document creation with Claude + MCP connectors — lessons from an aerospace client

Working on an interesting project right now and figured I'd share what I'm learning: helping a client automate their engineering document workflow using Claude Desktop + SharePoint/365 connectors.

The challenge: They create ~600 "data packages" per year. Each one requires pulling structured data from their ERP, plus unstructured content (text, tables, images) from certification PDFs. Each package currently takes 30-60 min, mostly manual copy-paste.

The approach: Claude Desktop with MCP connectors to SharePoint, plus a SQL connector to their ERP. The goal is a 50% time reduction.

Early findings:
- The 365 connector can pull text from PDFs but not images (yet)
- Might need Claude Code for the image extraction step
- Data normalization is the real bottleneck: some fields live in the ERP, some only in PDFs

Anyone else working on similar enterprise doc automation? Curious what approaches have worked for you.
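A quick back-of-the-envelope check on what the 50% target means, using only the figures in the post (~600 packages per year, 30-60 minutes each). The function names are illustrative, and the result is a rough range, not a measurement.

```python
# Rough estimate of annual effort for the data-package workflow.
# Inputs are the post's approximate figures: ~600 packages/year,
# 30-60 minutes each, and a 50% time-reduction target.

PACKAGES_PER_YEAR = 600
MINUTES_PER_PACKAGE = (30, 60)  # (low, high) manual effort per package
TARGET_REDUCTION = 0.5          # goal: cut time per package in half


def annual_hours(packages: int, minutes_each: float) -> float:
    """Total hours per year spent on packages at a given per-package time."""
    return packages * minutes_each / 60


def estimate(packages: int, minutes_range: tuple[float, float], reduction: float) -> dict:
    """Return (low, high) current hours per year and (low, high) hours saved."""
    low, high = minutes_range
    current = (annual_hours(packages, low), annual_hours(packages, high))
    saved = (current[0] * reduction, current[1] * reduction)
    return {"current_hours": current, "saved_hours": saved}


if __name__ == "__main__":
    print(estimate(PACKAGES_PER_YEAR, MINUTES_PER_PACKAGE, TARGET_REDUCTION))
```

Run as a script, this prints 300-600 hours of current effort per year and 150-300 hours saved if the 50% target is hit.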

My Compliance System Couldn't Read Its Own Audit Documents (Embarrassing Fix Inside) 🔥

Built a compliance tracking workflow using a community template. Deadline notifications, task assignments, status reporting: perfect automation. But compliance documents? Someone was still manually reading 40-page audit reports, extracting findings, copying requirements, and creating remediation tasks. 8-10 hours per audit. While using an automation template.

THE GAP: The template tracks compliance tasks brilliantly. It sends alerts, routes assignments, and monitors completion, and the dashboard shows everything. But creating those tasks from audit findings? Still manual document reading. Someone highlighting, typing, categorizing, creating tasks one by one.

THE REALITY: A regulatory audit arrives: 40 pages, 23 findings, 47 specific requirements, 12 different deadlines, 5 severity levels. Someone reads the entire audit, highlights findings, types them into the tracking system, categorizes them by severity, assigns owners, sets deadlines, and creates monitoring tasks. It takes a full day. Then the template takes over and automates everything beautifully. What's missing: reading the audit document itself.

THE EXTENSION: Added audit document intelligence. Audit PDF uploaded → Extract findings automatically → Identify requirements → Extract deadlines → Categorize by severity → Create tracking tasks → Assign based on rules → System ready. Complete audit processing: 15 minutes (was 8 hours).

WHAT IT EXTRACTS:
- Finding descriptions and severity levels
- Specific compliance requirements
- Deadline dates and terms
- Responsible parties mentioned
- Remediation steps suggested
- Reference standards cited
- Evidence requirements specified

THE TRANSFORMATION:
Before: Receive audit → Manual processing (8 hours) → Tasks ready next day → Team starts remediation
After: Receive audit → Upload PDF → Review extraction → Tasks ready in 15 minutes, same day → Team starts immediately

The team starts remediation immediately instead of waiting for task creation. Critical findings get attention the same day, not the next day.

THE NUMBERS: Audits processed quarterly: 4
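The back half of that pipeline (categorize by severity, create tracking tasks, assign based on rules) can be sketched roughly as follows. This is a minimal, hypothetical illustration, not the poster's implementation: it assumes an upstream extraction step (for example, an LLM reading the audit PDF) has already produced structured findings, and the `Finding`/`Task` types, the 1-5 severity scale, the keyword-to-owner rules, and the default deadline windows are all made-up assumptions.

```python
"""Hypothetical sketch: turning extracted audit findings into tracking tasks.

Assumes findings were already extracted into structured records. Field names,
severity scale, owner rules, and default deadlines are illustrative only.
"""
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumed severity scale: 1 = most critical ... 5 = least critical.
# Default remediation window (days) used when the audit cites no deadline.
DEFAULT_DAYS_BY_SEVERITY = {1: 7, 2: 14, 3: 30, 4: 60, 5: 90}

# Hypothetical keyword -> owner routing rules, checked in order.
OWNER_RULES = [
    ("access control", "it-security"),
    ("training", "hr"),
    ("record", "quality"),
]
FALLBACK_OWNER = "compliance-lead"


@dataclass
class Finding:
    description: str
    severity: int                    # 1 (critical) .. 5 (low)
    deadline: Optional[date] = None  # explicit deadline from the audit, if any


@dataclass
class Task:
    title: str
    owner: str
    due: date
    severity: int


def assign_owner(description: str) -> str:
    """Return the owner of the first rule whose keyword appears in the text."""
    text = description.lower()
    for keyword, owner in OWNER_RULES:
        if keyword in text:
            return owner
    return FALLBACK_OWNER


def build_tasks(findings: list[Finding], received: date) -> list[Task]:
    """Create one task per finding, ordered by severity, then due date."""
    tasks = []
    for f in findings:
        # Use the audit's own deadline when present, else a severity-based default.
        due = f.deadline or received + timedelta(days=DEFAULT_DAYS_BY_SEVERITY[f.severity])
        tasks.append(Task(f.description, assign_owner(f.description), due, f.severity))
    return sorted(tasks, key=lambda t: (t.severity, t.due))
```

Keeping the routing rules in plain data, rather than asking a model to assign every task, makes owner assignment easy to audit and change. A human check on the extracted findings, like the post's "Review extraction" step, would still sit before tasks go live.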

0 likes • 5h

This is super interesting. Would you be open to recording a Loom video where you walk through this a little bit? I'm also curious: what's the tech stack? What does the data flow look like? Is this a fully automated workflow, or is it something where somebody's driving Claude, say, with a bunch of connectors that have read and write access to the different systems?

AI News – December 5, 2025

The industry just pivoted from “chatbots” to autonomous AI agents: Google launched Gemini 3 with Deep Think reasoning, AWS killed the chatbot era at re:Invent with long-running “frontier agents” on new Trainium3 chips, Anthropic signed a $200M Snowflake deal for enterprise agents, Mistral dropped efficient new models, and OpenAI bought Neptune for persistent memory — while safety scores remain mediocre and GPU supply is choking. In short: 2025 is the year AI stops talking and starts working by itself.

1 like • 6h

The Neptune acquisition is interesting. I hadn't been aware of that one. Persistent memory is clearly a huge challenge and core to the user experience. I'm a little bit skeptical of anything short of more frequent local weight updates, since I think that's the most general solution, but I don't know how far away that is. Could be two years, could be 20. That said, I did a little cursory research, and Neptune seems to be monitoring and observability tooling for training runs. Am I getting that wrong? That would make a lot of sense as an acquisition: basically a core piece of infrastructure for them.

AI upgrading

AI won't replace programmers, but it will eliminate the role of the coder. The current shift is accelerating: Tools like Cursor and Windsurf are no longer just "automated assistants." They understand the entire codebase and make changes across multiple files simultaneously. Your role as a software engineer is shifting from "writing functions" (compiling code) to "architecting" (creating code) and reviewing it. If you still type every line by hand, you could be saving up to 70% of your time.

2 likes • 6h

I agree that the role is shifting, and I think thinking like an architect applies pretty broadly. I'll share two ways I've fundamentally changed my workflow.

One is that I try to spend ample time with the end user, having them explain in excruciating detail their existing workflow, their goals, the different systems they interact with, and so on, and I record it all in Fireflies as meeting recordings and transcripts. Usually one to two hours' worth of that content is sufficient to pipe into a Claude Code session, along with some additional dictated context if I have ideas or context that wasn't captured in the transcript and would be useful to someone doing the actual build. Then I let Claude Code rip and work with it until I'm satisfied.

The other is using AI for QA and QC. Automated test writing is, of course, great, and so is using plain language to ask for higher test coverage, or what I can do to make the project more robust or easier for someone to clone the repository and contribute. I've also started adding lots of bots that do automated pull request reviews. When I'm ready to submit a PR, I tell Claude Code to submit it, wait 10 minutes or however long it takes for those bots to review and comment, then review the comments and prioritize additional changes it can make. Overall, I find that this improves quality in a way that makes the project more robust.

Getting really clear on the tacit knowledge you're trying to unstick from subject matter experts and incorporate into a new piece of technology, and on the success criteria you'd want to validate it against, seems to be where the surplus attention should flow. That's hard for people from an engineering background, because they're not necessarily used to spending that much time with customers.
I come from the opposite direction: most of my career has been in discovery, launching new products, process transformation, et cetera, with technology always as a means to an end. For example, here's an open source command-line tool I built using the methodology I just described. It lets recruiters using the Greenhouse applicant tracking system massively accelerate applicant review.

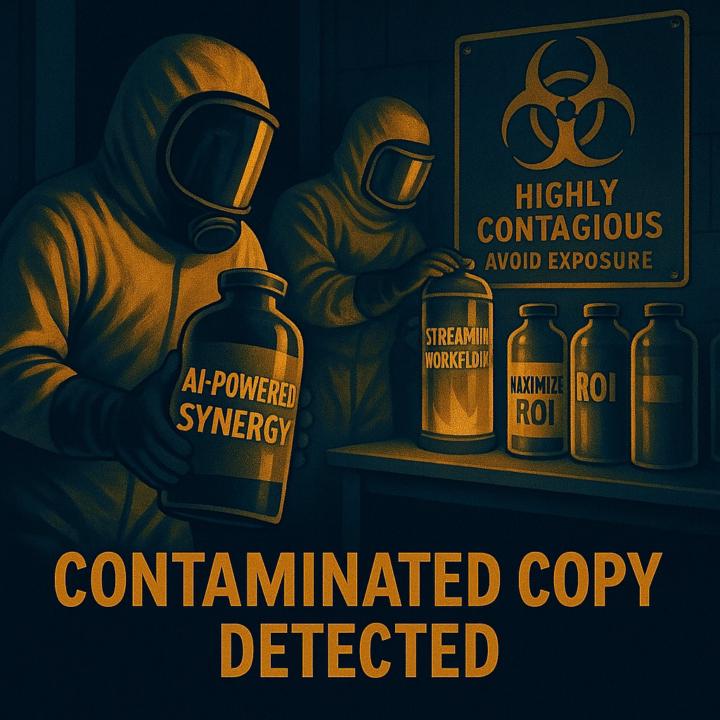

AI Agencies sound identical in 2025.

(it’s costing you leads)

Question: what do these phrases have in common? "Optimize workflows" "Enhance productivity" "Streamline operations"

Answer: they’re all putting your prospects to sleep…

Here's why: Corporate speak doesn't just fail to engage → it actively gets filtered out. Because your prospects have heard it a thousand times…

But when you use language that stands out by sounding human? You get remembered. You get responded to. You get chosen.

Let’s compare three AI agencies, for example:
Agency A: "Our AI solution reduces support ticket volume."
Agency B: "Our AI handles customer inquiries 24/7."
Agency C: "Stop the 20-ticket Mondays that make you want to throw your laptop out the window."

Which one gets their attention? The first two are forgotten in 5 seconds. The last one gets screenshotted for the business partner to see.

So here’s your move: audit your messaging. If it sounds like it came from a business textbook, rewrite it.

Instead of saying: "Optimize task management"
Say: "Stop opening your laptop at 6 AM in bed because you can't remember if you sent that critical follow-up"

Instead of saying: "Automate manual data entry"
Say: "Kill the soul-crushing spreadsheet work that keeps you at your desk until 9 PM"

Corporate language is white noise. Human language is memorable. Spice it up a little to stand out.

__

I help AI Agencies get more clients and scale to 6 figures. Follow me on LinkedIn for more content like this.

Dan 🤝

0 likes • 6h

One thing I'll add, as somebody who's been doing this for three years: step zero, before you can get into this kind of messaging, is to create compelling facts, as I like to put it. That means actually creating value and then telling those stories. When it comes to good writing, the details are what make something come alive. Instead of speculating, help someone out, even for free if you're just getting started, and record the process end to end with Fireflies, capturing digital exhaust, email interactions, and so on. That's how I draft all of my case studies and some of my social posts. I take transcripts where good outcomes were achieved or where I learned something surprising about applying the technology. For multi-week or even multi-month engagements, I get an overall dump of Fireflies transcripts, emails via MailMeteor, and so on, pipe it into Gemini 3 Pro, which has a super long context window and can generally handle that volume of data, and ask it to draft compelling stories. That raw material becomes an asset you can repurpose and point at the particular personas you're trying to reach. Don't skip step zero!

@christian-ulstrup-4556

300+ AI engagements, $2M+ EBITDA impact | Former ARPA-H AI Advisor | MIT Sloan | Outcome-based model: CEOs pay for results, not hours.

Joined Dec 5, 2025
