Memberships

Learn Microsoft Fabric

14.7k members • Free

Fabric Dojo 织物

354 members • $30/month

15 contributions to Learn Microsoft Fabric

Have you noticed the giant leaps in AI models?

Hey everyone! Have you been keeping up to date with the latest generation of AI models? If you HAVEN'T, I recommend checking them out. To be honest, they are insane: I'm talking Claude Opus 4.5, Gemini 3.0, GPT 5.1, etc. They have huge implications for how we will work in the future. I'm still figuring out the best ways to bring these models into Fabric workflows, and I'll share more of my thinking and research over the coming weeks/months. In the meantime, I'm interested: have you been keeping up to date with the LLM updates? Have you been thinking about how to integrate them into Fabric? Let me know what you've found so far!

Poll: 71 members have voted

2 likes • Nov '25

As a developer who tends to move around and has to adapt quickly to whatever language is used at each new place, I love Claude and Copilot. They are a simple on-ramp to learning a new language, and I have also used them, admittedly over many iterations, to help develop an algorithm that runs faster and more efficiently. I have also built some Copilot agents that help refactor old SQL code I have inherited: they incorporate the latest functions available in current SQL engines, enforce a naming standard, and ADD comments. It generally works great.

❓ How are you using Variable Libraries? (If at all!)

Hey community! Are you using the Variable Library in Fabric?! I'm doing some research for a future YouTube video, and it would be great to open up a bit of a discussion around how you're using them so far, considering they are now Generally Available! What use cases are you using them for? Any tips to share about how you set them up? Or perhaps you're using the REST API endpoints for variable libraries for more advanced use cases? Any experience, big or small, would be useful to know about - so please share below! 👇👇👇

Poll: 69 members have voted

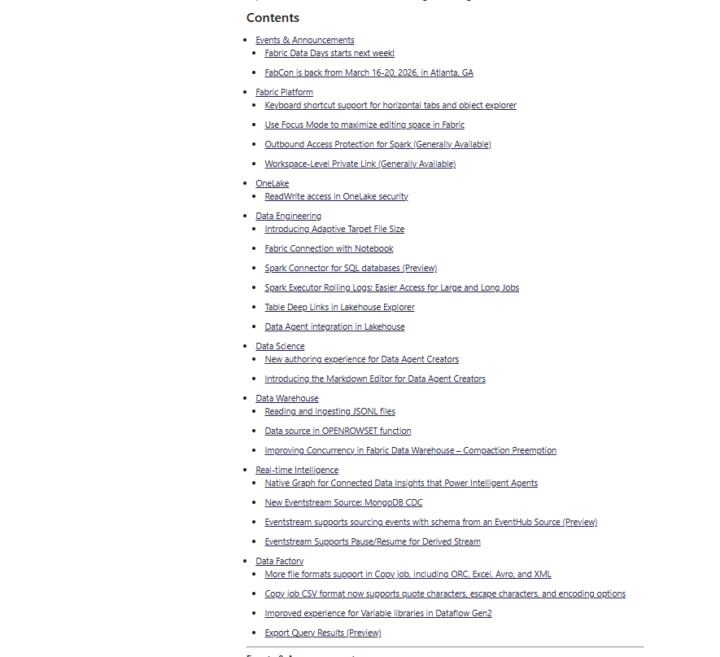

October Product Updates released

It's that time of the month again! Microsoft have recently published their list of product updates for October.

👉🔗 Read the announcement blog: https://blog.fabric.microsoft.com/en-gb/blog/fabric-october-2025feature-summary?ft=All

Of course, we'll be meeting on Sunday to discuss the announcement and product updates in detail, and I'll be giving my views on the updates. Here are the details of that call:

📞 Fabric Product Updates Oct-25
📅 Sunday, November 2nd @ 4pm - 5pm (UK time, see the event here)

Regarding the October product updates, let me know which announcements you like the look of most! I'm always interested to hear how different new features land with people!

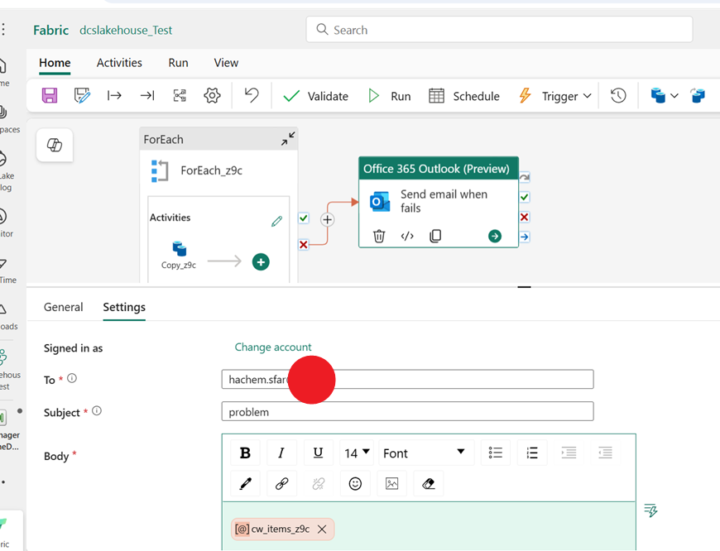

How to send detailed notification when Fabric pipelines fail?

I have multiple pipelines in Fabric. Some copy databases (like PostgreSQL) into the warehouse, while others run notebooks that collect data from APIs and Firebase into Fabric. Right now, I only receive a simple email notifying me that something went wrong, and I have to open the pipeline manually to check the error details. What I want is:

- To automatically send an email with the log file or detailed error message when a pipeline fails.
- To send this email in HTML format so it's easier to read.

How can I configure this in Fabric? Is there a built-in way? Any guidance would be greatly appreciated!

1 like • Sep '25

@Warren Malcolm Thanks for sharing that solution. I am using the Outlook activity and definitely fight with it every time I have to edit the flow, particularly because I want to send the email from a service account rather than my personal one. I will have to try out Power Automate.
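For anyone landing on this thread, here is a minimal sketch of the "detailed HTML email" part of the question, under stated assumptions: a notebook on the pipeline's failure path receives the pipeline name, run ID and error message as parameters (how you pass them in is up to you), and `build_failure_email` is a hypothetical helper, not a built-in Fabric feature. It uses only Python's standard `email` and `html` modules to build a message with a plain-text fallback and an HTML table.

```python
from email.message import EmailMessage
from html import escape


def build_failure_email(pipeline_name: str, run_id: str, error_message: str,
                        sender: str, recipient: str) -> EmailMessage:
    """Hypothetical helper: build an HTML email describing a failed pipeline run."""
    msg = EmailMessage()
    msg["Subject"] = f"Pipeline failed: {pipeline_name}"
    msg["From"] = sender  # e.g. a service account address (assumption)
    msg["To"] = recipient
    # Plain-text part for mail clients that do not render HTML
    msg.set_content(f"Pipeline {pipeline_name} (run {run_id}) failed:\n{error_message}")
    # Escape values so characters like < and & in error text cannot break the markup
    html_body = f"""<html><body>
<h2>Pipeline failed: {escape(pipeline_name)}</h2>
<table border="1" cellpadding="4">
<tr><th>Run ID</th><td>{escape(run_id)}</td></tr>
<tr><th>Error</th><td><pre>{escape(error_message)}</pre></td></tr>
</table>
</body></html>"""
    # Turns the message into multipart/alternative with the HTML as the preferred part
    msg.add_alternative(html_body, subtype="html")
    return msg


if __name__ == "__main__":
    email_msg = build_failure_email("CopyPostgres", "abc-123", "Timeout <connection>",
                                    "svc@example.com", "me@example.com")
    print(email_msg["Subject"])
```

Actually sending the message (for example with `smtplib.SMTP.send_message` against whatever mail relay your tenant allows) is left out on purpose, since that depends on your environment; the Outlook activity or Power Automate approaches discussed here are alternatives for that step.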

Submitting a Microsoft support ticket

I am wondering if anyone has some insight on this. When we were only using Power BI Premium Per User, we were able to submit a support ticket to Microsoft. Now that we have switched to an F8 capacity, when we try to submit a support ticket, we reach the step with a drop-down for selecting a support plan, and no entries show up. There is an option to create a support plan with a Premier access ID and password, but that is not something we have had to provide in the past... Any clues on what we are doing wrong or missing would be much appreciated.

@arlette-slachmuylder-2089

Data Engineer at Portland State University Foundation

Active 4d ago

Joined Apr 14, 2024