Week 4 Extension - Why This Build Goes Beyond “Just Using ChatGPT”

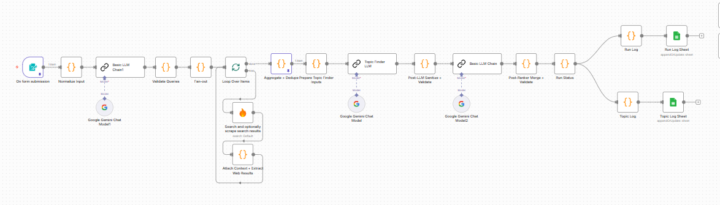

This week’s Topic Finder & Ranker classwork led me to an important realization: LLMs are excellent writers, but they shouldn’t be the ones deciding what to say. So I extended the Week 4 build into an end-to-end content engine that uses the LLM as an executor, not a decision-maker.

What’s different from normal GenAI usage?

When we use ChatGPT or any other GenAI tool directly, we usually ask:
- “Write a post on X”
- “Make it engaging”
- “Add a hook”

All the strategic decisions are implicit and unstable, so they can shift from one prompt to the next. In this system, those decisions are explicit and frozen upstream, before any writing happens.

How the system works:

1️⃣ Topic Finder / Ranker: Only topics with real “why now” signals enter the system. No random prompts.

2️⃣ Idea Classification (Editor Brain): Before writing, the system decides:
- What kind of thinking this is
- The intent (authority, education, engagement)
- How safe or sharp the angle should be

This prevents format and intent confusion later.

3️⃣ Packaging Strategy (Creative Director Brain): Format is chosen before content:
- Carousel vs. text
- Slide count
- Visual role per slide

The LLM does not “guess” the formatting.

4️⃣ Execution Engine: Only after all decisions are locked:
- The post is written
- A carousel blueprint is generated
- A PPT is auto-created via Python, and a carousel prompt is generated for non-technical users

Key learning

The value isn’t the writing. The value is the structure of judgment built around the LLM. This build helped me understand the difference between:
- Prompting better, and
- Designing systems that think consistently

Still learning and iterating. Sharing mainly to document how my understanding of automation is evolving.
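To make “decisions frozen upstream” concrete, here is a minimal Python sketch of the four stages, assuming a simple dict-based topic record. `ContentPlan`, `passes_gate`, `build_plan`, `execution_prompt`, and every field name are hypothetical; the post doesn’t show the build’s actual nodes or schemas. The idea it illustrates is that the plan is a frozen dataclass, so the LLM step can only consume decisions, never revise them.

```python
from dataclasses import dataclass

# Hypothetical schema: field names and allowed values are illustrative assumptions,
# not taken from the original build.
ALLOWED_INTENTS = {"authority", "education", "engagement"}
ALLOWED_FORMATS = {"carousel", "text"}


@dataclass(frozen=True)  # frozen: decisions cannot be changed once they are made
class ContentPlan:
    topic: str
    why_now: str
    thinking_type: str
    intent: str
    sharpness: str           # e.g. "safe" or "sharp"
    fmt: str
    slide_roles: tuple = ()  # visual role per slide; empty for text posts


def passes_gate(topic: dict) -> bool:
    """Stage 1: only topics with a non-empty 'why now' signal enter the system."""
    return bool(topic.get("why_now", "").strip())


def build_plan(topic, thinking_type, intent, sharpness, fmt, slide_roles=()):
    """Stages 2-3: classification and packaging are decided before any writing."""
    if intent not in ALLOWED_INTENTS:
        raise ValueError(f"unknown intent: {intent!r}")
    if fmt not in ALLOWED_FORMATS:
        raise ValueError(f"unknown format: {fmt!r}")
    if fmt == "carousel" and not slide_roles:
        raise ValueError("a carousel plan needs a visual role for each slide")
    return ContentPlan(topic["title"], topic["why_now"], thinking_type,
                       intent, sharpness, fmt, tuple(slide_roles))


def execution_prompt(plan: ContentPlan) -> str:
    """Stage 4: the LLM receives every decision explicitly and only executes."""
    lines = [
        f"Write a {plan.fmt} post about: {plan.topic}",
        f"Why it matters now: {plan.why_now}",
        f"Type of thinking: {plan.thinking_type}",
        f"Intent: {plan.intent}. Angle: {plan.sharpness}.",
    ]
    for i, role in enumerate(plan.slide_roles, start=1):
        lines.append(f"Slide {i} visual role: {role}")
    lines.append("Do not change the format, intent, or slide count.")
    return "\n".join(lines)


# Placeholder topics for illustration only.
topics = [
    {"title": "LLMs as executors", "why_now": "placeholder signal, e.g. a spike in discussion"},
    {"title": "Random idea", "why_now": ""},
]
admitted = [t for t in topics if passes_gate(t)]
plan = build_plan(admitted[0], "framework", "education", "sharp", "carousel",
                  ["hook", "problem", "system diagram", "takeaway"])
print(execution_prompt(plan))
```

In a real workflow the string from `execution_prompt` would be sent to the LLM; that call is left out because the post doesn’t say which model or API the build uses.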

Week 4 Reflection: Topic Finder & Ranker

This week pushed me beyond simply following steps into actually understanding how data flows through an AI automation. Replacing JavaScript nodes with LLM chains sounded straightforward initially. In practice, I learned that LLMs silently break implicit assumptions unless context, schemas, and validation are handled explicitly. I ran into multiple dead ends, such as rate limits, broken loops, and partial logs, and at first it felt like I was doing something wrong. Over time, I realized those failures were exposing gaps in how I was thinking about guardrails and observability. By the end, I was able to replace the JS ranker with an LLM-based ranker, add post-LLM validation, and build a more robust topic-finding workflow. Biggest takeaway: building AI workflows is less about prompts and more about protecting data flow and designing for failure.
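The post-LLM validation step can be sketched in Python as a guard between the LLM ranker and the rest of the workflow. This assumes the ranker is asked to return a JSON list of `{"topic", "score", "reason"}` objects with scores from 0 to 10; that schema, the function names, and the fallback behavior are illustrative assumptions, not the workflow’s actual contract. It checks the kinds of implicit assumptions an LLM can silently break: valid structure, only known topics, no duplicates, and scores in range.

```python
import json

# Hypothetical output contract for the LLM ranker (an assumption for illustration):
# a JSON list of {"topic": str, "score": number in [0, 10], "reason": str}.
REQUIRED_KEYS = {"topic", "score", "reason"}


def validate_ranking(raw: str, candidates: list[str]) -> list[dict]:
    """Parse and validate LLM ranker output; raise ValueError on any violation."""
    try:
        items = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"ranker did not return valid JSON: {exc}") from exc
    if not isinstance(items, list):
        raise ValueError("expected a JSON list")
    allowed = set(candidates)
    seen = set()
    for item in items:
        if not isinstance(item, dict) or not REQUIRED_KEYS.issubset(item):
            raise ValueError(f"missing keys in {item!r}")
        if item["topic"] not in allowed:
            # The LLM invented or rewrote a topic: output no longer matches input.
            raise ValueError(f"unknown topic: {item['topic']!r}")
        if item["topic"] in seen:
            raise ValueError(f"duplicate topic: {item['topic']!r}")
        seen.add(item["topic"])
        score = item["score"]
        if isinstance(score, bool) or not isinstance(score, (int, float)) or not 0 <= score <= 10:
            raise ValueError(f"score out of range for {item['topic']!r}: {score!r}")
    # Highest score first.
    return sorted(items, key=lambda it: it["score"], reverse=True)


def rank_with_fallback(raw: str, candidates: list[str]) -> list[dict]:
    """Design for failure: on invalid output, log and keep the input order instead of crashing."""
    try:
        return validate_ranking(raw, candidates)
    except ValueError as err:
        print(f"[ranker] validation failed, using fallback order: {err}")  # observability
        return [{"topic": t, "score": None, "reason": "fallback"} for t in candidates]


# Placeholder data for illustration only.
candidates = ["agentic workflows", "RAG evaluation"]
good = json.dumps([
    {"topic": "RAG evaluation", "score": 8, "reason": "placeholder"},
    {"topic": "agentic workflows", "score": 6.5, "reason": "placeholder"},
])
invented = json.dumps([{"topic": "a topic the LLM made up", "score": 9, "reason": "placeholder"}])
print(rank_with_fallback(good, candidates))
print(rank_with_fallback(invented, candidates))
```

Falling back to the input order is one possible choice; retrying the LLM call or routing the batch to manual review would fit the same guard.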

Week 3 Assignment (Problem Decomposition) - Reddit

Hi team, please find my Week 3 Problem Decomposition assignment below. I worked on a Reddit Reply Assistant use case. Apologies for the late submission. Link: https://docs.google.com/document/d/1aOfobSbNBuOsRIOCnd2kxBXh14fYMgX4IIXM7QuCWFE/edit?usp=sharing @Eila Qureshi, I'd appreciate a review when you have time. Thanks, and happy holidays, everyone! 💥

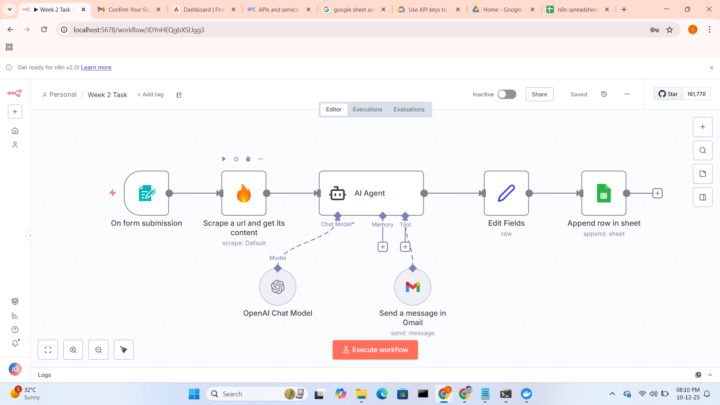

Week 2 Build Assignment

For the Week 2 assignment, I rebuilt an AI automation for B2B digital marketing and included my website: https://digitalimran.xyz

Week 3 Assignment – Problem Decomposition

This week was about slowing down before building. Decomposing my LinkedIn RAG project into minimal, testable nodes made me realize how much clarity comes from defining “done” first.
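Defining “done” first can be made literal by giving every node an acceptance check that runs right after it. The Python sketch below assumes a toy version of such a pipeline: `Node`, `run_pipeline`, `chunk`, and `retrieve` are hypothetical names, and `retrieve` uses simple word overlap as a stand-in for the embedding search a real RAG project would use. What it shows is the structure: each node is small enough to test on its own, and the pipeline stops at the first node whose “done” check fails.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Node:
    """One minimal, testable step with an explicit definition of 'done'."""
    name: str
    run: Callable[[Any], Any]
    done: Callable[[Any], bool]  # acceptance check, written before the node is built


def run_pipeline(nodes: list[Node], data: Any) -> Any:
    """Run nodes in order and stop at the first one whose 'done' check fails."""
    for node in nodes:
        data = node.run(data)
        if not node.done(data):
            raise RuntimeError(f"node {node.name!r} finished but its 'done' check failed")
    return data


def chunk(posts: list[str], size: int = 8) -> list[str]:
    """Split each post into chunks of at most `size` words."""
    chunks = []
    for post in posts:
        words = post.split()
        for i in range(0, len(words), size):
            chunks.append(" ".join(words[i:i + size]))
    return chunks


def retrieve(chunks: list[str], query: str, k: int = 2) -> list[str]:
    """Stand-in for vector search: rank chunks by word overlap with the query."""
    q = set(query.lower().split())
    return sorted(chunks, key=lambda c: len(q & set(c.lower().split())), reverse=True)[:k]


# Placeholder inputs for illustration only.
QUERY = "how to decompose an automation"
posts = [
    "Decompose every automation into small nodes and define done for each one first",
    "Coffee and a long walk before writing",
]
nodes = [
    Node("chunk", chunk, lambda cs: len(cs) > 0 and all(len(c.split()) <= 8 for c in cs)),
    Node("retrieve", lambda cs: retrieve(cs, QUERY), lambda hits: 0 < len(hits) <= 2),
]
result = run_pipeline(nodes, posts)
print(result)
```

Because each `done` check is a plain function, it can also be unit-tested separately from the node it guards.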