Memberships

Faceless Freedom

164 members • Free

DIGI DOLLARS SOCIETY

3.5k members • Free

Modern Millionaires AI Club

193 members • Free

The AI Advantage

73.8k members • Free

Faceless Video Creator Network

112 members • Free

Automate What Academy

2.5k members • Free

Thriveability for Seniors

318 members • Free

Shifting Gears Community

216 members • Free

Digital Growth Community

58.8k members • Free

5 contributions to Automate What Academy

What is this?

Someone in New Zealand told me she uses this for email automations, but she couldn't tell me where to get it. She called it the "ife automation tool".

0 likes • Oct '25

@Marcel Automates I already have email automations in both my systeme.io and Beacon's Store, but she insists it's some app her upline uses that has helped her kick her business into high gear. She doesn't even have a link. She just wants money to HELP me. I figure it would be HELPING her. She said it isn't in any app store. I figured you guys would know if it was a real app.

🚀 New Video – Voice AI Agent – Retell AI + Make

Template now in the AWA Free community! Full breakdown tutorial in AWA PRO! I show you an AI receptionist for "Mike's Plumbing" that can answer FAQs, schedule appointments directly into the calendar, and add customers to the CRM. Resource files and links are in the classroom.

⚠️ Hidden Prompt Attack

Ever imagine that shrinking an image could actually become a security risk? Yeah... me neither. 🤯 Researchers just exposed a wild vulnerability where Google's Gemini AI tools were tricked by hidden prompts embedded in images. The instructions are invisible at full size, but when the image is scaled down (as the AI pipeline does before analyzing it), the secret instructions pop out, and the AI follows them. Kinda sneaky, right?

This matters because it's a reminder that multimodal AI (text + images) opens new doors for creativity, and new doors for attackers. As we automate more workflows and rely on AI for analysis, security has to scale with it.

One practical takeaway: if you're building with AI that processes images, avoid automated image downscaling, or at the very least, preview what the model actually "sees" before trusting the output.

Have you guys started thinking about prompt injection defenses in your AI workflows? Or are most of us still trusting whatever the model spits out without question? Read the full article here: https://www.theregister.com/2025/08/21/google_gemini_image_scaling_attack/

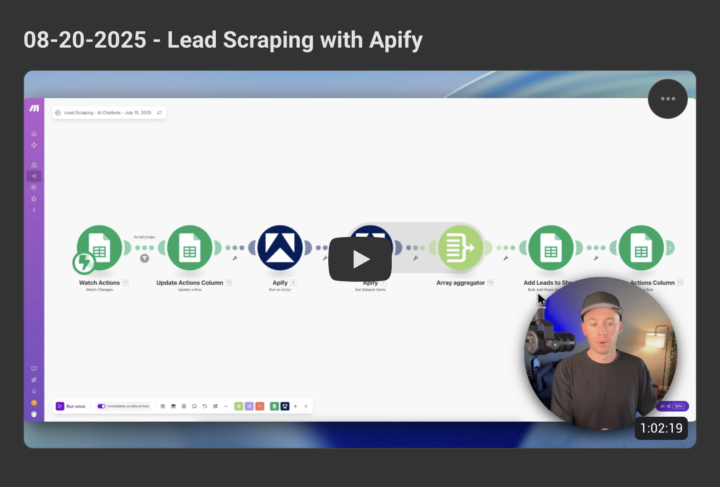
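To make the idea concrete, here is a toy sketch in plain Python. It is a simplification: the attack in the article targets the resampling algorithms (such as bilinear or bicubic) used by real image pipelines, while this example assumes plain nearest-neighbour sampling of the centre pixel of each block. The function names are hypothetical. It shows why a full-size image can look almost untouched while its downscaled version carries a completely different pattern, and why running the same downscale yourself and previewing the result is a cheap check.

```python
def downscale_nearest(img, factor):
    """Toy nearest-neighbour downscale (assumption: samples the centre pixel of each block)."""
    h, w = len(img), len(img[0])
    offset = factor // 2
    return [[img[y * factor + offset][x * factor + offset] for x in range(w // factor)]
            for y in range(h // factor)]

def embed_hidden(cover, secret, factor):
    """Hypothetical attacker step: overwrite only the pixels the downscaler will sample."""
    out = [row[:] for row in cover]
    offset = factor // 2
    for y, row in enumerate(secret):
        for x, value in enumerate(row):
            out[y * factor + offset][x * factor + offset] = value
    return out

cover = [[255] * 8 for _ in range(8)]   # all-white 8x8 grayscale "image"
secret = [[0, 255], [255, 0]]           # 2x2 pattern meant to appear only after downscaling
attack = embed_hidden(cover, secret, factor=4)

# At full size, only the two black pixels differ from the white cover image.
changed = sum(a != b for ra, rb in zip(attack, cover) for a, b in zip(ra, rb))
print(changed)                          # 2

# "Preview what the model sees": the downscaled image is exactly the hidden pattern.
print(downscale_nearest(attack, 4))     # [[0, 255], [255, 0]]
```

Only 2 of 64 pixels change, yet after downscaling the hidden pattern makes up the entire image. That is the core of the trick, and it is why the preview step matters.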

Lead Scraping with Apify – New Template

I scraped 1,500 leads for about $13! In today's LIVE Build With Me, I built a lead scraping system that uses a Google Maps Apify Actor. The Make.com blueprint file is available in AWA PRO, so check it out! The tutorial video is about 1 hour long. Join here 👉 https://www.skool.com/automate-what-academy-pro/about
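For anyone comparing lead sources, the "$13 for 1,500 leads" figure works out to under a cent per lead. A quick sketch of the arithmetic, using the rounded numbers from the post (actual Apify charges depend on the actor and plan, which the post doesn't specify):

```python
# Rounded figures from the post: about $13 for 1,500 leads.
total_cost_usd = 13.00
leads = 1500

cost_per_lead = total_cost_usd / leads
cost_per_1000 = cost_per_lead * 1000

print(f"${cost_per_lead:.4f} per lead")    # $0.0087 per lead
print(f"${cost_per_1000:.2f} per 1,000")   # $8.67 per 1,000
```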


@terry-crawford-8234

Hi. I'm Terry. Mother of 5, grandma of 6. Helping other retirees learn that you're never too old to learn something new, and boost your pension 🔥


Joined Aug 25, 2025
