Owned by Luca

AI DevOps Mastermind by Luca Berton: AI, DevOps, Kubernetes & Terraform. Access 50+ hours of courses, hands-on labs, and career-boosting mentorship!

Memberships

🇳🇱 Skool IRL: Amsterdam

71 members • Free

The AI Renaissance ✨

473 members • $33/m

AI Automation (A-Z)

121.1k members • Free

DOMENICO TURBO MARKETING AI

256 members • Free

Skoolers

179.2k members • Free

Break Into Cloud

810 members • Free

The Cloud Pirates

3 members • Free

308 contributions to AI DevOps Ansible Community

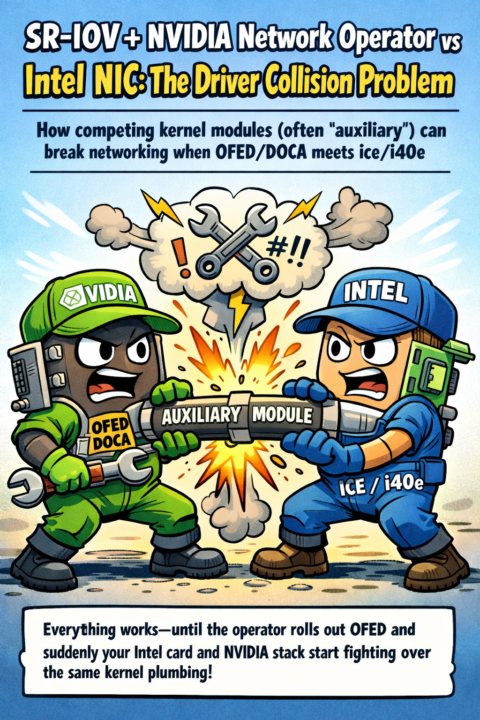

SR-IOV + NVIDIA Network Operator vs Intel NIC: The Driver Collision Problem

How competing kernel modules (often auxiliary) can break networking when OFED/DOCA meets ice/i40e.

Everything works until the operator rolls out OFED, and suddenly your Intel card and NVIDIA stack start fighting over the same kernel plumbing. Here’s what’s actually colliding, why it happens, and how to stop it fast.

This usually happens when NVIDIA Network Operator deploys a containerized OFED/DOCA driver and it collides with an Intel NIC driver stack on the same node (often ice / i40e). A very common symptom is an auxiliary.ko module conflict (only one auxiliary module can be loaded), e.g. “module auxiliary is in use by: ice” / “duplicate symbol … owned by kernel”.

Option 1 (recommended): don’t deploy MOFED/DOCA from the Network Operator

If you don’t strictly need MOFED/DOCA features on that node, omit spec.ofedDriver entirely from your NicClusterPolicy. IBM explicitly calls out that defining ofedDriver makes the operator create MOFED pods; omitting it skips them and uses OS-provided drivers instead.

Also note NVIDIA’s docs: if you use host/inbox drivers (no DOCA-OFED), you may need extra host packages (linux-generic on Ubuntu, kernel-modules-extra on RHEL-based distros) and rdma-core for inbox RDMA.

Minimal pattern:

    apiVersion: mellanox.com/v1alpha1
    kind: NicClusterPolicy
    metadata:
      name: nic-cluster-policy
    spec:
      # IMPORTANT: do NOT define ofedDriver here
      sriovDevicePlugin:
        # keep SR-IOV, device plugins, etc.

Option 2: keep DOCA-OFED/MOFED, but remove the Intel out-of-tree driver that brings its own auxiliary module

If you must run DOCA-OFED/MOFED, avoid Intel’s out-of-tree/DKMS NIC driver package that drops a competing auxiliary.ko. The conflict described by NVIDIA users is exactly this: Intel’s driver stack uses auxiliary, and MOFED tries to unload/load it and fails. In practice, this usually means reverting to the in-kernel ice/i40e driver that matches your distro kernel, or ensuring Intel’s driver package isn’t installing its own auxiliary.ko.

Option 3: make sure SR-IOV device plugin is only selecting NVIDIA/Mellanox devices (so it doesn’t “grab” Intel)
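
As a rough illustration of Option 3, the sketch below builds an SR-IOV device plugin config that selects by PCI vendor ID and then checks that no resource would match Intel. It assumes the plugin's usual JSON shape (a resourceList whose entries carry selectors such as vendors and drivers). The resource name, the nvidia.com prefix, and the helper functions are hypothetical placeholders, and treating a missing vendors selector as "matches everything" is a deliberately conservative assumption rather than a statement about the plugin's exact behavior.

```python
import json

# PCI vendor IDs: 15b3 is Mellanox (NVIDIA networking), 8086 is Intel.
MELLANOX_VENDOR_ID = "15b3"
INTEL_VENDOR_ID = "8086"


def build_plugin_config(resource_name="nvidia_sriov_vf", drivers=("mlx5_core",)):
    """Build a config that selects only Mellanox/NVIDIA devices.

    resource_name and resourcePrefix are hypothetical; use your cluster's values.
    """
    return {
        "resourceList": [
            {
                "resourcePrefix": "nvidia.com",
                "resourceName": resource_name,
                "selectors": {
                    "vendors": [MELLANOX_VENDOR_ID],
                    "drivers": list(drivers),
                },
            }
        ]
    }


def vendors_selected(config):
    """Return the set of vendor IDs selected, or None if any resource has no vendor filter."""
    selected = set()
    for resource in config.get("resourceList", []):
        vendors = resource.get("selectors", {}).get("vendors")
        if not vendors:
            # Assumption: no vendor filter is treated as "could match any vendor".
            return None
        selected.update(v.lower() for v in vendors)
    return selected


def grabs_intel(config):
    """True if the config could select Intel devices under the assumption above."""
    vendors = vendors_selected(config)
    return vendors is None or INTEL_VENDOR_ID in vendors


config = build_plugin_config()
config_json = json.dumps(config, indent=2)  # string to paste into the plugin config
```

A check like grabs_intel(config) could run in CI before the policy is applied, so a selector that is too broad is caught before it reaches a node with mixed NICs.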

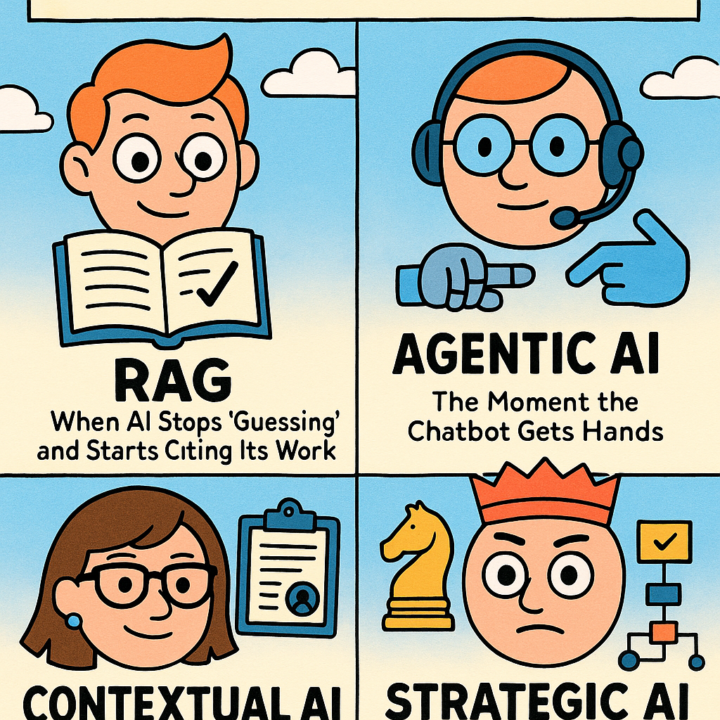

The Four Faces of Modern AI

RAG is when an AI looks up info from your documents first, then answers using that evidence. It helps reduce “made-up” answers because the model is grounded in real sources.

Agentic AI is AI that can take actions, not just chat: clicking, calling tools, or updating systems. It can plan steps and use tools to complete a task end-to-end.

Contextual AI means the AI understands your situation (who you are, what you need, and what’s happening right now). It uses the right details at the right time to be more relevant and helpful.

Strategic AI is AI that thinks longer-term, not just the next reply. It makes choices based on goals, trade-offs, and outcomes over time.

Many real systems combine all four: retrieve (RAG), understand (context), act (agent), and optimize (strategy). The goal is AI that’s not only smart, but also reliable, safe, and useful.
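
To make the "look up first, then answer" idea behind RAG concrete, here is a toy sketch. It assumes a plain word-overlap score in place of a real embedding search, and it stops at building the grounded prompt rather than calling any model. The sample documents and all function names are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to top_k documents sharing at least one word with the question."""
    question_words = tokenize(question)
    scored = []
    for index, doc in enumerate(documents):
        overlap = len(question_words & tokenize(doc))
        if overlap > 0:
            scored.append((overlap, index, doc))
    # Highest overlap first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for _, _, doc in scored[:top_k]]


def build_grounded_prompt(question, documents, top_k=2):
    """Build a prompt that asks the model to answer only from retrieved evidence."""
    evidence = retrieve(question, documents, top_k)
    if not evidence:
        return "No supporting documents found. Say you do not know.\n\nQuestion: " + question
    sources = "\n".join(f"[{n}] {doc}" for n, doc in enumerate(evidence, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


# Hypothetical mini knowledge base, for illustration only.
DOCS = [
    "Postgres handles transactional workloads.",
    "ClickHouse handles analytical queries.",
    "LoRA trains small low-rank matrices.",
]
QUESTION = "Which database handles analytical queries?"
prompt = build_grounded_prompt(QUESTION, DOCS)
```

In a real system the overlap score would typically be replaced by vector similarity, but the shape stays the same: retrieve evidence, then constrain the answer to it.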

Why Postgres + ClickHouse Are the Open Source Stack for Scaling Agentic AI

Postgres continues to serve transactional workloads, ClickHouse handles analytics, and an ecosystem has developed that brings them closer together. https://thenewstack.io/postgres-clickhouse-the-oss-stack-to-handle-agentic-ai-scale/

The AI bubble is going to burst

https://www.dw.com/en/ai-bubble-about-to-pop-as-returns-on-investment-fall-short-chatgpt-microsoft-nvidia-grok-elon-musk/a-74636881

Warren Buffett is holding cash; he is waiting for a crash. https://www.gobankingrates.com/investing/strategy/why-warren-buffett-holds-more-cash-than-fed-signals-about-market/

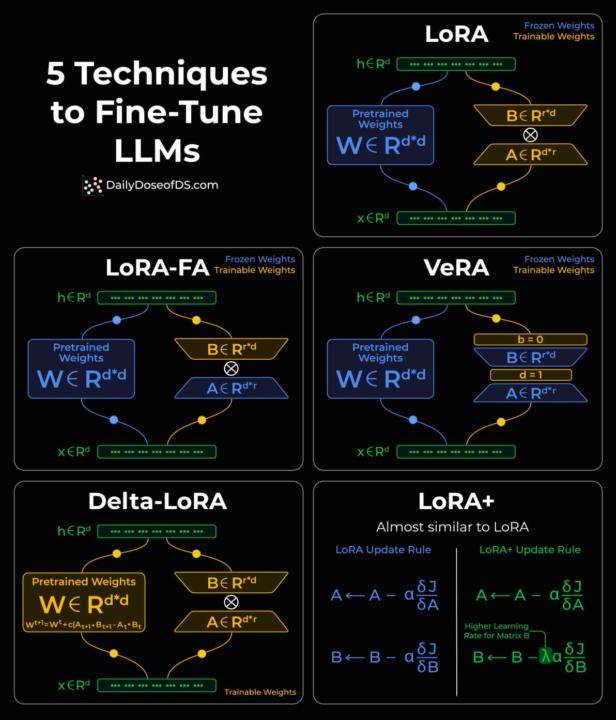

5 Techniques to Fine-Tune LLMs

LLMs are very large, so instead of updating all their weights, parameter-efficient fine-tuning (PEFT) trains only a small fraction of the parameters. Methods like LoRA, QLoRA, prefix/prompt tuning, and adapters add small trainable components (low-rank updates, learned prefix vectors, or thin extra layers), while BitFit trains only the bias terms; in each case the bulk of the model stays frozen. This saves memory and compute, makes training feasible on more modest GPUs, and makes it easy to reuse one base model for many different tasks.
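
To see why a low-rank update is so much cheaper than full fine-tuning, here is a minimal pure-Python sketch of the LoRA idea. It assumes the common formulation in which a frozen weight matrix W gets an added update (alpha / rank) * B * A, with B initialized to zero so training starts from the base model's behavior. The function names and the example sizes are illustrative, not taken from any particular library.

```python
def full_param_count(d_out, d_in):
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_param_count(d_out, d_in, rank):
    """Trainable parameters for LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x); W stays frozen, only A and B train."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, update)]


# Parameter savings for one illustrative 4096 x 4096 layer at rank 8.
full = full_param_count(4096, 4096)       # 16,777,216
lora = lora_param_count(4096, 4096, 8)    # 65,536

# Tiny forward pass: with B at zero, the output equals the frozen base output.
W = [[1, 0], [0, 1]]
A = [[1, 1]]          # rank 1, shape 1 x 2
B_zero = [[0], [0]]   # shape 2 x 1, zero-initialized
x = [2, 3]
start_output = lora_forward(W, A, B_zero, x, alpha=1, rank=1)
trained_output = lora_forward(W, A, [[1], [0]], x, alpha=1, rank=1)
```

For that illustrative layer the trainable parameter count drops to 1/256 of the full matrix, which is where most of the memory savings come from.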

Active 12d ago

Joined Oct 14, 2024