Memberships

HYROS Ads Hall Of Justice

4.6k members • Free

Data Alchemy

38k members • Free

Data Freelancer

124 members • Free

Coding the Future With AI

1.3k members • Free

79 contributions to Data Alchemy

Finally completed the Python course at Kaggle

https://www.kaggle.com/learn/certification/jimjones26/python

RAG-based LLM Chatbot: Problems

Hello all! I am using a Mistral model to build a chatbot assistant, and I'm having problems with the accuracy of its responses. Sometimes it doesn't answer from the context retrieved from the documents and instead gives information from outside the RAG pipeline, and it stops following the prompt guidelines after 5 queries. It's really frustrating. I've tried changing the prompt style and even tried prompt chaining, but the quality of the responses is still very low. What's the solution for this? Have you faced a similar problem?

What are you learning right now? Let me know!

Hey everyone! As we’re heading towards the final month of 2024, I’ve been reflecting on what a whirlwind the past few months have been. Q3 and Q4 have been incredibly busy: between running Datalumina, managing client projects, seeing amazing growth in Data Freelancer, shipping the GenAI launchpad, moving into a new office, and onboarding the first customers for our new SaaS product, it’s been a ride. 🚀

On top of that, keeping up with the YouTube channel has been both a challenge and a joy. Honestly, it’s one of my favorite parts of what I do: helping you all learn, grow, and tackle data challenges with confidence. Seeing your feedback and progress keeps me motivated to keep creating.

Now I’m starting to map out my content plans for 2025, and I’d love to hear from YOU. What are you currently learning? What topics would you like me to cover next year? 🎥 Would you like to see more LLM-based tutorials? Deeper dives into machine learning workflows? Maybe even some freelancing tips for data professionals? Or something else entirely?

Let me know in the comments: what are you most excited to learn in 2025? This is your chance to shape the direction of the content we create together.

Thanks for being part of this community! 🙏🏻

Dave

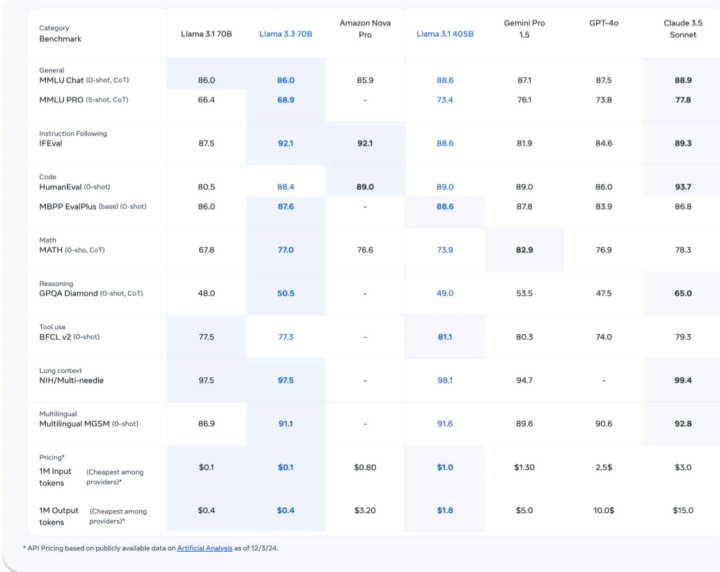

Llama 3.3 70B FTW

"As we continue to explore new post-training techniques, today we're releasing Llama 3.3, a new open source model that delivers leading performance and quality across text-based use cases such as synthetic data generation at a fraction of the inference cost. The models are available now on llama.com and on Hugging Face, and soon through our broad ecosystem of partner platforms. Download from Meta: https://go.fb.me/vy205y. Download on Hugging Face: https://go.fb.me/y5rcam. Model card: https://go.fb.me/eezbpw. The improvements in Llama 3.3 were driven by a new alignment process and progress in online RL techniques, among other post-training improvements. We're excited to release this model as part of our ongoing commitment to open source innovation, ensuring that the latest advancements in generative AI are accessible to everyone."

1 like • Dec '24

As a follow-up: in the chat where I'm using Llama 3.3 70B, the model seems to fall off the rails after ~8,000 tokens. I wonder if the provider I'm using has limited the context window? I really wish I had the money to set up a local dev machine that could run 70B-parameter models at a decent speed, so I could really test the crap out of them without racking up API fees.

Is Windsurf the Cursor Killer?

Windsurf by Codeium is a new code editor with a built-in AI assistant. From Codeium's description of its Cascade feature: "Cascade allows us to expose a new paradigm in the world of coding assistants: AI Flows. A next-gen evolution of the traditional Chat panel, Cascade is your agentic chatbot that can collaborate with you like never before, carrying out tasks with real-time awareness of your prior actions."

1 like • Dec '24

I have been using it for almost two weeks now, and I think it is better than Cursor. The only thing I miss is the .cursorrules file, and frankly, I don't let the agent in either product run because I don't get good results; I prefer to use the chat feature. Windsurf seems to do better at answering questions within the contextual confines of my codebase, although I still have to watch the predictive autocomplete within a file because sometimes it goes off the rails.


@jimmy-jones-8626

24-year veteran of the web and software industries.

Active 5d ago

Joined Aug 5, 2023

INTJ

Pagosa Springs, CO