
Memberships

The AI Advantage

70.5k members • Free

45 contributions to The AI Advantage

🔄 From One-Off Prompts to Habitual AI Use

Many people believe they are using AI because they have tried it. A prompt here, a draft there, an occasional experiment when time allows. But trying AI is not the same as integrating it. Real value does not come from one-off interactions. It comes from habits.

AI delivers its greatest impact not when it is impressive, but when it is ordinary: when it becomes part of how we think, plan, and decide, rather than something we remember to use only when things get difficult.

------------- Context: Why AI Often Stays Occasional -------------

Most AI use begins with curiosity. We explore a tool, test a few prompts, and are often impressed by the results. But after that initial phase, usage becomes irregular. Days or weeks pass without opening the tool again. Each return feels like starting from scratch.

This pattern is understandable. Without clear integration into existing routines, AI remains optional. It competes with habits that are already established and comfortable. When time is tight, optional tools are the first to be skipped.

Organizations unintentionally reinforce this pattern by framing AI as an add-on: something extra to try, rather than something embedded in how work already happens. As a result, AI remains novel, but not essential.

The gap between potential and impact often lives right here. It is not in what AI can do, but in how consistently we invite it into our workflows.

------------- Insight 1: One-Off Use Creates Familiarity Without Fluency -------------

Trying AI occasionally builds awareness, but it does not build intuition. Each interaction feels new. We forget what worked last time. We rephrase similar prompts repeatedly. Learning resets instead of compounding.

Fluency requires repetition. We become comfortable with AI the same way we become comfortable with any tool, language, or process: through use in similar contexts over time. Without that repetition, AI remains impressive but unreliable.

This is why many people describe AI as inconsistent. In reality, their usage is inconsistent. Without patterns, there is no baseline to learn from.


Also, another important question for Igor and the support team: if liberal arts universities wanted to implement AI in their curricula, what is the best way to do it? How could AI help with music, for example? I have seen some beautiful transformations of classical music into modern applications online, and of course there are infinite ways of transforming it. I listen, but I can’t figure out how it is done: how do you create the “backbone” and attach the variations?

The Opportunity Isn’t the Hard Part

Sometimes you get exactly what you asked for, and instead of excitement, you feel the pressure. Because once the opportunity shows up, there’s no one else to wait on. No one else to blame. It’s on you.

That’s the part most people don’t fully understand: opportunity doesn’t just require action; it requires capacity. Discipline. Decision-making. Follow-through. Responsibility.

So don’t just focus on getting the opportunity. Focus on becoming the person who can execute on it, keep it, and continue to build on it once it arrives.

Question: Where do you need to increase your capacity right now: skills, systems, or standards?

The Secret to Getting 10x More Relevant Results in ChatGPT

In this video, I show you every way to customize ChatGPT as of October 2025. This includes personalization options for both the free and paid plans, so no matter how you use ChatGPT, this video will teach you how to set it up to get the best results!

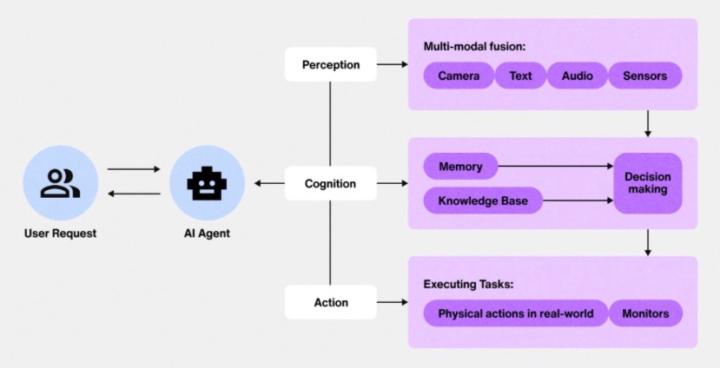

🤝 Trust Is the Missing Layer in Agentic AI

We are not limited by what AI agents can do. We are limited by what we trust them to do. As conversations accelerate around agentic AI, one truth keeps surfacing beneath the hype: capability is no longer the bottleneck; confidence is.

------------- Context: Capability Has Outpaced Comfort -------------

Over the past year, the narrative around AI has shifted from assistance to action. We are no longer just asking AI to help us write, summarize, or brainstorm. We are asking it to decide, route, trigger, purchase, schedule, and execute. AI agents promise workflows that move on their own, across tools and systems, with minimal human input.

On paper, this is thrilling. In practice, it creates a quiet tension. Many teams experiment with agents in contained environments but hesitate to let them operate in real-world conditions. The technology is not insufficient. The human systems around it are not ready. The moment an agent moves from suggestion to execution, trust becomes the central question.

We see this play out in subtle ways. Agents are built, then wrapped in excessive approval steps. Automations exist but are rarely turned on. Teams talk about scale while still manually double-checking everything. These are not failures. They are signals that trust has not yet been earned.

The mistake is assuming that trust should come automatically once the technology works. In reality, trust is not a technical feature. It is a human layer that must be intentionally designed, practiced, and reinforced.

------------- Insight 1: Trust Is Infrastructure, Not Sentiment -------------

We often talk about trust as if it were an emotion, something people either have or do not. In AI systems, trust functions more like infrastructure. It is built through visibility, predictability, and recoverability. Humans trust systems when they can understand what is happening, anticipate outcomes, and intervene when something goes wrong. When those conditions are missing, even highly capable systems feel risky. This is why opaque automation creates anxiety, while imperfect but understandable systems feel usable.


Thank you, Igor. This is very insightful, and I am thinking deeply about what you said. Would that mean it is risky for an engineer to provide remote automation for a process they cannot observe and control closely? Because of communication lapses, I think it is difficult to do rigorous work if you are not “there” to observe all the subtle changes. Maybe I am being a “Chicken Little” in this case, but this is how I see things now.

🧠 The Hidden Cost of Overthinking AI Instead of Using It

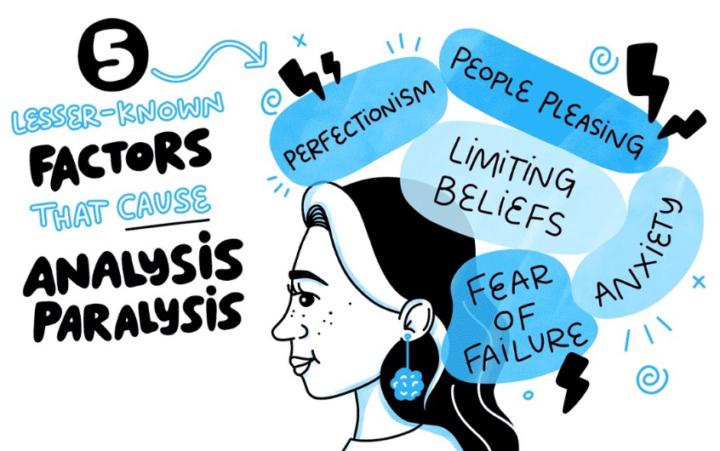

One of the most overlooked barriers to AI adoption is not fear, skepticism, or lack of access. It is overthinking: the habit of analyzing, preparing, and evaluating AI endlessly while rarely engaging with it in practice. It feels responsible, even intelligent, but over time it quietly stalls learning and erodes confidence.

------------- Context: When Preparation Replaces Progress -------------

In many teams and organizations, AI is talked about constantly. Articles are shared, tools are compared, use cases are debated, and risks are examined from every angle. On the surface, this looks like thoughtful adoption. Underneath, it often masks a deeper hesitation to begin.

Overthinking AI is socially acceptable. It sounds prudent to say we are still researching, still learning, still waiting for clarity. There is safety in staying theoretical. As long as AI remains an idea rather than a practice, we are not exposed to mistakes, limitations, or uncertainty.

At an individual level, this shows up as consuming content without experimenting: watching demos instead of trying workflows, refining prompts in our heads instead of testing them in context. We convince ourselves we are getting ready, when in reality we are standing still.

The cost of this pattern is subtle. Nothing breaks. No failure occurs. But learning never fully starts, and without practice, confidence has nowhere to grow.

------------- Insight 1: Thinking Feels Safer Than Acting -------------

Thinking gives us the illusion of control. When we analyze AI from a distance, we remain in familiar territory. We can evaluate risks, compare options, and imagine outcomes without putting ourselves on the line.

Using AI, by contrast, introduces exposure. The output might be wrong. The interaction might feel awkward. We might not know how to respond. These moments challenge our sense of competence, especially in environments where expertise is valued.

Overthinking becomes a way to protect identity. As long as we are still “learning about AI,” we cannot be judged on how well we use it. The problem is that this protection comes at a price: we trade short-term comfort for long-term capability.


@iris-florea-9749

I graduated from the AI bootcamp, and I want to use AI to support engineering projects, focusing on manufacturing operations.


Joined Nov 6, 2025

INTJ

USA, Eastern Pennsylvania.
