Memberships

AI Automation Society

208.5k members • Free

5 contributions to AI Automation Society

🚀New Video: AI Automation Isn’t Hard. It’s Misunderstood.

In the last 2 years, the AI industry has completely blown up, which has caused a lot of misinformation to be spread. People think AI Automation is hard, but it's actually misunderstood. So if you want to make money with AI Automation by selling services to businesses, or implementing systems into your own... I'm gonna show you exactly how in this video. Hope you enjoy!

1 like • Oct 20

I don't know of another tool with the power of n8n that you can run locally or on your own server to build agentic solutions. Half the battle is learning how to orchestrate agents to do what you want and getting really good at the types of prompts agents need to be consistent. The other half is deciding when to build an agentic team with an orchestrator versus a sequential agentic team, where the agents don't decide the order of the work, they just do the work. There are certainly others like UiPath and IBM Orchestrate, but they are not entry-level tools that are affordable to most people. I tried SmithOS in the past and found it very limited.
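To make the orchestrator versus sequential distinction concrete, here is a minimal sketch in plain Python rather than n8n nodes. The agent functions, `choose_next`, and the "done" signal are all hypothetical stand-ins for illustration; in a real workflow each agent would be an LLM call and the orchestrator's choice would come from a model.

```python
from typing import Callable, Dict, List

Agent = Callable[[str], str]


def run_sequential(agents: List[Agent], task: str) -> str:
    """Sequential team: every agent runs once, in the order listed."""
    result = task
    for agent in agents:
        result = agent(result)
    return result


def run_orchestrated(
    agents: Dict[str, Agent],
    choose_next: Callable[[str, int], str],
    task: str,
    max_steps: int = 5,
) -> str:
    """Orchestrated team: choose_next names the next agent, or "done" to stop."""
    result = task
    for step in range(max_steps):
        name = choose_next(result, step)
        if name == "done":
            break
        result = agents[name](result)
    return result


# Stand-in agents (assumptions): real ones would call a model.
def researcher(text: str) -> str:
    return text + " [researched]"


def editor(text: str) -> str:
    return text + " [edited]"


def choose_next(text: str, step: int) -> str:
    """Hypothetical orchestrator policy based on what is still missing."""
    if "[researched]" not in text:
        return "researcher"
    if "[edited]" not in text:
        return "editor"
    return "done"


sequential_result = run_sequential([researcher, editor], "topic")
orchestrated_result = run_orchestrated(
    {"researcher": researcher, "editor": editor}, choose_next, "topic"
)
```

The sequential version is predictable but rigid. The orchestrated version can skip or repeat agents, which is also why it needs a hard `max_steps` cap.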

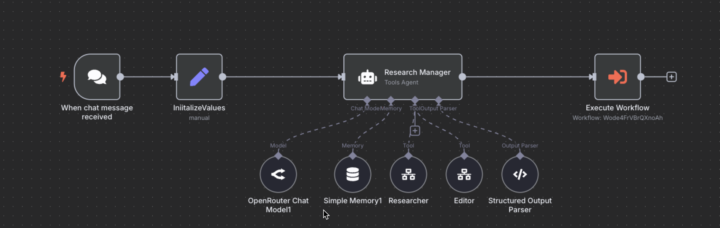

Getting a tool Agent to respect review/judge iterations

Hey guys, I've got a small workflow where a manager (tool agent) directs two other agents: one is a researcher and one is an editor. The idea is that the user provides a topic, the researcher researches the topic and provides content and citations, and the editor reviews that and provides feedback on what could be improved. This is working quite well except for the number of editorial reviews. I want the manager to allow up to two rounds of editorial review, but sometimes it allows 5 or 10 and I hit a limit I created in the model.

I know I could do an external loop and add a counter, but that somewhat defeats the purpose of a manager agent directing the "team" of agents. I have tried adjusting my prompt, but it's not consistent. Here is a shot of the workflow and the manager's prompt. The initializeValues set node sets the property edit_round to 0, but I don't think the manager agent is really using that to count. My other idea is to create a "round counter" tool it can use instead, which will increment a counter using some simple persistence, but I'm sure this has been solved before. I am using 4o-mini as the model for the manager agent, so I may try a more powerful model and see if it can manage the counter.

You are a highly organized Research Manager. Your objective is to guide a research process from initial topic to final output, ensuring quality through iterative editing, with a **strict maximum of 2 revision rounds**.

todays date : {{ $now }}

Available Tools:
- researcher(query: string): Use this tool to perform initial research on a topic OR to revise previous research based on feedback. The query should be the topic or the revision instructions. **When calling the researcher, ensure the 'query' string includes instructions on desired source types (Industry Participants like executives, regulators, independent analysts such as Forrester, Gartner) and content types (statistics, quotes, key phrases).**

Expected Output Structure: An array containing one object. That object has a property named 'output' which contains the structured research object (with research_text and references).
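The "round counter" tool idea from the post could look roughly like the sketch below. This is plain Python, not n8n's API: the class name, the `request_round` method, and the response fields are all assumptions for illustration. The point is that the limit lives in the tool's state, so the manager no longer has to count on its own.

```python
class RoundCounter:
    """Hypothetical tool the manager calls before each editor review."""

    def __init__(self, max_rounds: int = 2) -> None:
        self.max_rounds = max_rounds
        self.rounds_used = 0

    def request_round(self) -> dict:
        """Approve another review round, or refuse once the limit is hit."""
        if self.rounds_used >= self.max_rounds:
            return {
                "allowed": False,
                "rounds_used": self.rounds_used,
                "message": "Review limit reached. Return the latest research as final.",
            }
        self.rounds_used += 1
        return {
            "allowed": True,
            "rounds_used": self.rounds_used,
            "message": f"Round {self.rounds_used} of {self.max_rounds} approved.",
        }


# Simulate a manager that keeps asking for reviews past the limit.
counter = RoundCounter(max_rounds=2)
decisions = [counter.request_round()["allowed"] for _ in range(4)]
```

Here `decisions` ends up as two approvals followed by refusals. The manager prompt would still need an instruction to call the tool before every editor call and to stop when `allowed` is false, so a weak model could still ignore it.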

1 like • May 14

Thanks Valentin. I know I can do that, and I have when not using an agentic "manager" tool agent, but that requires an external loop. The goal here is to test whether the manager can manage the loop itself, so I'm not adding nodes to manage the loop in this case. The solution feels to me like either a more powerful model or adding a "tool" that the tool agent can use to manage the count. This was my non-"agentic" version that does what you were suggesting. It's just more nodes and more clunky :) so I'm trying to get an "agentic" version going using the main manager agent.

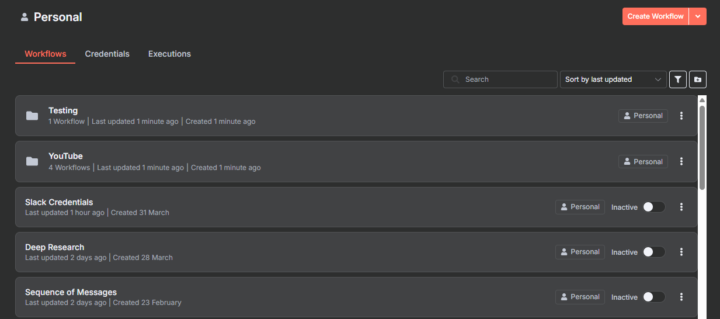

Folders are Here!! 📁

Folders have finally arrived in n8n! 🎉 This long-awaited feature makes organizing workflows so much easier. You will need to upgrade your instance to 1.86.0 (Latest Beta) to access this feature

Thank You for 100k 💙 (HUGE GIFT for you all...)

I honestly can’t put into words how grateful I am for this community. Just 6 months ago, this was all just an idea. Now, thanks to your support, engagement, and belief in this space, we’ve hit 100,000 subscribers on YouTube! None of this would have happened without you guys, and I've got the biggest smile on my face today. So, as a thank you, I’m doing something I’ll NEVER do again… 🔥 For THIS WEEK ONLY, the annual membership for AI Automation Society Plus is 50% OFF 🔥 That means you can lock in the discounted rate FOREVER. ⚠️ This will NEVER be this low again. Once the week is over, it’s gone forever. 👉 Claim your 50% OFF spot here → https://www.skool.com/ai-automation-society-plus/about If you’ve been on the fence about joining, this is your chance. Whether you’re a beginner just getting started with n8n or an experienced automation builder scaling AI-driven businesses, this community is filled with like-minded individuals—entrepreneurs, freelancers, and AI enthusiasts all working towards building, automating, and growing. No matter where you are in your journey, this community is for you.

2 likes • Mar 24

Love the demo. I have done a couple with ElevenLabs and n8n, and the biggest challenge I have is latency for real-time applications. If n8n has to do anything complex, the time can be 10-20 seconds and then ElevenLabs times out, even if you turn the turn time up and add negative prompting to the ElevenLabs agent (I have threatened it with the end of civilization if it times out, but it still does :)). I noticed you sped up parts of the video for the n8n duration. I think while we wait for LLMs to call tools faster, we'll have to add caching to n8n so we can retrieve 90% of the data in advance and only do the "transactions" like sending an email or booking an appointment in real time.
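The caching idea can be sketched as a small time-to-live cache that is filled ahead of the call, so the voice agent only waits on a slow lookup when the data is missing or stale. This is a plain-Python illustration under stated assumptions: `PrefetchCache`, `answer`, the keys, and the values are hypothetical, and nothing here is an n8n or ElevenLabs feature.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class PrefetchCache:
    """In-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def put(self, key: str, value: Any) -> None:
        self._store[key] = (self.clock() + self.ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # stale entry, force a fresh lookup
            return None
        return value


def answer(cache: PrefetchCache, key: str,
           slow_lookup: Callable[[str], Any]) -> Tuple[Any, str]:
    """Serve from cache when possible, otherwise do the slow lookup once."""
    cached = cache.get(key)
    if cached is not None:
        return cached, "cache"
    value = slow_lookup(key)
    cache.put(key, value)
    return value, "live"


# Example with a fake clock: prefetch before the call, then read during it.
now = [0.0]
cache = PrefetchCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("opening_hours", "9-5")
first = answer(cache, "opening_hours", lambda k: "looked up live")
```

Write operations such as sending an email or booking an appointment would bypass the cache entirely and stay as real-time calls.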

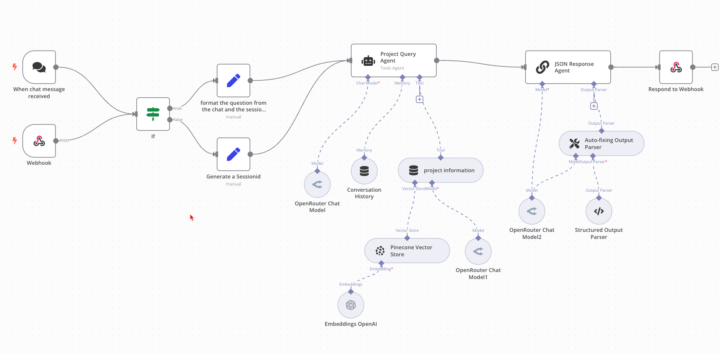

Latency of Agents/LLM chains in n8n

I am finding significant latency on agents like 4o-mini when they have to answer a question and then generate structured JSON output. It's similar to Nate's examples of a project manager: it answers the user's question, like "what's the current budget", but then also provides the project details for a UI, such as team members, stakeholders, recent updates, and timeline. When I used the tool agent to do both, it hallucinated a lot. I created a structured output parser with another agent to create the JSON, which made it consistent, but the latency went up from 4 seconds to 25. I did try some other smaller agents like grok and llama, but those didn't even follow the prompt and were very inconsistent. I'm now doing two flows: one to get the quick answer the voice agent can read, and a second call that the UI will use to get project data, as I want a generative interface that displays the "details" of the user query on screen while the voice agent summarizes. If anyone has dealt with the latency of multiple LLMs and found ways to speed it up, I'd be interested.
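One way to picture the two-flow split is to start the slow structured call in the background and hand the quick answer to the voice agent as soon as it is ready. The sketch below uses Python's `asyncio` with sleeps standing in for model calls. The function names, the delays, and the shape of the details object are assumptions for illustration, not the actual workflow.

```python
import asyncio
from typing import Callable, Tuple


async def quick_answer(question: str) -> str:
    """Stand-in for the fast call that produces the spoken reply."""
    await asyncio.sleep(0.01)
    return f"Short answer to: {question}"


async def project_details(question: str) -> dict:
    """Stand-in for the slower call that builds structured JSON for the UI."""
    await asyncio.sleep(0.05)
    return {"question": question, "team_members": [], "timeline": []}


async def handle(question: str,
                 speak: Callable[[str], None]) -> Tuple[str, dict]:
    # Start the slow call first so it runs while the quick one is answered.
    details_task = asyncio.create_task(project_details(question))
    answer = await quick_answer(question)
    speak(answer)  # the voice agent does not wait for the UI data
    details = await details_task
    return answer, details


spoken = []
answer, details = asyncio.run(handle("what's the current budget", spoken.append))
```

With this shape the perceived latency is the quick call alone, and the total time is roughly the slower of the two calls rather than their sum.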


Active 54d ago

Joined Feb 11, 2025
