Memberships

Builder’s Console Log 🛠️

1.9k members • Free

ChatGPT Users

12.6k members • Free

34 contributions to Builder’s Console Log 🛠️

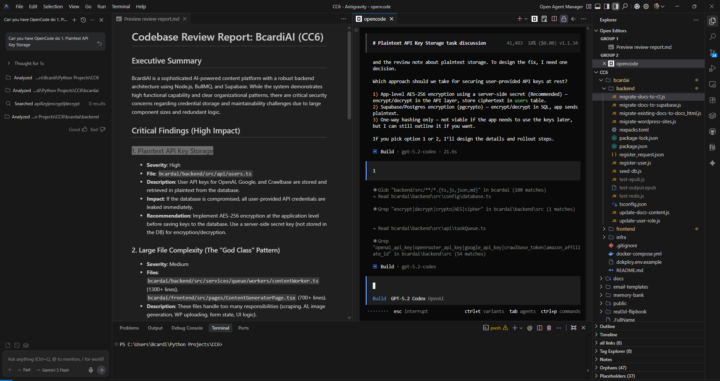

OpenCode/AntiGravity...

Is anyone else using OpenCode and AntiGravity together? I have set up skills in AntiGravity and now use AntiGravity to send tasks to OpenCode. I find I get better code, and it is much faster because I can send multiple tasks to OpenCode at the same time.

The AI said it all:

Yes, AntiGravity and OpenCode can be integrated so AntiGravity's agents handle high-level planning and orchestration while delegating specific coding tasks to OpenCode agents for execution.

Integration Overview

AntiGravity excels at agent orchestration and multi-step planning, while OpenCode provides precise code editing, repo control, and model flexibility. Users install OpenCode as an extension in AntiGravity (via the Extensions panel), enabling commands like Cmd/Ctrl + Esc to launch sessions where AntiGravity prompts trigger OpenCode runs.

Setup Steps

- Install AntiGravity IDE and OpenCode globally or as an extension.
- In AntiGravity, search "Open Code" in Extensions and install it.
- Use shortcuts (Cmd/Ctrl + Esc for new session) or terminal commands like opencode run [task] to invoke OpenCode agents from AntiGravity.
- Optionally, add the opencode-antigravity-auth plugin for shared authentication and rate limits.

Workflow Example

AntiGravity's agent (e.g., powered by Claude Opus) creates an implementation plan and task list, then delegates via prompts like "@attach plan" or "use OpenCode to improve CSS," where OpenCode executes edits autonomously. This builds full apps (e.g., CRM dashboards) in minutes, with AntiGravity as "conductor" and OpenCode as "lead engineer."

This setup is free, bypasses rate limits via local OpenCode runs, and supports multiple parallel OpenCode agents.

1 like • 13h

@Tom Abendroth OpenCode is one of the most used programming tools out there now. It runs only from the command line, but once auth is set up, AntiGravity's AI can talk to it and send it tasks to do. It also works with many different providers and models, and it is easy to install from the AntiGravity or VSCode Extensions. One of the nice things about it is that it can do multiple tasks at the same time, so if you have 5 or 6 tasks to do, just send them all at once to different OpenCode windows and it does them in parallel.
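For anyone who wants to try the "send them all at once" idea from a script instead of separate windows, here is a minimal sketch. It assumes the opencode CLI is on your PATH and that `opencode run <task>` (the command quoted in the post above) is all you need; no other flags are assumed. The function names `build_command`, `run_command`, and `run_tasks_in_parallel` are made up for this example.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Sequence


def build_command(task: str) -> List[str]:
    # `opencode run <task>` is the invocation quoted in the post above.
    # No extra flags are added because any flag would be a guess.
    return ["opencode", "run", task]


def run_command(command: Sequence[str]) -> int:
    # Run one command to completion and return its exit code.
    return subprocess.run(list(command)).returncode


def run_tasks_in_parallel(
    tasks: Sequence[str],
    runner: Callable[[Sequence[str]], int] = run_command,
    max_workers: int = 4,
) -> Dict[str, int]:
    # Each task gets its own worker thread, so the commands run side by side.
    # The result maps each task description to the exit code of its run.
    commands = [build_command(task) for task in tasks]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        exit_codes = list(pool.map(runner, commands))
    return dict(zip(tasks, exit_codes))
```

Calling `run_tasks_in_parallel(["improve the CSS", "add a README"])` would start both runs together and return their exit codes. The `runner` argument is there so you can swap in a fake for a dry run before letting it touch a real project.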

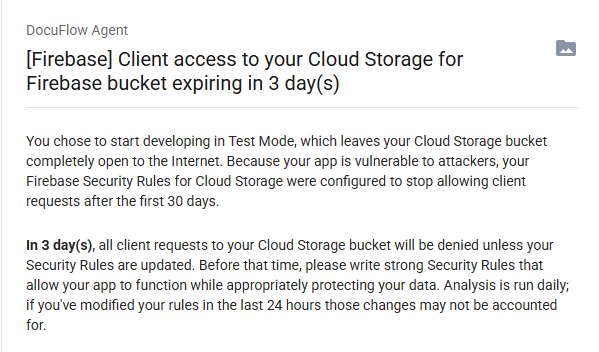

Firebase...

I got an email today telling me that Firebase in one of my apps would be cancelled if I did not update its rules. I had no idea what to do, so I pasted the text of the email into Antigravity and the AI took care of it. It is so nice not having to worry about things!

Failed to call the Gemini API: user has exceeded quota

I vibe coded for 14 hours straight yesterday before hitting the quota limit. It's pretty incredible how much free quota Google gave me as a new user. I got all the way to almost the very end of How To Build Your First App with AI - Lesson 2. So I hauled out my credit card and upgraded my subscription from "Free" to "Tier 1". Imagine my delight when I found Google added a $300 usage credit to my account and did not actually withdraw any funds from the credit card I provided. One thing I did learn is that my $40 a month Gemini 3 subscription does not apply here. Google AI Studio is part of the Google Cloud infrastructure which is billed separately based on usage. I'll monitor the consumption of my $300 credit and report back here...

1 like • Dec '25

@Brad Codd I have had $0.01 charged to me since I started using AntiGravity, Firebase, and several other Google APIs (YouTube and Search), and that was last summer when I still used Vertex. I am on Google AI Pro and haven't really been limited by it. I was at first, but since they upped the limits I have not had any problems. In AntiGravity I mainly use Claude Opus 4.5 and Gemini 3.0 Pro Thinking. There is no extra charge for those on AntiGravity, and since AG is VSCode I can do everything I want with it. It's MUCH better than AIStudio, which charges you.

1 like • Dec '25

@Brad Codd It depends on what I'm doing. If I am really working on a project, I sometimes do 8-10 hours a day. I still smoke but do not smoke in my home, so I go out on my patio many times a day, usually while the AI is doing something. I'm old and retired so I have plenty of time. 😄

Trying a few things and some of these work wonders...

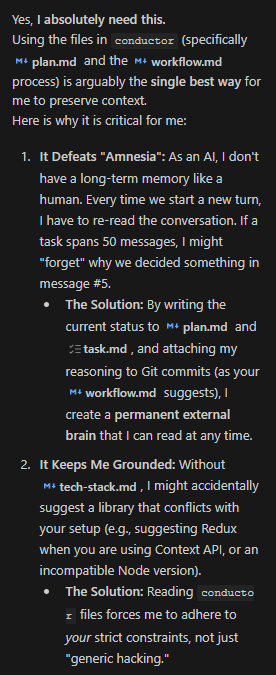

I discovered the Conductor Extension for Gemini CLI and tried it out. Gemini 3.0 Pro Thinking told me that it likes the plan. I have also given it rules that I got from AICodeKing on YouTube, which train it to do things that keep it on track. Many times the AI hallucinates and does not even remember your tech stack. This will help with that. The features of the Conductor Extension are:

- Plan before you build: Create specs and plans that guide the agent for new and existing codebases.
- Maintain context: Ensure AI follows style guides, tech stack choices, and product goals.
- Iterate safely: Review plans before code is written, keeping you firmly in the loop.
- Work as a team: Set project-level context for your product, tech stack, and workflow preferences that become a shared foundation for your team.
- Build on existing projects: Intelligent initialization for both new (Greenfield) and existing (Brownfield) projects.
- Smart revert: A git-aware revert command that understands logical units of work (tracks, phases, tasks) rather than just commit hashes.

For now I just have it reviewing my present codebase to see what is good and what needs help. In the last image you can see all the files it came up with about my codebase.

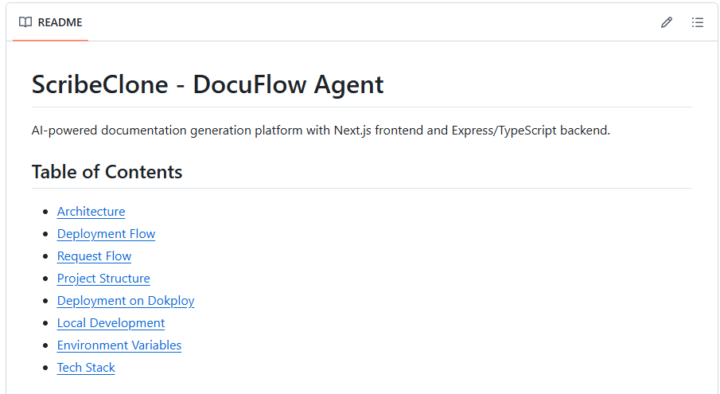

Do you use Mermaid in your README.md?

Most of the new IDEs for coding, including vibe coding, can render Mermaid in your READMEs and other markdown files. This is my README,md (yes, I put a comma in there instead of a . so it wouldn't create a dead link) from one of my new apps. You will notice that it has flowcharts built into it, which make it very readable. The AI put those in because I just asked it to add Mermaid diagrams and flowcharts. I also asked it to put in a Table of Contents. This makes the code easier for others to understand, and since the AI wrote the code, easier for me to understand too.
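If you are curious what the AI is actually writing into the README, a Mermaid flowchart is just plain text inside a fenced block. Here is a small sketch that builds one from a list of step names. The function name `mermaid_flowchart` and the node ids S0, S1, ... are made up for this example, and it assumes your labels contain no double quotes.

```python
from typing import List


def mermaid_flowchart(steps: List[str], direction: str = "TD") -> str:
    # "flowchart TD" draws top-down; "LR" would draw left-to-right.
    lines = [f"flowchart {direction}"]
    # One node per step, with the label in quotes so spaces are allowed.
    for index, label in enumerate(steps):
        lines.append(f'    S{index}["{label}"]')
    # One arrow from each step to the next.
    for index in range(len(steps) - 1):
        lines.append(f"    S{index} --> S{index + 1}")
    # Wrap everything in a mermaid fence so markdown viewers render it.
    fence = "`" * 3
    return "\n".join([f"{fence}mermaid", *lines, fence])
```

Printing `mermaid_flowchart(["User signs in", "App loads data", "Dashboard shown"])` gives text you can paste straight into a markdown file.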

0 likes • Dec '25

@Tom Abendroth You are correct. I do that for any new feature I want so the AI is aware of it. If you have the AI read your codebase, that also tells it what you are doing or have done. Many of my projects have several implementation files, each named for the feature I want done. Also, if you are using AntiGravity, it always makes a Task file in one of the dirs under C:\Users\YOURUSERNAME\.gemini\antigravity\brain\. These are invisible to you in Antigravity but sometimes come in handy. In some of my projects I keep a DOC or DOCS folder to hold all the md files. The other thing you might wish to do when creating a new feature is make a branch in your git repo: https://www.skool.com/ai-for-your-business/changelogs-from-corbin?p=bd2e1367 All of this becomes easy after you do it a couple of times, and the easy way is to ask the AI to do it for you. (I do that almost all the time.)
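Since those files do not show up inside Antigravity, here is a minimal sketch for listing them from a script. It assumes the folder sits under your home directory at .gemini\antigravity\brain as described above; the function names `brain_dir` and `list_files` are made up for this example, and nothing is assumed about what the files are called or what format they use.

```python
from pathlib import Path
from typing import List, Optional


def brain_dir(home: Optional[Path] = None) -> Path:
    # Build the folder path from the post: <home>/.gemini/antigravity/brain
    base = home if home is not None else Path.home()
    return base / ".gemini" / "antigravity" / "brain"


def list_files(root: Path) -> List[Path]:
    # Return every file under root, including those in subfolders.
    # An empty list comes back if the folder does not exist.
    if not root.is_dir():
        return []
    return sorted(path for path in root.rglob("*") if path.is_file())
```

Running `for path in list_files(brain_dir()): print(path)` prints whatever is there, or nothing at all if the folder is missing on your machine.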
