Owned by Dan

Memberships

The Main Branch

258 members • $19/month

The 1% in AI

957 members • $39/month

The AI Identity Lab

160 members • Free

AI Video Creators Community

4.4k members • $9/month

AI Profit Boardroom

2.3k members • $59/m

The AI Advantage

73.3k members • Free

Search Atlas Insider

1.2k members • Free

AEO - Get Recommended by AI

1.5k members • Free

Agentic Business Academy

263 members • $60/month

18 contributions to AEO - Get Recommended by AI

A 20-year observation: Why AEO sometimes fails (and the "Trust Layer" I’m adding)

Hey everyone, I’ve been diving deep into the AEO Blueprint content. As someone who has spent 30 years in sales and 20 years watching SEO evolve, seeing this shift to "Answer Engines" is the most significant change I've witnessed.

However, I wanted to share a quick "war story" / observation from the field, especially given the recent news about AI hallucinations (Google's glue on pizza, etc.).

The Observation: I've noticed that even when we get the technical AEO/Schema 100% perfect, the AI models (ChatGPT/Gemini) can still hesitate to recommend a business if the human signals don't match the code. If we tell the AI via Schema "This is the best specialist," but the reviews/sentiment online are mixed or the NAP data is messy, the AI treats the discrepancy as a "Hallucination Risk" and suppresses the answer to play it safe.

The Solution: "The Trusted Answer System"

Because of this, I’ve started adding a pre-work phase before I even touch the schema, which I’m calling The Trusted Answer System. Basically, I’m spending time manually fixing the client's "Real World" truth (reviews, citations, consistency) first. This ensures that when we do apply the AEO Blueprint strategies, the AI trusts the data immediately because the "Real World" matches the "Code World."

I view it like this:

- The AEO Blueprint is the engine (High performance).
- The Trusted Answer System is the fuel quality (Safety/Trust).

You can’t put race fuel in a car with a clogged line.

Has anyone else noticed AI getting confused when a client's "offline reputation" doesn't match their "online schema"?
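For anyone who wants to spot-check the "Real World matches Code World" idea before touching schema, here is a minimal Python sketch of a NAP (name, address, phone) comparison. It assumes you already have the client's LocalBusiness JSON-LD as a dict and have collected their directory listings by hand. The function names, the `source` labels, and the sample business are all hypothetical, and the normalization is deliberately crude (it does not treat "St" and "Street" as equal, and only strips a US-style leading "1" from phone numbers).

```python
import re


def normalize_phone(phone: str) -> str:
    """Keep digits only and drop a leading US country code '1'."""
    digits = re.sub(r"\D", "", phone)
    if len(digits) == 11 and digits.startswith("1"):
        return digits[1:]
    return digits


def normalize_text(value: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^\w\s]", "", value.lower())
    return " ".join(cleaned.split())


def schema_to_nap(schema: dict) -> dict:
    """Flatten a LocalBusiness-style JSON-LD dict into normalized NAP fields."""
    address = schema.get("address", {})
    parts = ("streetAddress", "addressLocality", "addressRegion", "postalCode")
    full_address = " ".join(str(address.get(part, "")) for part in parts)
    return {
        "name": normalize_text(schema.get("name", "")),
        "address": normalize_text(full_address),
        "phone": normalize_phone(schema.get("telephone", "")),
    }


def find_nap_mismatches(schema: dict, listings: list[dict]) -> list[tuple[str, str]]:
    """Return (source, field) pairs where a listing disagrees with the schema."""
    expected = schema_to_nap(schema)
    mismatches = []
    for listing in listings:
        actual = {
            "name": normalize_text(listing["name"]),
            "address": normalize_text(listing["address"]),
            "phone": normalize_phone(listing["phone"]),
        }
        for field in ("name", "address", "phone"):
            if actual[field] != expected[field]:
                mismatches.append((listing["source"], field))
    return mismatches


# Hypothetical sample data, not a real business.
example_schema = {
    "@type": "LocalBusiness",
    "name": "Example Dental Care",
    "telephone": "+1 (555) 010-0199",
    "address": {
        "streetAddress": "12 Main St",
        "addressLocality": "Springfield",
        "addressRegion": "IL",
        "postalCode": "62701",
    },
}
example_listings = [
    {"source": "directory_a", "name": "Example Dental Care",
     "address": "12 Main St, Springfield, IL 62701", "phone": "555-010-0199"},
    {"source": "directory_b", "name": "Example Dental",
     "address": "12 Main Street, Springfield, IL 62701", "phone": "555-010-0100"},
]

for source, field in find_nap_mismatches(example_schema, example_listings):
    print(f"{source}: {field} does not match the schema")
```

A check like this only surfaces mismatches to fix manually. It says nothing about how any AI model actually weighs those discrepancies, and it does not cover reviews or sentiment.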

Will AI Search Replace Traditional Search?

Lately, we’ve seen people rely more on AI search tools like ChatGPT, Perplexity, and Gemini for answers instead of going straight to Google. For example, when I need a quick explanation or summary, I’ll often just ask AI instead of clicking through multiple sites. But when I need something verified (like checking product specs or comparing pricing), I still find myself Googling. Question: Do you think AI search will eventually replace traditional search engines, or will they coexist and serve different purposes? And from your own habits, when do you prefer using AI over Google (and vice versa)?

0 likes • Nov '25

@David Makrias Totally!! And it’s actually becoming more than just being referenced. These AI engines aren’t just pointing to trusted sources… they’re using those sources to form the answer itself. That’s why we’re seeing impressions climb while clicks fall. Discovery is now happening inside the AI moment, and only later (maybe) does a click occur for a transaction. The real optimization shift isn’t ranking → referencing… it’s ranking → being ingested and used.

2 likes • Nov '25

@David Makrias That’s the right question, and honestly, it’s the same question businesses asked before the internet existed. (Revealing my tenure in this space, unfortunately..)

Back then, nobody could perfectly trace which billboard, radio segment, brochure, or word-of-mouth moment drove the sale… yet business still grew because influence was the real driver, not perfect attribution. AI is putting us back into that upstream space, but with far better signals than the pre-internet era.

The tangible business value shows up in:

- branded searches rising
- direct traffic climbing
- assisted conversions increasing
- AI citations and mentions compounding over time
- buyers showing up “pre-convinced” because AI framed the decision for them

It’s not that visibility stops leading to outcomes; it simply stops being linear. Traditional SEO was 1:1: search → click → convert. AEO is upstream influence: AI → understanding → trust → later visit → conversion.

It’s messier to measure, but it’s not mysterious. Just like pre-internet marketing, visibility shapes demand, and AI just becomes the new 80/20 engine that amplifies the part of your content the market actually wants. That’s where the business value lives.

Search Isn’t Dying — It’s Getting Absorbed. And Nobody’s Ready.

For the first time in 25 years, consumers aren’t choosing a search engine… they’re choosing an assistant. Atlas. Comet. Perplexity. Gemini. Copilot. Even Apple’s about to enter the chat.

And here’s the quiet shift almost no one is talking about: We’re moving from Browser → Search → Results to Surface → AI → Answer. Traditional search isn’t disappearing; it’s just moving upstream where users never see it.

Google knows this. That’s why the homepage is slowly steering toward AI-first by default. The “two-lane highway” (classic search vs AI mode) won’t last. In a year or two, we won’t ask: “Which search engine do you use?” We’ll ask: “Which AI do you trust to interpret the internet for you?”

That’s the battleground now. And the real optimization shift isn’t ranking → referencing. It’s ranking → being ingested. The brands that understand that won’t just survive this transition; they’ll shape it.

What do you think: are we ready for an AI-first internet, or are we clinging to the last stage of the old era?

If Schema Is the Answer… What Was the Question?

Lately I’ve been watching a funny pattern in our AEO conversations. Someone gets picked up by an AI engine (awesome moment, cue the small victory dance)… and then the discussion immediately splits into two camps:

Camp 1: “See? Schema’s optional.”
Camp 2: “Quick, cover the whole site in JSON-LD before the engines notice!”

It’s like we’ve collectively decided schema is the answer… without stopping to ask what problem we’re even trying to solve.

Here’s the reality check: Early AI mentions are the digital equivalent of a polite nod, not a long-term relationship. AI engines are still in their “sure, bring whatever, I’m not picky” era. But give it time: systems mature, rules tighten, and suddenly the slob phase is over. If your content structure is wobbly and your entities don’t line up, dumping schema everywhere is basically putting lipstick on a website with an identity crisis.

Schema isn’t magic. It’s not a shortcut. And it’s definitely not Flex Seal for SEO. It’s annotation. The wiring, not the blueprint.

So before we crown schema as the savior, maybe we should ask: Are we using schema strategically… or just because it feels like the easiest lever to pull? Because when the engines start grading more strictly (and they will), the sites with thoughtful structure, clean entities, and intentional markup are the ones that will stand up straight. Everyone else? They’ll be untangling JSON like Christmas lights.

Curious to hear others: Are we leaning too hard on markup because it’s measurable… and avoiding the deeper architectural work?

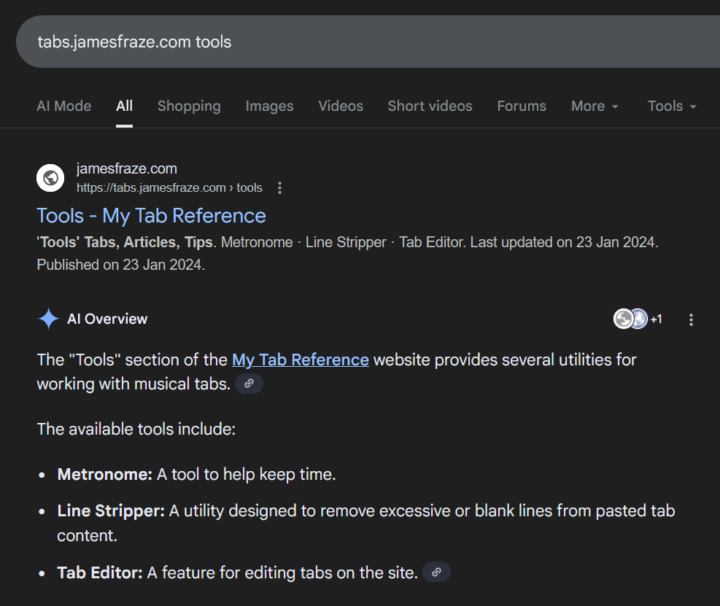

Is this how to test?

I guess this means it's indexed by AI? I haven't done any schema work, was just looking for my tab editor this morning. Made me happy regardless lol. Now, if I only knew how to monetize the thousands of visitors each of my sites gets (yes I suck at that).

2 likes • Nov '25

@James Fraze Love seeing your site show up like that — AI basically gave you a little nod and said, ‘Yeah, I see you.’ 😂 But a quick reality check for everyone: this doesn’t mean schema is optional, and it definitely doesn’t mean the move now is to carpet-bomb every page with markup. Right now AI engines are in their ‘chill professor’ phase — they’ll accept late homework and sloppy formatting. But once the grading rubric tightens (and it will), anyone who panic-pasted schema everywhere is going to be stuck untangling a mess. Rushed schema is like duct-taping your house wiring: works today, burns the place down tomorrow. AEO isn’t ‘add JSON and walk away.’ The engines want clarity, consistency, and coherent entity structure — the stuff you can’t fake with a plugin. So yeah, enjoy the win — but don’t let it fool folks into thinking that long-term visibility is as simple as ‘dump schema everywhere.’ That’s how you build technical debt that will hurt way more when the engines finally tighten the screws.


@dan-league-2776

Digital marketing veteran with 20+ years experience. Expanding AI expertise to enhance data-driven strategies and client results.

Active 3d ago

Joined Oct 27, 2025
