
Memberships

• ReachInbox Community: 762 members • Free
• AI Business Trailblazers Hive: 12.7k members • Free
• Make Money With Make.com: 444 members • Free
• Maker School: 2.3k members • $184/month
• AI Automation Society: 208.5k members • Free
• The RoboNuggets Community: 1.4k members • $97/month
• AI Profit Boardroom: 1.8k members • $49/month

7 contributions to AI Automation Society

🎉 Just shipped my first Apify Actor!

Started looking for a Google Maps competitor analyzer... Couldn't find one... So I built one... And here we are! It's like Tinder for businesses - swipe through all your local competitors without the awkward small talk. 🔗 https://lnkd.in/eMzEiDZ9 Sometimes the best tools are born from "fine, I'll do it myself" energy.

📌 Need to clean up company names in Google Sheets?

A client asked me how to normalize messy business names, so I’m sharing the solution here for anyone else dealing with the same headache.

✅ Goal: Strip suffixes like “LLC” and “Inc.” plus trailing punctuation, convert “+” to “and,” and clean up extra spaces. Optionally normalize capitalization too.

🔧 Google Sheets Formula (Preserve Capitalization):

```excel
=TRIM(
  REGEXREPLACE(
    REGEXREPLACE(
      REGEXREPLACE(
        REGEXREPLACE(B2, ",?\s*\b(LLC|Inc|Incorporated|Corp|Corporation|Ltd)\b\.?", ""),
        "\+", "and"
      ),
      "\s+", " "
    ),
    "[\.,]$", ""
  )
)
```

Examples:
Kane Partners LLC → Kane Partners
BURKE + CO → BURKE and CO

🔠 Normalize Capitalization (Optional):

```excel
=PROPER(
  TRIM(
    REGEXREPLACE(
      REGEXREPLACE(
        REGEXREPLACE(
          REGEXREPLACE(B2, ",?\s*\b(LLC|Inc|Incorporated|Corp|Corporation|Ltd)\b\.?", ""),
          "\+", "and"
        ),
        "\s+", " "
      ),
      "[\.,]$", ""
    )
  )
)
```

Note that PROPER lowercases every letter after the first in each word, so acronyms change (“Solve IT” becomes “Solve It”) and the inserted “and” becomes “And.”

More Examples (capitalization-preserving formula):
Dental Medical Careers, Inc. → Dental Medical Careers
Solve IT Strategies, Inc. → Solve IT Strategies
Global Talent Partners + Associates LLC → Global Talent Partners and Associates
Jade & Co. Executive Search LLC → Jade & Co. Executive Search

Drop a 💬 if you want to adapt this formula for different cases like removing “|” or trimming website taglines!
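If you need the same cleanup outside Sheets (say, in a script that preprocesses a CSV export), here is a minimal Python sketch that applies the four substitutions in the same innermost-first order. It is an illustrative port, not part of the original post: `normalize_company` is a hypothetical name, `str.title()` only approximates PROPER, and although Python’s `re` and Sheets’ RE2 should agree on these particular patterns for ordinary ASCII names, test it on your own data.

```python
import re

# Same four patterns as the Sheets formula, applied innermost-first.
SUFFIX_RE = re.compile(r",?\s*\b(LLC|Inc|Incorporated|Corp|Corporation|Ltd)\b\.?")


def normalize_company(name: str, proper: bool = False) -> str:
    s = SUFFIX_RE.sub("", name)   # 1. drop legal suffixes (and a preceding comma)
    s = re.sub(r"\+", "and", s)   # 2. "+" -> "and"
    s = re.sub(r"\s+", " ", s)    # 3. collapse whitespace runs to one space
    s = re.sub(r"[.,]$", "", s)   # 4. drop one trailing "." or ","
    s = s.strip()                 # TRIM
    # str.title() approximates PROPER(): capitalize each word, lowercase the rest.
    return s.title() if proper else s


if __name__ == "__main__":
    for raw in ["Kane Partners LLC", "BURKE + CO", "Jade & Co. Executive Search LLC"]:
        print(f"{raw} -> {normalize_company(raw)}")
```

Like the formula, this is case-sensitive, so a lowercase “llc” survives; add `re.IGNORECASE` to `SUFFIX_RE` if your data needs it.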

🚀 New Video: Locally Host n8n AI Agents for FREE (step by step)

In this video, I walk you through exactly how to self-host n8n on your own machine using the free AI Starter Kit. The setup also gives you PostgreSQL, Ollama, and Qdrant, all running locally with zero coding required. Even if you’re a complete beginner with no technical background, don’t worry: I break everything down step by step and keep it as simple as possible. Just follow along with what I do on screen. Once everything is running locally, you won’t need to pay for cloud hosting or a virtual machine, so your n8n workflows run without hosting fees. Plus, your data stays 100% in your environment, giving you full control and peace of mind.

8 likes • Jun 17

I'd also add a script for updating n8n. Here is mine:

```bash
#!/usr/bin/env bash
set -euo pipefail

#---------------------------------------
# n8n Upgrade Script (Docker Compose & Traefik)
# Assumes n8n is managed by docker-compose in ~/n8n-traefik
# Backs up data, compose file, certs, stops services,
# pulls latest n8n, restarts services.
#---------------------------------------

# --- Configuration ---
# Directory containing docker-compose.yml
COMPOSE_DIR="/home/info/n8n-traefik"
# n8n data directory used in docker-compose.yml (!! ADJUST IF DIFFERENT !!)
N8N_DATA_DIR="/home/info/n8n-data"
# Service name for n8n in docker-compose.yml
N8N_SERVICE_NAME="n8n"
# --- End Configuration ---

# Timestamped backup directory
TIMESTAMP=$(date +%F-%H%M%S)
BACKUP_ROOT="$HOME/n8n-backup-$TIMESTAMP"
echo "📦 Creating backup directory: $BACKUP_ROOT"
mkdir -p "$BACKUP_ROOT"

# Change to the compose directory for context
cd "$COMPOSE_DIR" || { echo "❌ Error: Could not change to $COMPOSE_DIR"; exit 1; }
echo "📂 Working directory: $(pwd)"

# 1. Backup n8n data directory
if [ -d "$N8N_DATA_DIR" ]; then
  echo "⏳ Backing up n8n data from $N8N_DATA_DIR..."
  # Using rsync can be more efficient for large directories
  sudo rsync -a --info=progress2 "$N8N_DATA_DIR/" "$BACKUP_ROOT/n8n-data/"
  echo "✔️ Backed up n8n data directory"

  # 1a. Explicitly back up SQLite database if using default DB
  DB_FILE="$N8N_DATA_DIR/database.sqlite"
  if [ -f "$DB_FILE" ]; then
    cp "$DB_FILE" "$BACKUP_ROOT/database.sqlite"
    echo "✔️ Backed up SQLite database file"
  else
    echo "ℹ️ No SQLite database file found in $N8N_DATA_DIR (maybe external DB or different location?)"
  fi
else
  echo "⚠️ Configured n8n data directory '$N8N_DATA_DIR' not found. Skipping data backup."
  # Consider exiting if backup is critical: exit 1
fi

# 2. Backup docker-compose.yml
echo "📝 Backing up docker-compose.yml..."
cp docker-compose.yml "$BACKUP_ROOT/docker-compose.yml.bak"

# 3. Backup SSL certificate data
if [ -f "acme.json" ]; then
  echo "🔒 Backing up acme.json (SSL certificate)..."
```

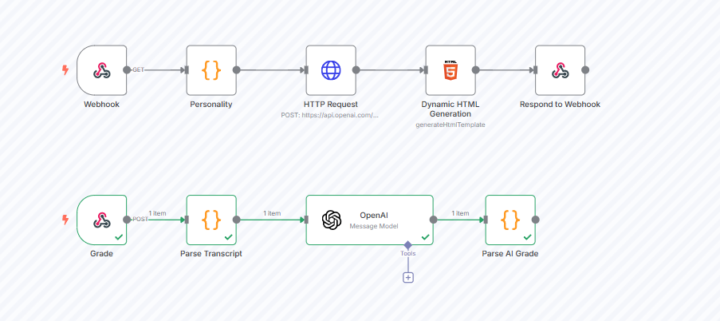

OpenAI Real-Time Conversation with AI / n8n

I had a client who wanted to train salespeople in real time against different personas and then evaluate their performance. I thought about this for a while; I knew it was possible but expected it to be complicated. Turns out the base functionality in n8n takes just 3-4 nodes, way simpler than I initially thought.

The breakthrough for me was the "generate HTML" node. It lets me call my webhook directly from any web app or link, which is pretty slick.

In this setup, I configure a personality for the AI before making the OpenAI Realtime request (with Whisper transcription):

```json
{
  "instructions": "{{ $json.ai_instructions }}",
  "model": "gpt-4o-realtime-preview-2024-12-17",
  "modalities": ["audio", "text"],
  "voice": "alloy",
  "input_audio_transcription": {
    "model": "whisper-1"
  }
}
```

Here's the cool part: the HTML template gets returned to the user, building the page on the fly. The agent on the other end takes on the specified personality (like a roof salesman), and the conversation feels surprisingly real. When you wrap up, the AI evaluates the transcript and scores your performance against your criteria.

Some other interesting possibilities that came to mind:
• Interview preparation with AI playing tough interviewers
• Negotiation training with different difficulty levels
• Customer complaint handling with progressively angrier customers
• Medical students practicing patient consultations
• Language learning through realistic conversation scenarios
• Security training for social engineering attempts

This approach cuts development time dramatically while still delivering a powerful training tool. The applications are pretty much endless, and it's all easier than expected.

Best,
Brian
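To make the moving parts concrete outside n8n, here is a minimal Python sketch of the two requests the flow revolves around: the persona session body (same fields as the JSON above, with `ai_instructions` standing in for the `{{ $json.ai_instructions }}` expression) and a wrap-up evaluation prompt. Assumptions, not details from the post: that the HTTP Request node POSTs this body to OpenAI's `/v1/realtime/sessions` endpoint, and the helper names plus the scoring wording in `build_evaluation_prompt`, which are hypothetical. The code only builds the request; it does not send it.

```python
import json
import urllib.request

# Values copied from the session config in the post.
REALTIME_MODEL = "gpt-4o-realtime-preview-2024-12-17"
# Assumption: the session body is POSTed to OpenAI's Realtime sessions endpoint.
SESSIONS_URL = "https://api.openai.com/v1/realtime/sessions"


def build_session_payload(ai_instructions: str, voice: str = "alloy") -> dict:
    """Same fields as the post's JSON; ai_instructions carries the persona."""
    return {
        "instructions": ai_instructions,
        "model": REALTIME_MODEL,
        "modalities": ["audio", "text"],
        "voice": voice,
        "input_audio_transcription": {"model": "whisper-1"},
    }


def build_session_request(ai_instructions: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) the POST request for a persona session."""
    body = json.dumps(build_session_payload(ai_instructions)).encode("utf-8")
    return urllib.request.Request(
        SESSIONS_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def build_evaluation_prompt(transcript: list[tuple[str, str]], criteria: list[str]) -> str:
    """Hypothetical wrap-up step: ask a model to score the trainee against your criteria."""
    rubric = "\n".join(f"- {c}" for c in criteria)
    dialogue = "\n".join(f"{speaker}: {line}" for speaker, line in transcript)
    return (
        "Score the trainee from 1 to 5 on each criterion and justify each score briefly.\n"
        f"Criteria:\n{rubric}\n\nTranscript:\n{dialogue}"
    )
```

Passing the request to `urllib.request.urlopen` would actually send it. Keeping the persona in `instructions` means swapping scenarios (tough interviewer, angry customer) only changes one string per session.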

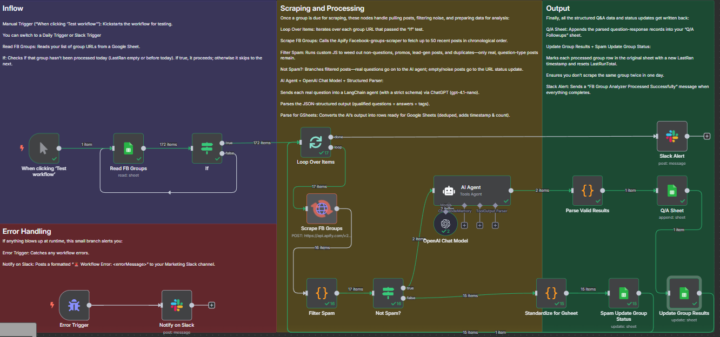

Community-Question Pipeline (with Spam Precheck)

I did this for a client last week, but it could apply to any niche where you want to become an authority.

1. Load your source list
   • Reads a Google Sheet of community sources (FB groups, subreddits, Discord channels, etc.).
   • Skips any source already processed today via a “LastRan” timestamp check.
2. Scrape raw posts
   • Uses a scraper node (Apify, RSS pull, Reddit API, etc.) to fetch the newest posts.
3. Spam & noise filter (pre-AI!)
   • A custom JS “Filter Spam” node examines each post’s text and URL.
   • Drops anything with no question, promotional language, obvious lead-gen, or duplicates.
   • Only genuine question-style posts continue, so you never burn an OpenAI token on ads or fluff.
4. AI analysis & response
   • Feeds each filtered question into a LangChain/ChatGPT agent with your strict JSON schema.
   • Gets back a validated object:
     – qualified: true/false
     – metadata (URL, username, datetime, location)
     – a concise, on-brand answer
     – topic tags for categorization
5. Normalize & dedupe
   • A single Code node unwraps the LLM output (handles both output: [] and direct arrays), de-dupes by URL+question, and tallies how many Q&A items you generated.
6. Write results & update status
   • Appends all new Q&A rows (with qualifiedCount) to your “Q/A Followups” sheet.
   • Updates each source row with LastRan and LastRunTotal so you won’t re-scrape the same group today.
7. Notifications & error alerts
   • On success: posts “Processed Successfully” to Slack/Discord/Teams.
   • On error: immediately notifies your team with the error message, so you can fix broken selectors or API issues before they pile up.

Adapting to Any Niche
1. Sources → Your community
2. Spam filter tuning
3. AI prompt & tags
4. Output destinations
5. Notification channels & cadence

With the precheck in place (its settings are adjustable too), every OpenAI call is spent on a genuine question, maximizing ROI on your token spend and keeping your community engagement efficient and relevant.
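The LastRan check, the spam precheck and the normalize/dedupe step are the parts that decide whether tokens get spent, so here is a minimal Python sketch of steps 1, 3 and 5. The original runs these as JavaScript Code nodes in n8n; this is a swapped-in illustration, and the phrase lists, the `MAX_LINKS` threshold, and the flat `text`/`url`/`question`/`qualified` field names are hypothetical placeholders rather than the client's actual schema.

```python
import re
from datetime import date, datetime
from typing import Any, Optional

# Hypothetical tuning lists: adjust per community ("Spam filter tuning").
PROMO_PHRASES = ("dm me", "check out my", "sign up", "limited time",
                 "free trial", "link in bio", "book a call")
QUESTION_STARTS = {"how", "what", "why", "when", "where", "which", "who",
                   "can", "does", "is", "are", "should", "any", "anyone"}
MAX_LINKS = 2  # hypothetical: more links than this reads as lead-gen


def ran_today(last_ran_iso: Optional[str], today: date) -> bool:
    """Step 1: True if the source's LastRan timestamp falls on `today`."""
    if not last_ran_iso:
        return False
    return datetime.fromisoformat(last_ran_iso).date() == today


def looks_like_question(text: str) -> bool:
    lowered = text.strip().lower()
    if "?" in lowered:
        return True
    words = lowered.split(maxsplit=1)
    return bool(words) and words[0] in QUESTION_STARTS


def is_promotional(text: str) -> bool:
    lowered = text.lower()
    too_many_links = len(re.findall(r"https?://", lowered)) > MAX_LINKS
    return too_many_links or any(p in lowered for p in PROMO_PHRASES)


def filter_spam(posts: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Step 3: keep only unique, non-promotional, question-style posts."""
    seen: set[str] = set()
    kept = []
    for post in posts:
        text = (post.get("text") or "").strip()
        key = " ".join(text.lower().split())  # case/whitespace-insensitive dedupe key
        if not text or key in seen:
            continue
        seen.add(key)
        if looks_like_question(text) and not is_promotional(text):
            kept.append(post)
    return kept


def unwrap_llm_items(raw: Any) -> list[dict[str, Any]]:
    """Step 5: accept either {"output": [...]} or a bare list of items."""
    if isinstance(raw, dict):
        raw = raw.get("output", [])
    return list(raw) if isinstance(raw, list) else []


def dedupe_qa(batches: list[Any]) -> tuple[list[dict[str, Any]], int]:
    """Step 5: de-dupe by URL + question and count qualified items."""
    seen: set[tuple[str, str]] = set()
    rows = []
    for raw in batches:
        for item in unwrap_llm_items(raw):
            key = (item.get("url", ""), item.get("question", "").strip().lower())
            if key not in seen:
                seen.add(key)
                rows.append(item)
    qualified_count = sum(1 for row in rows if row.get("qualified") is True)
    return rows, qualified_count
```

Keyword heuristics like these are cheap but blunt; the point is only to stop obvious ads and non-questions before the LLM call, and the lists are meant to be tuned per community.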


Active 44d ago

Joined Feb 7, 2025

Ashburn, VA
