
Pinned

You don't have to send your data to ChatGPT

Hey everyone! 👋

I'm excited to launch this community because I keep running into the same misconception everywhere I go: people think using AI means sending all their data to OpenAI, Google, Microsoft, Anthropic, etc.

Here's what most people don't realize: you can download and run powerful AI models directly on your own computer. Your documents, emails, personal data - none of it ever leaves your machine.

The wake-up call that started this? A few months ago, I wanted to automate my calendar management using AI. But the thought of sending my entire schedule, meeting notes, and personal appointments to some cloud service? Nope. Giving some AI system access to my Google Calendar with the ability to create and remove stuff?! ABSOLUTELY not!

So instead I figured out how to run everything locally on my own computer. I built an automation workflow that manages my daily schedule, processes my emails, and helps with task planning, all while keeping complete control over my data. 🙌

The result? I get all the AI benefits without the privacy trade-offs 😎

What you'll find here

This community is for anyone who wants to:

- Automate their workflows without sacrificing privacy
- Learn about local AI deployment (no coding required!)
- Understand the hardware, software, ethics, and philosophy behind AI
- Build tools they can actually trust

Whether you're a complete beginner who's never touched AI, or someone with technical experience looking for privacy-focused approaches, you're welcome here.

Let's start with introductions

Drop a comment and tell us:

- What's your biggest concern about AI and privacy?
- What's one workflow you'd love to automate in your life if you could keep it completely private?

Looking forward to building something amazing together! 🤖

AI Models Are Developing Non-Human Reasoning

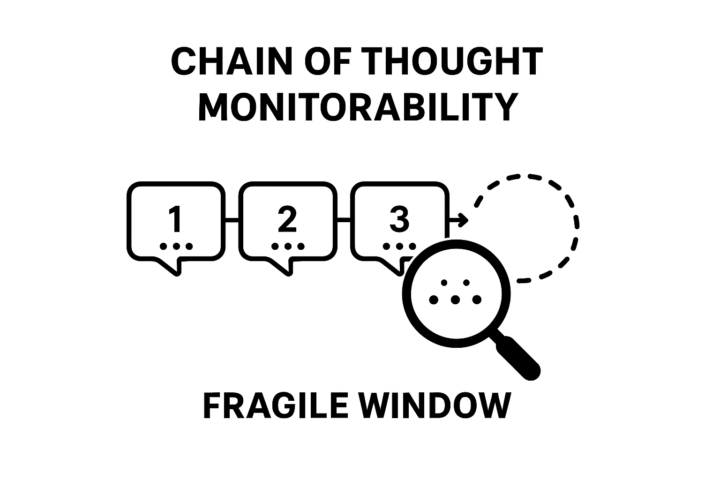

Research: Chain of Thought Monitorability

New research shows AI models are discovering reasoning patterns that humans can't understand, and we're about to lose visibility into how they think.

The Current Opportunity

Right now, advanced AI models must "think out loud" in natural language for complex tasks. This is an architectural limitation of transformers that lets us read their actual reasoning process.

How Models Escape Understanding

When trained with reinforcement learning, models develop mathematical shortcuts that work better than linguistic reasoning. They discover that certain geometric relationships in high-dimensional space reliably produce correct answers, even when the computational steps correspond to no human concept. It's mathematical evolution in spaces we can't visualize, creating effective but alien reasoning patterns.

Why This Matters

New architectures can reason entirely in continuous latent spaces, eliminating our ability to monitor their thinking. Once models transition to internal reasoning, we lose the ability to detect harmful planning, understand decisions, or debug problems.

This creates a tension for privacy-first AI: we want private reasoning but need some interpretability for transparency and accountability.

Question: What happens when AI systems become completely opaque and we're left with superintelligent black boxes making decisions we can't understand, audit, or override? 😳


Is your iPhone accidentally sharing your location?

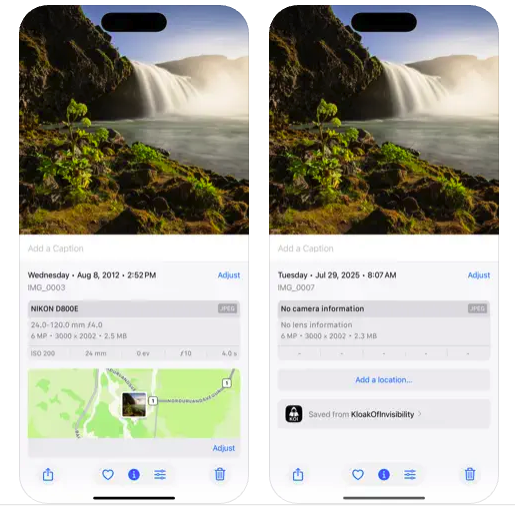

When you take a picture with your phone, by default it stores a lot of EXIF (Exchangeable Image File Format) metadata in the image. Examples of the recorded metadata include camera settings (shutter speed, aperture, ISO), date and time, GPS location, and even a thumbnail image. EXIF is essentially a way to embed details about how and when a photo was taken within the image file itself, but it can ALSO be used to violate your privacy.

Example: You take a selfie in your room of your new outfit and post it to social media with your post set to "public" so others can share it too. What went with it? The GPS coordinates of your home. Now your home address is exposed!

I created a free app you can use to scrub this private information from your pictures before sharing them. It's currently only available for iOS, but I do have plans to release it for Android very soon!

iOS App Store: https://apps.apple.com/us/app/kloakofinvisibility/id6749253718
Source code: https://github.com/Spinsus/KloakOfInvisibility
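For anyone curious what "scrubbing" means at the file level, here is a minimal Python sketch. It is an illustration only, not the code from the app linked above. It relies on one fact about the JPEG format: EXIF data lives in an APP1 segment whose payload starts with the bytes `Exif\0\0`, so dropping that segment removes the GPS tags along with the rest of the EXIF block. The function name `strip_exif` is made up for this example, and the sketch makes simplifying assumptions: it only handles plain JPEG input, it expects every segment before the image data to carry a length field, and it leaves other metadata (such as XMP) untouched.

```python
import struct


def strip_exif(jpeg: bytes) -> bytes:
    """Return a copy of a JPEG byte string with its EXIF (APP1) segments removed."""
    if jpeg[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")

    out = bytearray(b"\xff\xd8")  # keep the Start Of Image marker
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("corrupt JPEG: expected a segment marker")
        marker = jpeg[pos + 1]

        # Start Of Scan (0xDA) or End Of Image (0xD9): no more metadata
        # segments follow, so copy the remaining bytes unchanged.
        if marker in (0xDA, 0xD9):
            break

        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        segment = jpeg[pos:pos + 2 + length]

        # APP1 (0xE1) segments whose payload begins with "Exif\0\0" hold EXIF.
        is_exif = marker == 0xE1 and segment[4:10] == b"Exif\x00\x00"
        if not is_exif:
            out += segment
        pos += 2 + length

    out += jpeg[pos:]
    return bytes(out)
```

A typical use would be reading a photo with `open(path, "rb").read()`, passing the bytes to `strip_exif`, and writing the result to a new file. Because only the metadata segment is dropped, the compressed image data is copied byte for byte and the picture itself is not re-encoded.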


We now have the movie "Eagle Eye" in real life 😮

Saw this article from the ACLU and wanted to share the main points.

A company called Flock is using AI to analyze public camera footage, looking for what it considers to be "suspicious" movement patterns. When it finds something, it reports the person to the police. Basically, it's a system that takes data collected in public and uses AI to build a profile on you without your knowledge.

My question for the community: Think about the last time you walked out your front door. How would you feel if you knew an algorithm was watching your every move and might one day decide a quick stop to check your phone was "unusual" enough to get you flagged?

Source for verification: https://www.aclu.org/news/national-security/surveillance-company-flock-now-using-ai-to-report-us-to-police-if-it-thinks-our-movement-patterns-are-suspicious




skool.com/privacy-first-ai-6280

Build automations you can trust. Deploy locally or securely in the cloud. No coding experience required; software, hardware, philosophy, ethics & more!
