Deploy Embedding Models on Amazon SageMaker

Train and Deploy Embedding Models on Amazon SageMaker 🚀

Continued Pre-Training LLMs

No labeled or paired data? Continue training your model on your unlabeled data instead.

Llama 3, Mistral, Gemma

0%

Unlock at Level 1

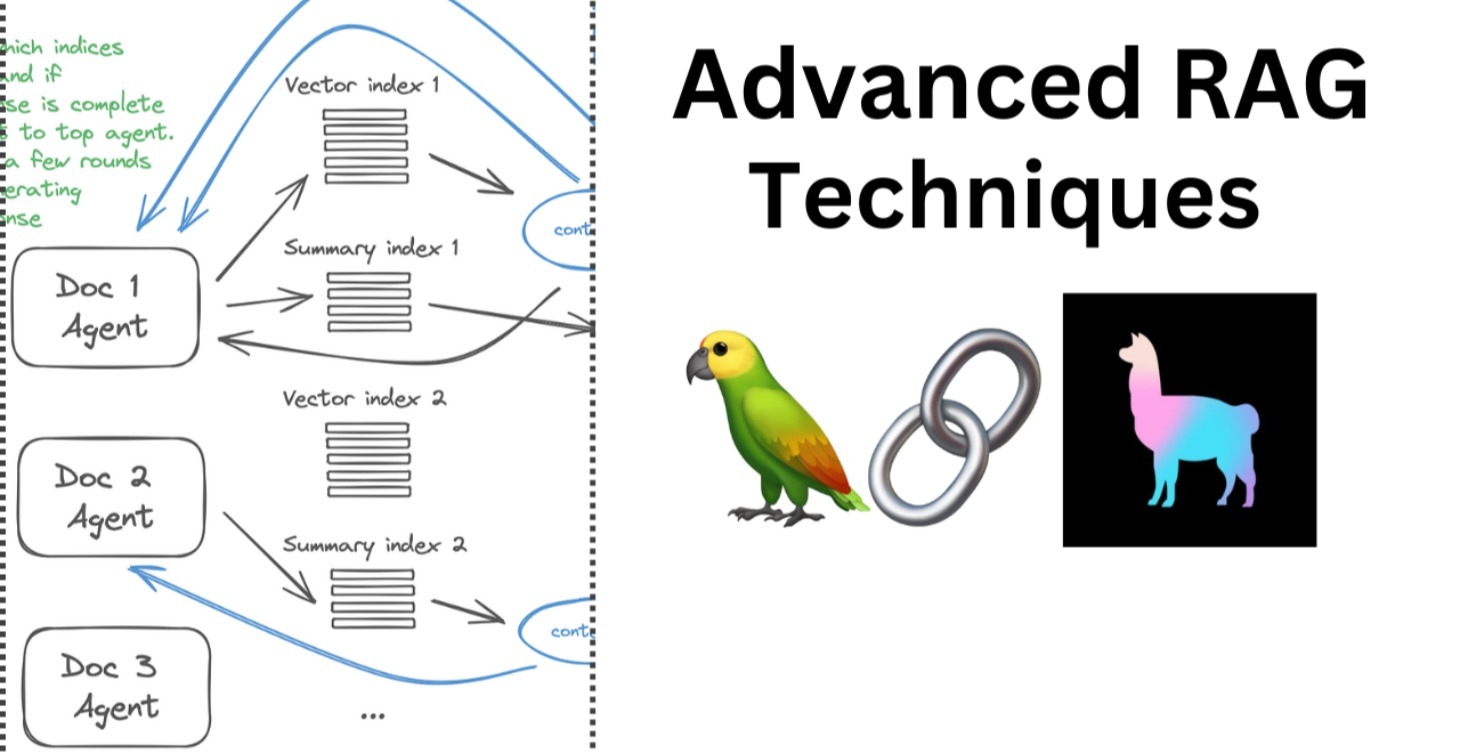

Advanced RAG Techniques

Curated advanced RAG techniques in one place

Deploy Mixtral 8x7B on AWS Inferentia2

Deploy Mixtral 8x7B on AWS Inferentia2. This guide walks step by step through setting up the environment, retrieving the Hugging Face LLM Inf2 Container, and deploying the model on Amazon SageMaker, with the goal of efficient inference at high throughput and low latency.

Advanced Synthetic Embeddings Generation

Generate synthetic embeddings to increase data variety and boost performance