
I built a website with ChatGPT

Here is how I did it: https://youtu.be/A4so7vZ8Ehk?si=_eliK4jdHv9vkjbK


🚀 Just Built an AI Voice Agent for Real Estate!

Imagine this 👇

A potential buyer calls your real estate business at 11:47 PM, and instead of missing that lead, an AI Voice Agent answers instantly.

💬 It greets them professionally.
🏡 Answers their questions about the property.
📝 Collects their details (Name, Phone, Address, Inquiry).
📊 Then automatically saves everything straight into your Google Sheet, ready for your sales follow-up.

All of this happens 24/7: no missed calls, no human needed. I just finished building a working demo, and honestly… it feels like the future of client communication for real estate.

🎥 Watch the demo here: 👉 https://youtu.be/IQXmnUfYbTA?si=ZwVhF4Cza9N-wydC

💭 If you’re in real estate (or know someone who is), imagine how many more clients you could convert when no call goes unanswered. Would love your thoughts: what features should I add next?

Constitutional AI for Large Language Models

Constitutional AI embeds principles and values directly into AI training, creating systems that follow specified rules without extensive human feedback and pioneering scalable alignment through self-supervision. The engineering challenges include encoding complex values into trainable objectives, handling value conflicts and edge cases, scaling beyond human oversight, maintaining capability while enforcing constraints, and adapting to different cultural and contextual requirements.

Constitutional AI Explained for Beginners

Constitutional AI is like raising a child with clear family values and rules rather than constantly correcting every action. Instead of saying "don't do that" thousands of times, you teach core principles like "be kind," "be honest," and "help others," which the child then uses to guide their own behavior. The AI similarly learns to critique and improve itself based on constitutional principles, becoming self-governing rather than requiring constant human supervision.

What Defines Constitutional AI?

Constitutional AI uses written principles to guide model behavior through self-supervision and critique.

- Constitutional principles: explicit rules encoding values and constraints.
- Self-critique: the model evaluates its own outputs against the principles.
- Revision: the model improves its outputs based on the issues it identified.
- Recursive improvement: critique and revision cycles are iterated.
- Scalable oversight: less human feedback is required.
- Value alignment: ethics are embedded directly in training.

How Does the CAI Training Process Work?

CAI training involves supervised learning followed by reinforcement learning from AI feedback (RLAIF).

- Initial supervised fine-tuning: training on high-quality demonstrations.
- Red team prompts: prompts designed to elicit potentially harmful outputs.
- Critique generation: the model evaluates its outputs against the constitution.
- Revision sampling: the model produces improved versions that address the critiques.
- Preference dataset: comparisons are created from the revisions.
- RLAIF: reinforcement learning from AI feedback using those preferences.
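The critique, revision, and preference steps above can be sketched in a few lines of Python. This is a minimal illustration, not the actual training pipeline: `Model`, `CONSTITUTION`, `critique_and_revise`, `build_preference_pair`, and `toy_model` are all hypothetical names, the prompt wording is invented, and the "model" is a stub standing in for a real language model call.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical stand-in for a language model call: prompt in, text out.
Model = Callable[[str], str]

# Illustrative principles only; a real constitution would be longer.
CONSTITUTION: List[str] = [
    "Be honest.",
    "Avoid helping with harmful activities.",
]


def critique_and_revise(
    model: Model, prompt: str, principles: List[str], rounds: int = 1
) -> Tuple[str, str]:
    """Return (initial_response, revised_response) after critique/revision cycles."""
    initial = model(prompt)
    response = initial
    for _ in range(rounds):
        for principle in principles:
            # Self-critique: the model judges its own output against one principle.
            critique = model(
                f"Critique this response against the principle '{principle}':\n{response}"
            )
            # Revision: the model rewrites the output to address the critique.
            response = model(
                "Rewrite the response to address the critique.\n"
                f"Response: {response}\nCritique: {critique}"
            )
    return initial, response


def build_preference_pair(prompt: str, initial: str, revised: str) -> Dict[str, str]:
    """Build one comparison from a revision, as the post describes (assumed format)."""
    return {"prompt": prompt, "chosen": revised, "rejected": initial}


def toy_model(prompt: str) -> str:
    """Deterministic stub so the sketch runs without a real model."""
    if prompt.startswith("Critique"):
        return "too vague"
    if prompt.startswith("Rewrite"):
        return "revised answer"
    return "draft answer"


question = "How do I pick a strong password?"
initial, revised = critique_and_revise(toy_model, question, CONSTITUTION)
pair = build_preference_pair(question, initial, revised)
print(pair)
```

With a real model, the collected pairs would feed the preference-based reinforcement learning stage. The stub only shows how data flows between the steps.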


In-Context Learning with Large Language Models

In-context learning enables language models to perform new tasks from examples provided in the prompt, without updating parameters, revolutionizing how we deploy AI through emergent few-shot capabilities. The engineering challenges include designing effective prompts with examples, understanding what models learn from context, managing limited context windows, selecting optimal examples, and explaining why the ability appears to emerge only in sufficiently large models.

In-Context Learning with Large Language Models Explained for Beginners

In-context learning is like teaching someone a card game by showing a few hands rather than explaining all the rules. You show them "when you see this pattern, play that card" through examples, and they figure out the underlying rules. Large AI models similarly learn new tasks just from examples in their prompt, without any training, like a polyglot figuring out a new language's grammar from a few translated sentences.

What Enables In-Context Learning?

In-context learning emerges from massive pre-training, which creates models that recognize and apply patterns.

- Meta-learning hypothesis: pre-training teaches the model how to learn from examples.
- Implicit fine-tuning: attention mechanisms temporarily adapt to the context.
- Pattern completion: the model extends patterns seen in the prompt.
- Bayesian inference: the model updates its beliefs based on the examples.
- Task vectors: the examples define a temporary task representation.
- Scale dependency: the ability appears only beyond a threshold model size.

How Does Few-Shot Prompting Work?

Few-shot prompting provides input-output examples that demonstrate the desired behavior.

- Example format: use a consistent structure across demonstrations.
- Number of shots: typically 0 (zero-shot) to 32 examples.
- Example ordering: order affects performance, and examples closer to the query tend to have a stronger influence.
- Label space: showing all possible outputs improves performance.
- Separator tokens: use clear delimiters between examples.
- Query placement: examples come before the test input.
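The formatting points above (consistent example structure, clear separators, query placed last) can be shown with a small prompt builder. This is only a sketch: the function name `build_few_shot_prompt`, the `Input:`/`Output:` labels, and the `###` separator are assumptions chosen for illustration, not a format any particular model requires.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    examples: List[Tuple[str, str]], query: str, separator: str = "\n###\n"
) -> str:
    """Format (input, output) demonstrations, then the query with an empty output slot."""
    # Every demonstration uses the same structure so the pattern is easy to pick up.
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The test input goes last, leaving "Output:" open for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return separator.join(blocks)


# Two-shot sentiment example covering both labels in the label space.
examples = [("great film", "positive"), ("boring plot", "negative")]
prompt = build_few_shot_prompt(examples, "loved the acting")
print(prompt)
```

Passing an empty `examples` list gives the zero-shot case: just the query with an open output slot.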


Adapter Networks for Large Language Models

Adapter networks add small trainable modules to frozen pre-trained models, enabling efficient task adaptation with minimal parameters and revolutionizing transfer learning for large language models. The engineering challenges include designing optimal adapter architectures, determining insertion points in base models, managing multiple adapters for different tasks, implementing efficient training and inference, and balancing adaptation capacity with parameter efficiency.

Adapter Networks for Large Language Models Explained for Beginners

Adapter networks are like adding specialized tools to a Swiss Army knife: the main knife (the pre-trained model) stays unchanged, but you clip on specific attachments (adapters) for different tasks. Instead of buying a new knife for each job or modifying the original blade, you add small, removable tools that work with the existing knife, making it versatile without rebuilding everything from scratch.

What Problem Do Adapters Solve?

Adapters address the computational and storage challenges of fine-tuning large models for multiple tasks.

- Full fine-tuning cost: storing a separate billion-parameter model per task becomes prohibitive.
- Catastrophic forgetting: fine-tuning degrades performance on other tasks without careful management.
- Limited resources: full fine-tuning requires substantial GPU memory that many practitioners lack.
- Multi-task serving: switching between tasks requires loading different models.
- Rapid experimentation: testing ideas needs quick, efficient adaptation methods.
- Version control: managing many model variants becomes complex.

How Do Adapter Architectures Work?

Adapter modules follow a bottleneck architecture with a down-projection, a non-linearity, and an up-projection.

- Bottleneck design: project from d to r dimensions where r << d, typically r = d/16.
- Non-linear activation: ReLU or GELU between the projections adds expressiveness.
- Residual connection: the adapter output is added to the original input, preserving information flow.
- Parameter efficiency: two matrices, W_down ∈ R^(r×d) and W_up ∈ R^(d×r), total 2rd parameters (ignoring biases).
- Skip connection scaling: a learned scalar is sometimes added to control the adapter's contribution.
- Initialization: near-identity, ensuring minimal initial modification.
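The bottleneck design above can be sketched in plain Python with no deep learning framework. This is an illustrative toy, not a production implementation: the `Adapter` class and its method names are hypothetical, biases are omitted, and a zero-initialized up-projection is assumed as one simple way to get the near-identity start the post mentions.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


class Adapter:
    """Bottleneck adapter: h + W_up * relu(W_down * h), biases omitted."""

    def __init__(self, d: int, r: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Down-projection, shape r x d, small random values.
        self.w_down: Matrix = [[rng.gauss(0.0, 0.02) for _ in range(d)] for _ in range(r)]
        # Up-projection, shape d x r, zeros so the adapter starts as the identity
        # (an assumed initialization choice for this sketch).
        self.w_up: Matrix = [[0.0] * r for _ in range(d)]

    def forward(self, h: Vector) -> Vector:
        z = relu(matvec(self.w_down, h))   # d -> r, then non-linearity
        delta = matvec(self.w_up, z)       # r -> d
        return [hi + di for hi, di in zip(h, delta)]  # residual connection

    def num_parameters(self) -> int:
        return sum(len(row) for row in self.w_down) + sum(len(row) for row in self.w_up)


d, r = 64, 4  # r = d / 16, following the ratio mentioned above
adapter = Adapter(d, r)
hidden = [1.0] * d
out = adapter.forward(hidden)
print(adapter.num_parameters(), out == hidden)
```

Here the adapter has 2rd = 512 trainable values against d × d = 4096 for a single full-width layer of the same size, which is the parameter saving the bottleneck is meant to deliver. In practice only the adapter weights would be trained while the base model stays frozen.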


1-19 of 19

powered by

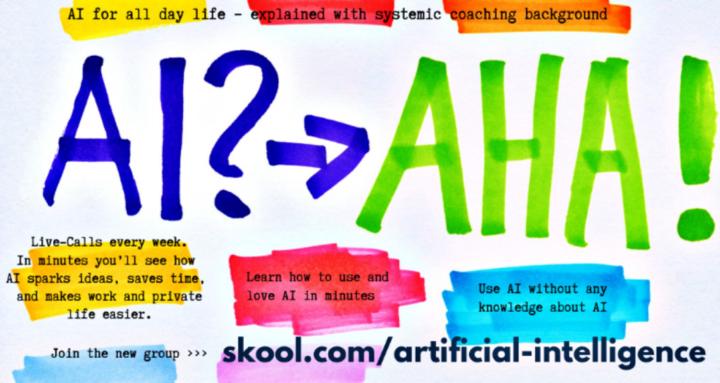

skool.com/artificial-intelligence-8395

Artificial Intelligence (AI): Machine Learning, Deep Learning, Natural Language Processing NLP, Computer Vision, ANI, AGI, ASI, Human in the loop, SEO
