Memberships

Agent Zero

2.1k members • Free

AI Automation Society

246.1k members • Free

IA Masters Automations

939 members • $79/month

AndyNoCode Premium

177 members • $59/month

AI Automations by Jack

1.6k members • $77/m

AI Automation Society Plus

3.4k members • $94/month

Early AI-dopters

822 members • $59/month

19 contributions to AI Automation Society

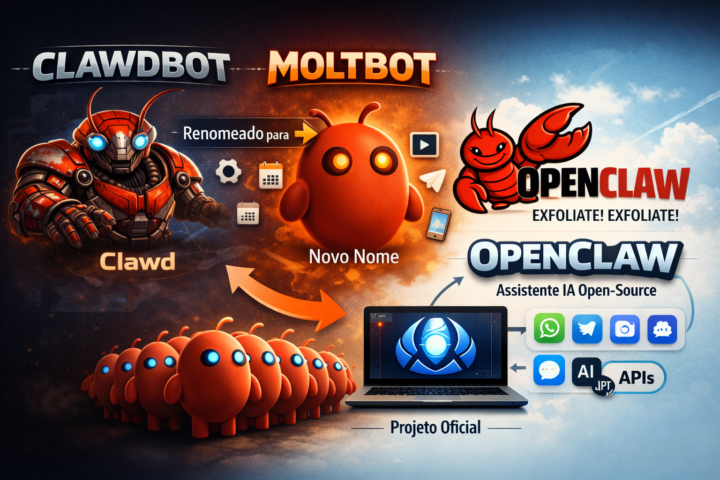

OpenClaw now

🧠 What Happened (Straightforward Explanation)

1) Origins: Clawdbot
- The project started as Clawdbot, an open-source AI assistant that runs autonomously on your own server or machine.
- It gained viral attention in tech communities for what it can do.

2) Name Change to Moltbot
- Anthropic (the company behind Claude) raised trademark concerns because the original name, Clawdbot, conflicted with its branding.
- The creator renamed the project Moltbot.
- The functionality remained the same; only the name and branding changed.

3) Repository Move to OpenClaw
- The project's GitHub repository, formerly under "moltbot", is now housed under a new GitHub organization called OpenClaw.
- This means OpenClaw is the current official home of the code and ongoing development.

⚙️ What the Tool Does Today

Moltbot / OpenClaw is essentially:
- An AI agent framework that runs locally or on a server (VPS).
- A connector to messaging platforms that provides always-on interaction.
- An integration layer for APIs (email, calendars, drive, etc.) that executes tasks.
- A tool that supports different large models (not limited to Claude).

It's more than a chatbot: it acts as an automated assistant.

🚨 Security & Risk (updated as of today)

Recent reports from cybersecurity researchers emphasize:
- Security risks exist if the tool is deployed carelessly.
- Many early adopters exposed dashboards and control panels without passwords.
- Instances have been found exposed on the open internet.
- If an instance is misconfigured, attackers might gain access to sensitive systems.

This aligns with the creator's own warnings that the tool is experimental and not ready for production use by non-technical users.

📈 Adoption Status

As of today:
- The project continues to receive attention and forks.
- Community contributions are increasing.
- Discussions around best practices, safety guides, and deployment patterns are active on GitHub and social media.
- People are experimenting with integrating Moltbot/OpenClaw into workflows, but most deployments are still technical and early-stage.

0 likes • 56m

@Hicham Char This is undoubtedly a laboratory. Anyone who doesn’t test it will waste time. I believe it is bringing the same kind of movement that ChatGPT brought. What we need to do is think about how to use it within acceptable risk limits. (Risk is not the same as being in danger.) But staying on the sidelines can be worse, especially for those who are already in the AI game.

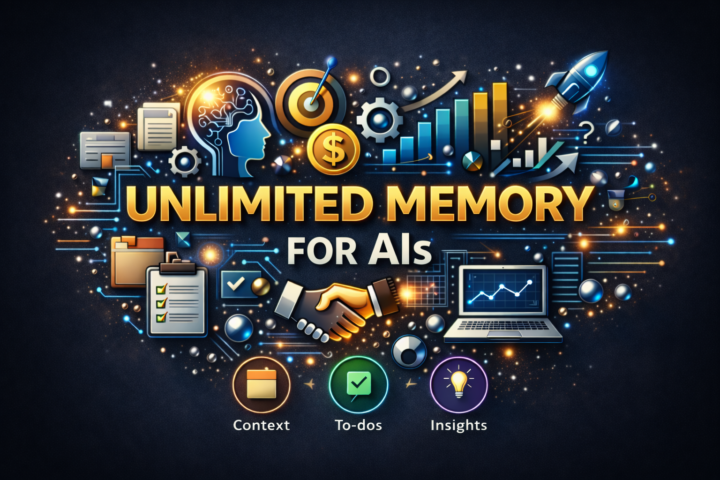

UNLIMITED MEMORY FOR AI

A practical way to overcome the memory limits of AI models (Claude, ChatGPT, Gemini) by externalizing memory into files, without writing code.

Problem
- AI models have limited memory.
- When processing many files (e.g., dozens of transcripts), they "forget" parts of the content, lose context, or hallucinate.
- Even large files that are "accepted" are not always read in full.

Solution
- Use a tool that allows the AI to read and write local files (e.g., Claude Desktop / Claude Code).
- These files act as persistent notes, allowing the AI to resume work after its internal memory is "reset."

The 4 components of the system
1. Data: files to be processed (transcripts, emails, tickets, documents, etc.).
2. Context (context.md): describes the main objective of the task.
3. Checklist / To-dos (todos.md): list of steps/files to process, marked off as progress is made.
4. Insights (insights.md): where the AI continuously saves extracted results.

How the cycle works
- The AI processes the files.
- It continuously updates the three documents.
- When internal memory runs out, the AI rereads the context and to-dos and resumes where it left off.
- The process continues until everything is completed, maintaining quality.

Setup (no code)
- Install Claude Desktop.
- Use Code mode to allow local read/write access.
- Select the folder containing the files.
- Use a structured prompt that follows the standard structure below.

Standard prompt structure
- Goal: what to analyze/extract.
- Before you start: create the three files.
- As you work: update insights and checklist.
- After memory reset: reread context and to-dos.
- Final constraint: continue until everything is completed.

Use case examples
- Extract customer language for marketing and copywriting.
- Create real FAQs from conversations.
- Map sales objections.
- Identify churn signals.
- Prioritize leads in old email archives.
- Generate feature ideas from recurring requests.

Conclusion
- "Unlimited memory" comes from external files, not from the model itself.
- With context + checklist + insights, AI can work for hours without losing quality.
- The method is reusable for virtually any type of data or objective.
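
The method itself needs no code, but the bookkeeping behind it can be sketched in a few lines of Python for anyone who wants to see why it survives a memory reset. This is only an illustration under assumptions: the file names come from the post, while the function names (`init_workspace`, `pending`, `record`, `run`) and the `analyze` callback standing in for the AI are hypothetical.

```python
from pathlib import Path
from typing import Callable

# File names taken from the post; everything else here is illustrative.
CONTEXT, TODOS, INSIGHTS = "context.md", "todos.md", "insights.md"
MEMORY_FILES = {CONTEXT, TODOS, INSIGHTS}


def init_workspace(folder: Path, goal: str) -> None:
    """Create the three memory files, leaving any existing ones untouched."""
    data_files = sorted(
        p.name for p in folder.iterdir()
        if p.is_file() and p.name not in MEMORY_FILES
    )
    if not (folder / CONTEXT).exists():
        (folder / CONTEXT).write_text(f"# Goal\n{goal}\n", encoding="utf-8")
    if not (folder / TODOS).exists():
        checklist = "".join(f"- [ ] {name}\n" for name in data_files)
        (folder / TODOS).write_text(checklist, encoding="utf-8")
    if not (folder / INSIGHTS).exists():
        (folder / INSIGHTS).write_text("# Insights\n", encoding="utf-8")


def pending(folder: Path) -> list[str]:
    """Return the data files that are still unchecked in todos.md."""
    lines = (folder / TODOS).read_text(encoding="utf-8").splitlines()
    prefix = "- [ ] "
    return [line[len(prefix):] for line in lines if line.startswith(prefix)]


def record(folder: Path, name: str, insight: str) -> None:
    """Append one insight, then tick the matching checklist entry."""
    with (folder / INSIGHTS).open("a", encoding="utf-8") as f:
        f.write(f"- {name}: {insight}\n")
    todos = folder / TODOS
    text = todos.read_text(encoding="utf-8")
    todos.write_text(
        text.replace(f"- [ ] {name}\n", f"- [x] {name}\n", 1),
        encoding="utf-8",
    )


def run(folder: Path, goal: str, analyze: Callable[[str], str]) -> None:
    """Process every unchecked file; safe to call again after an interruption."""
    init_workspace(folder, goal)
    for name in pending(folder):
        text = (folder / name).read_text(encoding="utf-8")
        record(folder, name, analyze(text))
```

Because all progress lives in `todos.md` and `insights.md`, calling `run` a second time after a crash or reset picks up only the unchecked files, which is the same property the prompt relies on when it tells the AI to reread the context and to-dos.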

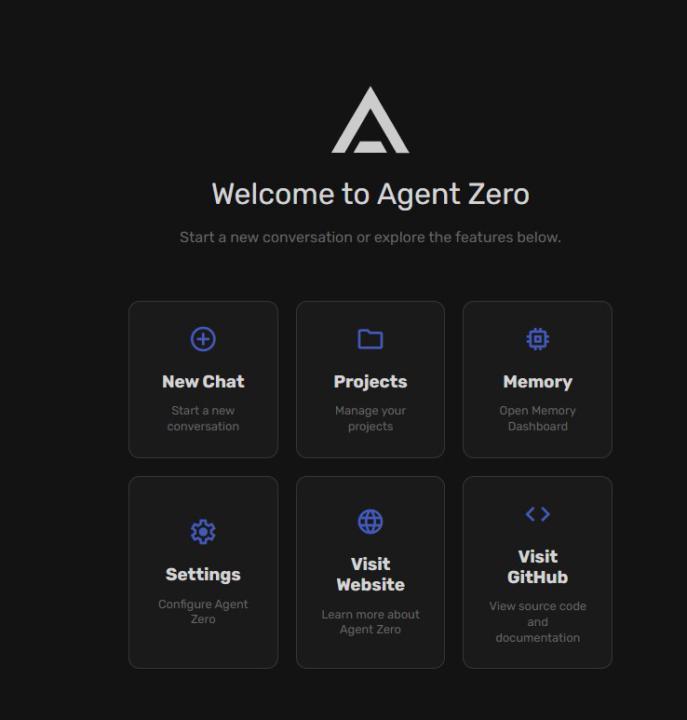

AGENT Zero

🤖 What is Agent Zero

Agent Zero is an open-source AI agent that runs 100% locally inside a Docker container, with full access to an isolated Linux operating system. It is not just a chatbot. It is an AI system that can plan, reason, and execute real actions such as running commands, manipulating files, executing code, generating reports, editing images and videos, analyzing data, and automating complex workflows, all securely and privately.

🔑 Key features
- 🧠 Autonomous AI agent (plans and executes tasks)
- 🐳 Runs in Docker (isolated and safe)
- 🔐 Privacy-first: your data stays on your machine
- 🌍 Supports cloud models (OpenRouter, OpenAI, Anthropic, etc.)
- 🖥️ Supports local models (Ollama, LM Studio)
- 🧰 Full access to Linux tools and libraries
- 🔎 Fully open source and auditable on GitHub

🚀 What it's used for
- Task automation
- Coding, debugging, and benchmarking
- Accounting and financial analysis
- File manipulation (PDFs, images, videos)
- Data analysis and visualization
- Security research / ethical pentesting
- Private document analysis
- Local task management

🧠 In one sentence

Agent Zero is a powerful, privacy-focused local AI agent that does real work on your computer, faster and more reliably than traditional AI chat tools.

agent0ai/agent-zero: Agent Zero AI framework

0 likes • 17d

To install it, first download **Docker Desktop** (Windows Intel/AMD). The installer restarts the PC when it finishes. You may need to start the **Docker engine** from the Windows CMD: `WSL.exe` may prompt you to start it, and you only need to confirm in the CMD.

In Docker, search for **agent0ai/agent-zero**. Select the **latest version**, click **Pull**, then **Run**. Now check the port; in my case it was **32768**. You may need to change the port setting from **80** to **0** so Docker can use another available port. Access it like this:

```
http://localhost:32768
```

Then configure the **LLM API**:

* **External Services** → **API Keys**
* **Agent Settings** → model used
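
If the page does not load, a quick way to tell whether the container is actually listening is to test the port from Python. This is a minimal sketch using only the standard library; the helper names `is_port_open` and `ui_url` are hypothetical, and 32768 is just the port from my setup, so substitute whatever port Docker shows for your container.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def ui_url(port: int) -> str:
    """Build the local address for the web UI on the given host port."""
    return f"http://localhost:{port}"


if __name__ == "__main__":
    port = 32768  # assumption: replace with the host port Docker assigned
    if is_port_open("127.0.0.1", port):
        print(f"Something is listening; open {ui_url(port)}")
    else:
        print(f"Nothing is listening on port {port}; check the container")
```

A successful connection only shows that something is listening on that port, not that it is Agent Zero, so it is a first check before looking at the container logs.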

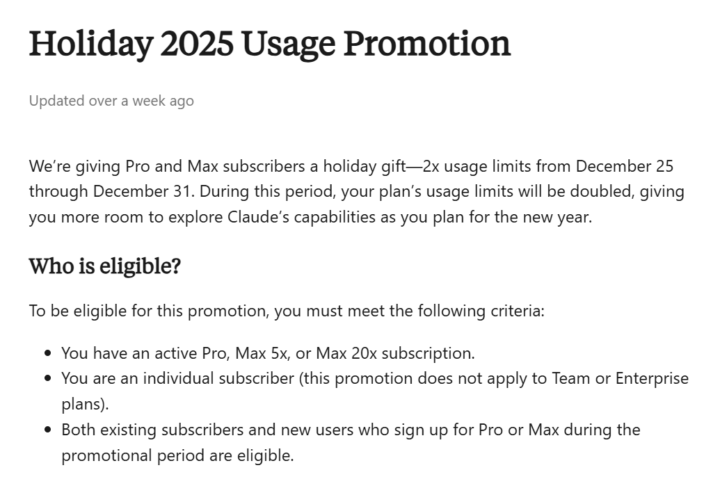

Claude 2x UUUPPP

Holiday 2025 Usage Promotion | Claude Help Center

Active 3m ago

Joined Mar 6, 2025
