3 contributions to The AI Advantage

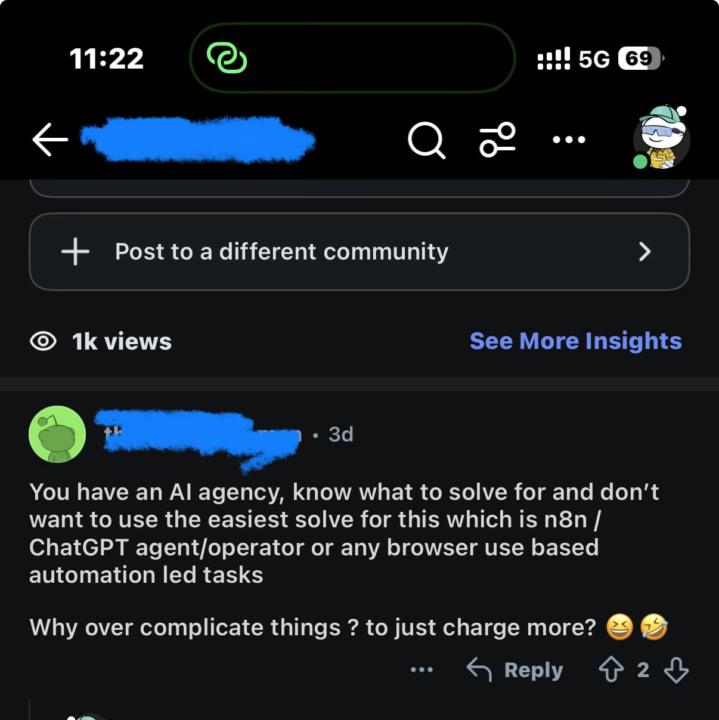

Every time I share the system we're building, at least one comment says: "Why not just use n8n? This is easy". So here's why... it's not :)

We’re building an agent (MK1) that does large-scale competitor analysis across dozens of newsletters automatically!

Scraping → Structuring → Compressing → Multi-LLM analysis → Aggregation → Dashboards.

If it were as simple as “drag a few n8n nodes,” trust me, we’d be doing that. Allow me to elaborate:

1. The data sources we pull from are NOT friendly to scrapers.
- Requests get blocked instantly
- HTML structure changes unpredictably
- Anti-bot systems shut down your pipeline mid-run
- Content loads dynamically
- Layouts differ per issue
- Rate limits kick in
- Rendering methods break your parser

When you have to keep the entire structure consistent for downstream LLM analysis, a single DOM change breaks the whole chain. No-code tools don't handle that kind of fragility well.

2. The content isn’t simple text, it requires meaningful structure.

When you’re analyzing 30–100 newsletters at a time, you need:
- Section extraction
- Visual mapping
- CTA identification
- Ad block recognition
- Tone markers
- Intent patterns
- Word & emoji stats
- Structural compression (to cut token costs by ~70%)

3. Real orchestration > visual workflows

People underestimate what happens when you’re:
- Running 40+ analysis jobs in parallel
- Retrying failed tasks
- Re-queuing partial data
- Handling timeouts
- Managing token budgets
- Caching compressed representations
- Tracing every run end-to-end
- Ensuring idempotency

4. Maintaining the scraper is half the battle

When the website changes structure (which happens often), your scraper must:
- adapt automatically, or
- be fixable with minimal downtime

You cannot do that reliably in a visual builder. These aren’t static URLs. Each issue is rendered differently and sometimes changes backend structure. Our scraping approach has to evolve constantly. Even a small structure shift breaks an entire n8n chain.

🧠 Results Aren’t Random...They’re Earned in the Reps No One Sees

Just wrapped my morning workout and was reminded of this…

Putting in the work matters... in the gym, in business, in life.

Everyone wants the result:
➡️ The energy
➡️ The confidence
➡️ The example you set for your family

But none of that happens by accident. The gym can be lonely. It’s easy to avoid. Easy to justify skipping. But if you want the outcome… you have to show up.

Your business is the same. You can’t build something meaningful over a weekend and expect it to carry you for decades. You’ll question yourself, sweat, repeat the reps no one sees, and say “no” now so you can say “yes” later.

Wherever you want results (your body, your career, your relationships), it all comes down to consistent work. And in every case I’ve ever seen… it’s worth it.

Building an Agent that analyses 30+ Competitor Newsletters at once. Here’s the system overview!

We’re working with a newsletter agency that wants their competitor research fully automated. Right now, their team has to manually:
- Subscribe to dozens of newsletters
- Read every new issue
- Track patterns (hooks, formats, CTAs, ads, tone, sections, writing style)
- Reverse-engineer audience + growth strategies

We’re trying to take that entire workflow and turn it into a single “run analysis” action.

High-level goal:
- Efficiently scrape competitor newsletters
- Structure them into a compressed format
- Run parallel issue-level analyses
- Aggregate insights across competitors
- Produce analytics-style outputs
- Track every request through the whole distributed system

How the system works (current design):

Step 1 – You trigger an analysis
You give the niche. The system finds relevant competitors.

Step 2 – Scraper fetches issues
Our engine pulls their latest issues, cleans them, and prepares them for analysis.

Step 3 – Convert each issue into a “structured compact format”
Instead of sending messy HTML to the LLM, we:
- extract sections, visuals, links, CTAs, and copy
- convert them into a structured, compressed representation

This cuts token usage down heavily.

Step 4 – LLM analyzes each issue
We ask the model to:
- detect tone
- extract key insights
- identify intent
- spot promotional content
- summarize sections

Step 5 – System aggregates insights
Across all issues from all competitors.

Step 6 – Results surface in a dashboard / API layer
So the team can actually use the insights, not just stare at prompts.

Now I’m very curious: what tech would you use to build this, and how would you orchestrate it?

P.S. We avoid n8n-style builders here. They’re fun until you need multi-step agents, custom token compression, caching, and real error handling across a distributed workload. At that point, “boring” Python + queues starts looking very attractive again.
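For anyone wondering what Steps 3 and 5 could look like in code, here is a rough sketch using only the Python standard library. It is an illustration under assumptions, not our actual format: `CompactIssue`, `compact`, and `aggregate` are hypothetical names, the compact format here keeps only headings, links, and a word count, and treating link anchor text as a rough stand-in for CTAs is a simplification.

```python
from collections import Counter
from dataclasses import dataclass, field
from html.parser import HTMLParser


@dataclass
class CompactIssue:
    sections: list = field(default_factory=list)  # heading texts
    links: list = field(default_factory=list)     # (anchor text, href) pairs
    word_count: int = 0


class _Extractor(HTMLParser):
    """Collects headings, links, and a word count from one issue's HTML."""

    def __init__(self):
        super().__init__()
        self.issue = CompactIssue()
        self._in_heading = False
        self._href = None
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3"):
            self._in_heading = True
            self._buf = []
        elif tag == "a":
            self._href = dict(attrs).get("href")
            self._buf = []

    def handle_data(self, data):
        self.issue.word_count += len(data.split())
        if self._in_heading or self._href is not None:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag in ("h1", "h2", "h3") and self._in_heading:
            self.issue.sections.append("".join(self._buf).strip())
            self._in_heading = False
        elif tag == "a" and self._href is not None:
            self.issue.links.append(("".join(self._buf).strip(), self._href))
            self._href = None


def compact(html: str) -> CompactIssue:
    """Step 3 (sketch): turn raw HTML into a small structured record."""
    parser = _Extractor()
    parser.feed(html)
    parser.close()
    return parser.issue


def aggregate(issues: list) -> dict:
    """Step 5 (sketch): roll per-issue records up into cross-issue stats."""
    link_texts = Counter(text.lower() for i in issues for text, _ in i.links)
    avg_words = sum(i.word_count for i in issues) / len(issues) if issues else 0.0
    return {
        "issues": len(issues),
        "avg_words": avg_words,
        "top_link_texts": link_texts.most_common(5),
    }
```

The idea is that the LLM in Step 4 would receive something like a serialized `CompactIssue` instead of the raw HTML, and `aggregate` would run over the per-issue outputs. Visuals, ad blocks, tone markers, and emoji stats would need their own extraction logic, which is not shown here.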

@kshoneesh-chaudhary-2571

I help Marketing Agencies leverage AI to gain a competitive advantage

Active 21m ago

Joined Dec 2, 2025
