Owned by Josue

The pub where we build the future of sales. Crack a cold one, talk shop, and automate your way to the top.

A space to learn AI and automation, share your journey, and grow together. 🚀

Memberships

- AI Automation Skool: 2.1k members • Free
- AI Automation Station: 2.2k members • Free
- Skoolers: 190k members • Free
- School of Content: 824 members • Free
- AI Systems & Soda: 3k members • Free
- Agent Space: 692 members • Free
- Builder’s Console Log 🛠️: 1.9k members • Free
- Chase AI Community: 36.7k members • Free
- Open Source Voice AI Community: 816 members • Free

110 contributions to AI Automation Society

Testing Clawdbot: What Actually Works 🦞

Been playing with Clawdbot this week and wanted to share my hands-on experience with what works (and what doesn't) after running several real tests.

Quick context: Clawdbot is that open-source "AI employee" everyone's been talking about lately. It can access your local files, calendar, and various apps through integrations. I've been testing it extensively to cut through the hype.

Here's what I found actually works well:
- Notion integration is solid (created and managed a Kanban board seamlessly)
- Spotify control works surprisingly well (played specific songs on command)
- Basic file operations and text generation are reliable
- API integrations (when properly set up) generally work as advertised

What's still rough:
- Browser control is hit-or-miss
- Voice cloning setup is more complex than advertised
- Some integrations require significant troubleshooting
- Response times can be slow

I'm focusing more on learning Claude Code for now, but I'd love to hear from others who've tested Clawdbot. Have you found any particularly useful applications, or is it still too early/rough for serious use?

I'll keep testing and share more specific use cases soon. Drop your thoughts below if you've tried it!

https://youtu.be/QoFg9HA6BsM?si=yeDqsoNNblytYhtW

8 Essential n8n Nodes You Should Master in 2026

I just dropped a new video breaking down the core nodes you need to understand if you want to build solid, scalable automations in n8n this year. It's based entirely on real experience, from simple CRM automations to complex AI-powered workflows with agents, tools, and sub-flows.

In the video, I walk through:
- The triggers you’ll use all the time (and why they’re more powerful than they look)
- How to properly use Set / Edit Fields to clean and structure data
- Why HTTP Request + Code unlock almost any integration
- When to use IF vs Switch
- The difference between AI Agent and Message Model steps
- Why sub-workflows are a must once things start getting complex

If you’ve ever built something that worked but felt overcomplicated, this video will help you rethink how you design automations.

👉 Watch the full breakdown here: https://youtu.be/LOTsPJplHnA?si=e8u7ka7w7w-vfKJt

After watching, I’d also recommend revisiting one of your older workflows and asking yourself: “How would I rebuild this today using these nodes?”

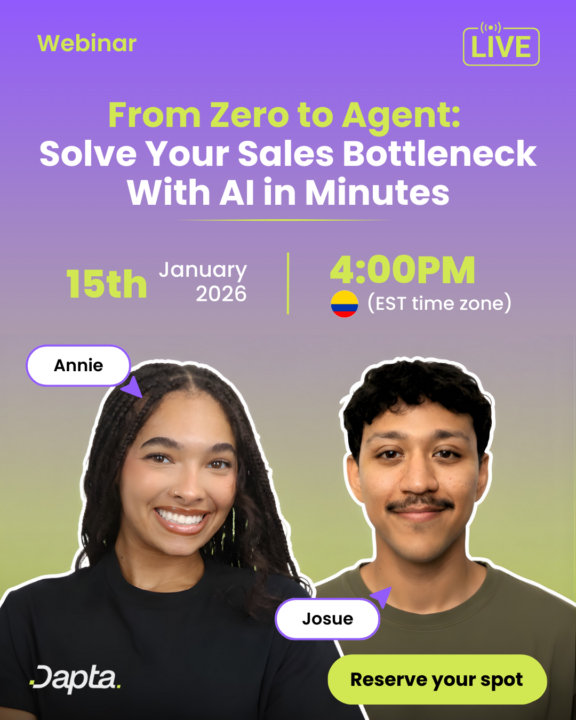

Dapta 101: From Zero to Agent (Live Webinar)

If you’ve been curious about AI sales agents but felt overwhelmed by tools, setup, or where to even start, this webinar is for you.

In Dapta 101: From Zero to Agent, I’ll walk you through how to build your first AI sales agent from scratch. Live. No-code. Beginner-friendly.

What we’ll cover:
• How AI sales agents actually work
• How to go from a blank canvas to a working agent
• A live walkthrough you can follow step by step
• How to use the agent in a real scenario

By the end, you’ll have a clear mental model and a working foundation you can build on. Stick around until the end for a special opportunity to kickstart your agent journey.

Date & Time: Thursday, January 15th, 4:00PM–5:00PM EST
Register here: REGISTER

Auto-Generate YouTube Titles, Descriptions, and Timestamps From a Link

Posting consistently on YouTube gets way easier when you stop doing the repetitive stuff manually. In this video, I show a system that takes a single YouTube link and automatically generates:
- A high-quality title
- A full SEO-optimized description
- Clean chapter timestamps

It then updates the video directly on YouTube.

High-level flow:
1. Submit a YouTube URL through a form
2. Pull the transcript while the video is still unlisted
3. Use AI to generate timestamps first
4. Use AI again to generate the title and description
5. Update the video automatically via the YouTube API

This is the exact system I use to publish my own videos faster without copy-pasting prompts every time. If you want to see how it’s built step by step, the full walkthrough is in the video.

https://youtu.be/F2mmral8PjU?si=VmZ_0WS7Vp7XUyd3

Subscribe for more practical AI and automation tutorials.
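Two small pieces of that flow can be illustrated without any AI or API calls: pulling the video ID out of the submitted link (step 1), and turning AI-generated chapters into clean description lines (step 3). The sketch below is a minimal Python illustration under stated assumptions, not the system from the video. `extract_video_id` and `format_chapters` are hypothetical helpers. The chapter checks follow YouTube's published chapter rules: the first chapter starts at 0:00, there are at least three chapters in ascending order, and each lasts at least 10 seconds. The transcript fetch and the actual update (YouTube Data API `videos.update` with `part=snippet`) are not shown.

```python
import re
from typing import List, Optional, Tuple
from urllib.parse import parse_qs, urlparse

# YouTube video IDs are 11 characters drawn from letters, digits, "-" and "_".
VIDEO_ID_RE = re.compile(r"[A-Za-z0-9_-]{11}")


def extract_video_id(url: str) -> Optional[str]:
    """Return the video ID from common YouTube URL shapes, or None (hypothetical helper)."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links look like https://youtu.be/<id>?si=...
        candidate = parsed.path.lstrip("/").split("/")[0]
    elif host == "youtube.com" or host.endswith(".youtube.com"):
        if parsed.path == "/watch":
            # Standard links carry the ID in the "v" query parameter.
            candidate = parse_qs(parsed.query).get("v", [""])[0]
        elif parsed.path.startswith(("/shorts/", "/embed/")):
            candidate = parsed.path.split("/")[2]
        else:
            return None
    else:
        return None
    return candidate if VIDEO_ID_RE.fullmatch(candidate) else None


def _stamp(seconds: int) -> str:
    """Render seconds as M:SS, or H:MM:SS once the video passes an hour."""
    hours, rem = divmod(seconds, 3600)
    minutes, secs = divmod(rem, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def format_chapters(chapters: List[Tuple[int, str]]) -> str:
    """Turn (start_seconds, title) pairs into description-ready chapter lines.

    Checks YouTube's chapter rules: start at 0:00, at least three chapters,
    ascending order, each at least 10 seconds long. The last chapter's length
    depends on the video duration, which this sketch does not know.
    """
    if len(chapters) < 3:
        raise ValueError("YouTube needs at least three chapters")
    if chapters[0][0] != 0:
        raise ValueError("the first chapter must start at 0:00")
    for (prev_start, _), (start, _) in zip(chapters, chapters[1:]):
        if start - prev_start < 10:
            raise ValueError("chapters must be ascending and at least 10 seconds apart")
    return "\n".join(f"{_stamp(start)} {title.strip()}" for start, title in chapters)


# Example: chapters an AI step might return, appended to a generated description.
chapters = [(0, "Intro"), (95, "Building the form"), (3725, "Updating the video")]
description = "Generated SEO description goes here.\n\n" + format_chapters(chapters)
```

Validating the chapters in code, rather than trusting the model's output, means a malformed timestamp list fails loudly before anything is written back to the video.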

Never Miss a Failed n8n Workflow Again

If you run workflows in production, errors are going to happen. The real problem is not knowing when they happen. In this video, I show a simple way to catch *every* failed execution in n8n and get notified instantly with the exact workflow and execution link.

High-level overview of the setup:
- Use an Error Trigger workflow that fires when another workflow crashes
- Attach it to any production workflow through the workflow settings
- Capture details like the workflow name and execution URL
- Send a Slack message automatically so you can jump straight to the error

This works great if you:
- Manage client workflows
- Run multiple production automations
- Want your team notified the moment something breaks

It's a small setup that saves you a lot of headaches.

https://youtu.be/hL6ev_vIOjQ?si=cGl-swebTmpU5ZzS

Subscribe for more n8n and AI automation tutorials.
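The "capture details, then send to Slack" step is mostly string building. The Python sketch below is a minimal illustration of that logic, assuming the item shape shown in n8n's Error Trigger documentation: a `workflow` object with `name`, and an `execution` object with `url`, `error.message`, and `lastNodeExecuted`. In n8n itself the Slack node would send the message. `build_slack_alert` and `post_to_slack` are hypothetical helpers, for example for a standalone relay, and the webhook URL is a placeholder. The message uses Slack's documented `<url|label>` link syntax and escapes `&`, `<`, and `>` as Slack requires.

```python
import json
import urllib.request
from typing import Any, Dict


def _escape(text: str) -> str:
    """Escape the three characters Slack treats specially in message text."""
    return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")


def build_slack_alert(error_payload: Dict[str, Any]) -> Dict[str, str]:
    """Build a Slack incoming-webhook body from an n8n Error Trigger item.

    Assumes the documented shape: "workflow" (id, name) and "execution"
    (id, url, error, lastNodeExecuted, mode). Missing fields fall back to
    placeholders instead of raising, e.g. when no execution URL is present.
    """
    workflow = error_payload.get("workflow") or {}
    execution = error_payload.get("execution") or {}
    error = execution.get("error") or {}

    name = _escape(str(workflow.get("name", "Unknown workflow")))
    last_node = _escape(str(execution.get("lastNodeExecuted", "unknown node")))
    message = _escape(str(error.get("message", "No error message")))
    url = execution.get("url")

    # Slack link syntax is <url|label>; skip it when n8n gave no URL.
    link = f"<{url}|Open execution>" if url else "No execution URL available"
    text = (
        f":rotating_light: *{name}* failed at node `{last_node}`\n"
        f"Error: {message}\n"
        f"{link}"
    )
    return {"text": text}


def post_to_slack(webhook_url: str, body: Dict[str, str]) -> int:
    """POST the JSON body to a Slack incoming webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


# Example item shaped like an Error Trigger output (values are made up).
sample = {
    "execution": {
        "id": "231",
        "url": "https://n8n.example.com/execution/231",
        "lastNodeExecuted": "HTTP Request",
        "error": {"message": "Request failed with status code 500"},
        "mode": "trigger",
    },
    "workflow": {"id": "1", "name": "Client CRM Sync"},
}
alert = build_slack_alert(sample)
# post_to_slack(SLACK_WEBHOOK_URL, alert)  # hypothetical webhook URL; not called here
```

Falling back to placeholders instead of raising matters here: an alerting workflow that crashes on an unexpected payload would hide the very failure it exists to report.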

@josue-hernandez-1795

Automation and AI Enthusiast

Check out my YT!

https://www.youtube.com/@JosueHernandez-AI

Active 2h ago

Joined Feb 12, 2025

Phoenix, Arizona