Memberships

AI Think Tank

9 members • Free

3 contributions to AI Think Tank

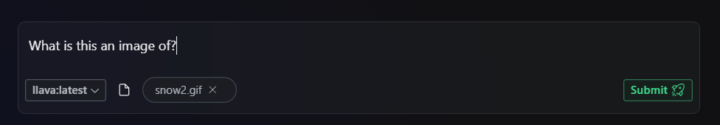

Vision Locally with Llava

Just implemented the ability to upload an image, so a multimodal model like Llava can now be used to analyze and describe images. For the most part, Llava does pretty well at describing images, and with some prompting it can produce output in the format you want.
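For anyone curious what an image upload might look like on the wire, here is a minimal sketch. It assumes the model is served through Ollama's `/api/chat` endpoint, which accepts base64-encoded images in a message's `images` list; the function name `build_vision_payload` and the default model tag `llava` are illustrative choices, not taken from the app itself.

```python
import base64


def build_vision_payload(image_bytes: bytes, prompt: str, model: str = "llava") -> dict:
    """Build a chat payload that attaches one image to a user message.

    Assumption: the target is Ollama's /api/chat endpoint, which expects
    images as base64 strings in the message's "images" list.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # hypothetical default; use whichever vision model you pulled
        "messages": [
            {"role": "user", "content": prompt, "images": [encoded]},
        ],
        "stream": False,
    }
```

The payload can then be sent with any HTTP client, for example after reading the uploaded file with `open(path, "rb").read()`.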

Utilizing Vision with Videos????

I stumbled across this article in the OpenAI Cookbook that shows you how to use vision with videos: https://cookbook.openai.com/examples/gpt_with_vision_for_video_understanding After reading through it, I learned a lot, and it seems like a pretty straightforward way of processing videos through LLMs: take the video, cut it into frames, and then pass some of the frames to the model to create some type of commentary or description. Any ideas for what this can be used for? I'm thinking of maybe a live commentator for sports or movie critique 🤔
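The "pass some of the frames" step is mostly a sampling decision. Here is a rough sketch of one way to pick evenly spaced frames; the function name `sample_frame_indices` and the `max_frames` budget are my own placeholders, and actually decoding the frames (for example with OpenCV) and sending them to a vision model is left out.

```python
def sample_frame_indices(total_frames: int, max_frames: int) -> list[int]:
    """Pick up to max_frames evenly spaced frame indices from a video.

    Sending every frame to a vision model is slow and costly, so only a
    subset is kept. Indices start at 0 and are strictly increasing.
    """
    if total_frames <= 0 or max_frames <= 0:
        return []
    if max_frames >= total_frames:
        # Short clip: keep every frame.
        return list(range(total_frames))
    step = total_frames / max_frames
    return [int(i * step) for i in range(max_frames)]
```

For a 100-frame clip with a budget of 4 frames this selects frames 0, 25, 50, and 75. A live commentator would probably want a sliding window over the most recent frames instead.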

0 likes • Sep '24

Another idea, perhaps: assisting visually impaired individuals with GPT vision.

Some sort of device with two pieces:
- A camera to record your environment, or a screen capture to capture your monitor / TV.
- A small device that can output continuous stages of text in braille (i.e. bumps that can be pushed out or retracted as new text flows into the device).

The flow:
- Use GPT vision to periodically capture the user's surroundings and create brief but descriptive annotations of the user's environment.
- Convert the text generated by GPT into continuous input for the braille device, so the user can read, in somewhat real time, what is happening around them.
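The text-to-braille half of that flow can be sketched in a few lines. This is only an illustration under some loud assumptions: it handles uncontracted letters a-z and spaces only (digits, punctuation, and capitals are not handled), it outputs Unicode braille pattern characters rather than driving real pins, and `cells_per_frame` is a made-up stand-in for the width of a hypothetical refreshable display.

```python
import textwrap

# Raised dot numbers (1-6) for each uncontracted braille letter.
_LETTER_DOTS = {
    "a": (1,), "b": (1, 2), "c": (1, 4), "d": (1, 4, 5), "e": (1, 5),
    "f": (1, 2, 4), "g": (1, 2, 4, 5), "h": (1, 2, 5), "i": (2, 4),
    "j": (2, 4, 5), "k": (1, 3), "l": (1, 2, 3), "m": (1, 3, 4),
    "n": (1, 3, 4, 5), "o": (1, 3, 5), "p": (1, 2, 3, 4),
    "q": (1, 2, 3, 4, 5), "r": (1, 2, 3, 5), "s": (2, 3, 4),
    "t": (2, 3, 4, 5), "u": (1, 3, 6), "v": (1, 2, 3, 6),
    "w": (2, 4, 5, 6), "x": (1, 3, 4, 6), "y": (1, 3, 4, 5, 6),
    "z": (1, 3, 5, 6),
}


def to_braille(text: str) -> str:
    """Map letters to Unicode braille cells; anything else becomes a blank cell."""
    cells = []
    for ch in text.lower():
        dots = _LETTER_DOTS.get(ch, ())
        # U+2800 is the blank cell; dot n sets bit n-1 of the offset.
        cells.append(chr(0x2800 + sum(1 << (d - 1) for d in dots)))
    return "".join(cells)


def display_frames(text: str, cells_per_frame: int = 20) -> list[str]:
    """Split a description into word-wrapped chunks that fit the display width."""
    return [to_braille(chunk) for chunk in textwrap.wrap(text, width=cells_per_frame)]
```

Each string returned by `display_frames` would be one "stage" of text pushed to the device before it refreshes with the next one.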

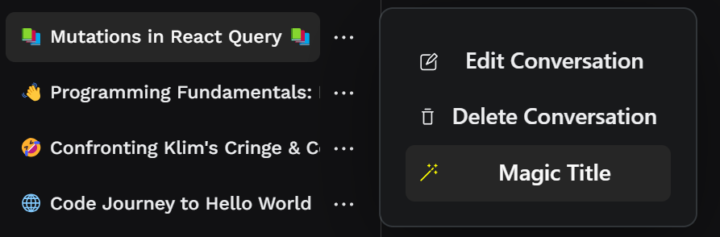

React App to interface with locally hosted LLMs

Figured I would share this app I have been making, mainly to learn React. I host large language models locally using Ollama. With Ollama, you can download and host open source models for chat completions and generation, as well as open source embedding models. Ollama exposes a local API endpoint that can be called with a payload structure similar to OpenAI's.

Endpoint: http://127.0.0.1:11434/api/chat
Payload: {"model":"llama3.1:latest","messages":[{"role":"user","content":"Hello there!"}],"stream":false}

This app is just a chatbot UI using React, Tailwind CSS, Radix UI components, and a Python Django REST API to handle storing conversations, messages, available models, user data, etc. Aside from powering the chatbot, LLMs are also used for other features, like generating meaningful titles for your conversations based on the messages. There are open source multimodal models, like llava (ollama.com), which I could use for analyzing images in the future. I also run image generation models locally, like Stable Diffusion, which could be used for generating images for users.

Code: https://github.com/ethanlchristensen/BS-LLM-WebUI

- Inspiration: nulzo/Ollama-WebUI (github.com)
- Tailwind CSS
- Radix UI (radix-ui.com)
- Ollama
- Django REST framework (django-rest-framework.org)
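To try the endpoint and payload from this post outside the app, a minimal Python sketch using only the standard library could look like the following. The helper names `build_chat_payload` and `chat` and the 120-second timeout are illustrative assumptions; the sketch also assumes Ollama is running locally with `llama3.1:latest` already pulled, and that the non-streaming response carries the reply under `message.content`.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://127.0.0.1:11434/api/chat"


def build_chat_payload(prompt: str, model: str = "llama3.1:latest") -> dict:
    """Build the same payload shape shown in the post."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream
    }


def chat(prompt: str, model: str = "llama3.1:latest", url: str = OLLAMA_CHAT_URL) -> str:
    """POST the payload to Ollama and return the assistant's reply text."""
    body = json.dumps(build_chat_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

With Ollama running, `print(chat("Hello there!"))` should print the model's reply. A backend like the Django API described above would likely wrap a call like this and store the messages alongside it.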
