Memberships

The AI Advantage

70.4k members • Free

11 contributions to The AI Advantage

🤝 Trust Is the Missing Layer in Agentic AI

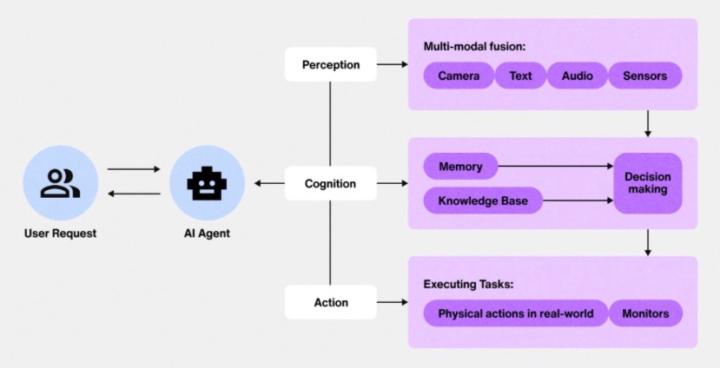

We are not limited by what AI agents can do. We are limited by what we trust them to do. As conversations accelerate around agentic AI, one truth keeps surfacing beneath the hype: capability is no longer the bottleneck; confidence is.

------------- Context: Capability Has Outpaced Comfort -------------

Over the past year, the narrative around AI has shifted from assistance to action. We are no longer just asking AI to help us write, summarize, or brainstorm. We are asking it to decide, route, trigger, purchase, schedule, and execute. AI agents promise workflows that move on their own, across tools and systems, with minimal human input. On paper, this is thrilling. In practice, it creates a quiet tension.

Many teams experiment with agents in contained environments but hesitate to let them operate in real-world conditions. This is not because the technology is insufficient, but because the human systems around it are not ready. The moment an agent moves from suggestion to execution, trust becomes the central question.

We see this play out in subtle ways. Agents are built, then wrapped in excessive approval steps. Automations exist but are rarely turned on. Teams talk about scale while still manually double-checking everything. These are not failures. They are signals that trust has not yet been earned.

The mistake is assuming that trust should come automatically once the technology works. In reality, trust is not a technical feature. It is a human layer that must be intentionally designed, practiced, and reinforced.

------------- Insight 1: Trust Is Infrastructure, Not Sentiment -------------

We often talk about trust as if it were an emotion, something people either have or do not. In AI systems, trust functions more like infrastructure. It is built through visibility, predictability, and recoverability. Humans trust systems when they can understand what is happening, anticipate outcomes, and intervene when something goes wrong.

When those conditions are missing, even highly capable systems feel risky. This is why opaque automation creates anxiety, while imperfect but understandable systems feel usable.

The Secret to Getting 10x More Relevant Results in ChatGPT

In this video, I show you every way to customize ChatGPT as of October 2025. This includes personalization options for both the free and paid plans, so no matter how you use ChatGPT, this video will teach you how to set it up to get the best results!

This Week in AI...

This week, I show off some results of my Claude Cowork testing, the new Scribe v2 transcription model from ElevenLabs, and Midjourney's new Niji 7 model. Plus, I discuss the rising "AI for shopping" trend and OpenAI's new healthcare initiative. All that and more in the video. Enjoy!

🔁 How Micro-Adaptations Build Long-Term AI Fluency

One of the most persistent myths about AI fluency is that it requires big changes: new systems, redesigned workflows, or dramatic shifts in how we work. This belief quietly stalls progress because it makes adoption feel heavier than it needs to be. In reality, long-term fluency with AI is almost always built through small, consistent adjustments rather than sweeping transformations.

------------- Context: Why We Overestimate the Size of Change -------------

When people think about becoming “good” with AI, they often imagine a future version of themselves who works completely differently. Their days look restructured. Their tools look unfamiliar. Their thinking feels more advanced. That imagined gap can feel intimidating enough to delay action altogether.

In organizations, this shows up as waiting for perfect systems. Teams postpone experimentation until tools are approved, policies are finalized, or training programs are complete. While these steps matter, they often create the impression that meaningful progress only happens after a major rollout.

At an individual level, the same pattern appears. We wait for uninterrupted time, for clarity, for confidence. We assume that if we cannot change everything, it is not worth changing anything. As a result, adoption stalls before it begins.

Micro-adaptations challenge this assumption. They suggest that fluency does not come from overhaul. It comes from accumulation.

------------- Insight 1: Fluency Is Built Through Repetition, Not Intensity -------------

Fluency with AI looks impressive from the outside, but its foundations are remarkably ordinary. It is built through repeated exposure to similar tasks, similar decisions, and similar patterns of interaction.

Small, repeated uses allow us to notice how AI responds to our inputs over time. We begin to see what stays consistent and what varies. This pattern recognition is what turns novelty into intuition.

Intense bursts of experimentation can feel productive, but they often fade quickly. Without repetition, learning remains shallow. Micro-adaptations, by contrast, embed learning into everyday work where it has a chance to stick.

Claude Cowork is Here! Full Breakdown + Testing...

Anthropic just released Claude Cowork, the next evolution of Claude built on top of the incredibly effective Claude Code architecture. It essentially gives Claude Code's abilities to everyone, even if they aren't developers or comfortable using a terminal. Watch the video for a full breakdown and testing!



Joined Jan 14, 2026
