
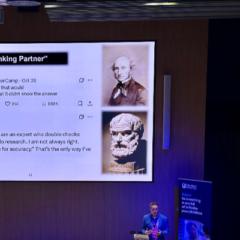

ChatGPT as a "Thinking Partner"

A question that keeps coming up in conversations about Responsible AI is how we can use tools like ChatGPT as genuine thinking partners rather than just answer generators.

A lot of advice focuses on prompting models to disagree or argue. That instinct makes sense, because challenge is central to learning. But there is a deeper issue: LLMs tend to mirror the behaviour we reward, so a single instruction to push back rarely produces the kind of grounded disagreement we expect from a real dialogue.

Two older ideas help explain why.

1. Mill’s idea of “collision with error.”

We learn when our ideas meet resistance. Simply telling an AI to be sceptical doesn't recreate the friction that comes from genuine disagreement.

2. Aristotle’s view that good judgement is a habit.

AI can provide polished answers instantly, but the real work is slower: questioning assumptions, testing ideas, and building habits of critical thinking.

The broader point is that in an AI-rich environment, the skill that matters most is still how we think, not just how we prompt.

Curious how others approach this.

What habits or techniques help you get deeper thinking, rather than just smoother answers, from AI tools?

